THE DEVELOPER PRODUCTIVITY

ENGINEERING HANDBOOK

A Complete Guide to Developer Productivity

Engineering for Practitioners

Hans Dockter et al.

Version 2.0-local, 2022-03-11

Table of Contents

THE DEVELOPER PRODUCTIVITY ENGINEERING HANDBOOK: A Complete Guide to Developer

Productivity Engineering for Practitioners . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê1

Acknowledgements

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê2

Preface

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê3

Introduction

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê6

Part 1 - DEVELOPER PRODUCTIVITY ENGINEERING DEFINITIONS, DRIVERS & CONCEPTS

. . . . . . . . . Ê8

1. Developer Productivity Engineering Defined

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê9

2. The Intuitive Case for Developer Productivity Engineering

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê10

2.1. The creative flow of software developers depends on toolchain effectiveness

. . . . . . . . . . . Ê10

2.2. Effective collaboration depends on toolchain effectiveness

. . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê11

2.3. Overall team productivity depends on toolchain effectiveness

. . . . . . . . . . . . . . . . . . . . . . . . . Ê11

2.4. Software productivity suffers without Developer Productivity Engineering

. . . . . . . . . . . . . Ê12

3. Forming a Developer Productivity Engineering Team—Why Now?

. . . . . . . . . . . . . . . . . . . . . . . . . Ê14

3.1. DPE requires focus

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê14

3.2. DPE delivers a compelling ROI

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê14

3.3. DPE provides competitive advantage

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê15

3.4. Conclusion: Forward-thinking development organizations are all in with DPE

. . . . . . . . . . Ê15

4. Developer Productivity Engineering’s Place in the Broader Developer Productivity Solution

Landscape

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê16

4.1. Advantages of Developer Productivity Management

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê16

4.2. Limitations of Developer Productivity Management

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê17

4.3. Advantages of Developer Productivity Engineering

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê18

4.4. Limitations of Developer Productivity Engineering

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê20

4.5. Conclusion

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê20

5. DPE Solutions Overview

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê21

Part 2 - FEEDBACK CYCLES AND ACCELERATION

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê22

6. The Essential Role of Faster Feedback Cycles

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê23

6.1. Faster builds improve the creative flow

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê23

6.2. Developer time is wasted waiting for builds and tests to finish

. . . . . . . . . . . . . . . . . . . . . . . . . Ê24

6.3. Longer builds mean more context switching

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê24

6.4. The effect of failed builds on build time and context switching

. . . . . . . . . . . . . . . . . . . . . . . . Ê25

6.5. Longer builds are harder to troubleshoot

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê25

6.6. Time to troubleshoot grows exponentially with problem detection time

. . . . . . . . . . . . . . . . Ê26

6.7. Longer builds lead to more merge conflicts

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê26

6.8. The effect of build speed on large versus small projects

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê27

6.9. Many companies are moving in the wrong direction

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê27

6.10. A final word of caution: The problem grows with your success

. . . . . . . . . . . . . . . . . . . . . . . Ê28

6.11. Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê28

7. Faster Builds by Doing Less with Build Caching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê29

7.1. Local vs remote build cache

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê35

7.2. Build cache effectiveness

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê36

7.3. Sustaining cache benefits over time

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê36

7.4. Summary

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê37

8. Test Distribution: Faster Builds by Distributing the Work

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê38

8.1. Traditional test parallelism options and their limitations

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê38

8.2. Capabilities of a fine-grained and transparent test distribution solution

. . . . . . . . . . . . . . . . Ê40

8.3. Test Distribution complements build caching

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê41

8.4. Test Distribution case study - Eclipse Jetty project

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê41

8.5. Conclusion

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê45

9. Performance Profiling and Analytics

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê46

9.1. Maximum Achievable Build Performance (MABP) vs Actual Build Performance (ABP)

. . . Ê46

9.2. Recognize the importance of inputs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê47

9.3. Data is the obvious solution

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê48

9.4. The collaboration between developers and the development-infrastructure teams

. . . . . . Ê49

9.5. Be proactive and less incident-driven

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê50

9.6. See the big picture with performance analytics

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê51

9.7. Performance profiling with Build Scan™

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê52

9.8. ABP vs MABP revisited

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê53

Part 3 - TROUBLESHOOTING FAILURES AND BUILD RELIABILITY

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê54

10. Failure Types and Origins

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê55

10.1. Common build failure root causes

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê55

10.2. Classifying failure types and determining ownership

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê55

10.3. The role of DPE in addressing build failures

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê56

11. Efficient Failure Troubleshooting

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê57

11.1. Data contextualization is the key to easier and more efficient troubleshooting

. . . . . . . . . Ê57

11.2. Implementation example: Leveraging Build Scan™

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê59

11.3. A spotlight on toolchain failures

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê59

11.4. The role of historical test data

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê59

11.5. Comparing builds to facilitate debugging

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê60

11.6. Summary

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê60

12. The Importance of Toolchain Reliability

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê61

12.1. What is toolchain reliability?

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê61

12.2. The connection between performance and reliability

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê62

12.3. The connection between reliability issues and morale

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê62

12.4. The importance of issue prevention

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê63

12.5. The difference between reproducible and reliable builds

. . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê63

13. Best Practices for Improving Build Reliability with Failure Analytics . . . . . . . . . . . . . . . . . . . . . . Ê65

13.1. Avoid complaint-driven development

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê65

13.2. Use data to systematically improve reliability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê65

13.3. Continuously measure and optimize . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê66

14. Improving Test Reliability with Flaky Test Management

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê68

14.1. What is a flaky test?

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê68

14.2. Flaky test identification strategies

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê68

14.3. Measuring the impact of flaky tests

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê69

14.4. Flaky test mitigation strategies

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê69

14.5. Leveraging data to address flaky tests

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê70

14.6. Summary

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê71

Part 4 - ECONOMICS

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê72

15. Quantifying the Cost of Builds

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê73

15.1. Meet our example team

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê73

15.2. Waiting time for builds

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê74

15.3. Local builds

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê74

15.4. CI builds

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê75

15.5. Potential investments to reduce waiting time

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê75

15.6. The cost of debugging build failures

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê76

15.7. Faulty build logic

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê77

15.8. CI infrastructure cost

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê78

15.9. Overall costs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê78

15.10. Why these opportunities stay hidden

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê79

15.11. Conclusions

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê79

16. Investing in Your Build: The ROI calculator

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê81

16.1. How to use the build ROI calculator

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê81

Next steps: Where to go from here

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê86

THE DEVELOPER PRODUCTIVITY

ENGINEERING HANDBOOK: A Complete

Guide to Developer Productivity

Engineering for Practitioners

© 2022 Gradle Inc. All rights reserved. Version 2.0-local.

Published by Gradle Inc.

No part of this publication may be reproduced, stored in a retrieval system or transmitted in any

form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise

except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without the

prior written permission of the publisher.

Copy Editor: Wayne Caccamo, with Justin Reock and Raju Gandhi

Cover Design: Charles Aweida

1

Acknowledgements

Over the last decade we have been fortunate to work with literally hundreds of the world’s top

engineering teams and thousands of developers who have so generously shared their experience,

stories, challenges, and successes in the software development process.

What is clear to us from working with you is that Developer Productivity Engineering is quickly

becoming a critical practice in any software engineering organization that takes industry

leadership and the developer experience seriously. We welcome your continued contributions to

the ideas in this book.

We would also like to thank all the talented and dedicated engineers at Gradle Inc.

Hans Dockter and the team at Gradle Inc.

2

Preface

Developer Productivity Engineering (DPE) is a software development practice used by leading

software development organizations to maximize developer productivity and happiness. This book

represents the proverbial “living document” that will be updated with new Developer Productivity

Engineering concepts, best practices and enabling technologies as we invent and discover them

over time. This is the second major update to the initial document which was published in 2019. We

will notify you about future updates if you have registered to receive this book, and we continue to

encourage you to give us your feedback at

devprod-eng-book@gradle.com.

About this book

In this book we share developer productivity engineering techniques and stories about how to:

• Understand the importance of fast feedback cycles and early discovery of bugs

• Quantify the costs of low-productivity development environments that waste time waiting for

slow builds, tests, and CI/CD pipelines

• Organize the data required to understand, troubleshoot, and improve essential development

processes like builds, tests, and CI/CD pipelines

• Use acceleration technologies like caching and test distribution to speed up feedback cycles

• Use data to proactively improve the reliability of the development toolchain

• Find and fix frequent errors and noisy signals like flaky tests

It consists of four parts:

•

Part 1 - DEVELOPER PRODUCTIVITY ENGINEERING DEFINITIONS, DRIVERS & CONCEPTS

Part 1 defines Developer Productivity Engineering (DPE) and important related concepts, makes

an intuitive case for investing in a DPE initiative now, and positions DPE in the broader

developer productivity solution landscape.

•

Part 2 - FEEDBACK CYCLES AND ACCELERATION

Part 2 describes the essential role of fast feedback cycles in achieving DPE excellence and three

key enabling technologies for achieving feedback cycle acceleration: Build caching, Test

Distribution, and Performance Profiling & Analytics.

•

Part 3 - TROUBLESHOOTING FAILURES AND BUILD RELIABILITY

Part 3 describes failure types and their origins, principles of efficient troubleshooting, and the

importance of toolchain reliability for improving productivity and the developer experience. It

also covers best practices for achieving build reliability leveraging failure analytics and flaky

test management tools.

• Part 4 - ECONOMICS

Part 4 provides a recipe for quantifying the observable and hidden costs of slow builds and tests

as a basis for determining the potential ROI of a DPE initiative investment in improving build

and test speeds and making troubleshooting more efficient. It also introduces a straight-forward

ROI calculator (online tool) that you can use to estimate your own DPE initiative ROI leveraging

your own data.

3

The chapters are independent stories so feel free to skip around and read the sections you find

most useful now.

About the cover art

Charles Aweida and Ari Zilnik designed the cover art in collaboration with illustrator Roger Strunk.

The illustration represents a Developer Productivity Engineering team looking at metrics and

collaborating with the developers to increase the productivity of their software engineering factory.

Conventions

As this book is about real-world examples and stories, we don’t have a lot of code to show. We

use the following convention for calling out short anecdotes that complement the text.

Reader feedback

Feedback is always welcome, and we hope to continue to add to this body of experience and

thought leadership. You can reach out to us at

devprod-eng-book@gradle.com.

About the Authors

Hans Dockter is the founder and project lead of the Gradle build system and the CEO of Gradle Inc.

Hans is a thought leader in the field of project automation and has successfully led numerous large-

scale enterprise builds. He is also an advocate of Domain-Driven-Design, having taught classes and

delivered presentations on this topic together with Eric Evans. In the earlier days, Hans was also a

committer for the JBoss project and founded the JBoss-IDE.

4

Wayne Caccamo is the VP of Marketing at Gradle Inc. and has served in executive roles at several

successful technology startups and has held leadership posts at Oracle and Hewlett-Packard where

he was the founder and director of the HP Open Source Solutions Operation (OSSO).

Eric Wendelin leads the Analytics team at Gradle Inc. His mission is to make software assembly

faster and more reliable through data. Prior to joining Gradle, he led engineering teams at Twitter

and Apple.

Marc Philipp leads the Test Distribution team at Gradle Inc. as a Senior Principal Software

Engineer. He is a long-time core committer, maintainer, and team lead for the JUnit open source

project and initiator of the JUnit Lambda crowdfunding campaign that started what has become

JUnit 5.

About Gradle Inc.

Our purpose is to empower software development teams to reach their full potential for joy,

creativity, and productivity. We are the provider of

Gradle Enterprise, the premier enabling

technology for the practice of Developer Productivity Engineering and the company behind the

popular open-source

Gradle Build Tool used by millions of developers.

To learn more, contact us at:

https://gradle.com/enterprise/contact/.

5

Introduction

I started the Gradle project out of deep frustration in working in low-productivity environments.

The amount of time it took to make progress and get feedback on changes in the enterprise

environment was incompatible with how I work and was taking the passion and satisfaction away

from the craft of software development. Later I was joined by our Gradle co-founder, Adam

Murdoch, and many other talented developers. Over the last decade we have worked with

hundreds of the top development teams from every industry and every region.

This book represents not only our learnings from that exciting journey but also what we see by

working with cutting-edge teams from companies like AirBnB, Netflix, Microsoft, LinkedIn, Spotify,

Twitter and many others. These teams make Developer Productivity Engineering (DPE) a dedicated

practice and have executive sponsorship for this effort. The company as a whole realizes that their

developer’s ability to innovate at their full potential is critical for leading their respective industry.

They have dedicated teams of experts with a sole focus on DPE to improve the toolchain, accelerate

feedback cycles, and provide a great developer experience. The lessons we present in this book

apply to any technology stack, language, framework or build system.

DPE is not only for visionaries and innovators with formal dedicated teams in place. It should

appeal to any company whose success depends increasingly on the productivity of development

teams and ensuring a positive developer experience to recruit and retain talent. Specifically, we

wrote this book for:

• Senior software developers that are responsible for their teams' productivity and morale of

their team(s) and are particularly focused on increasing trust and confidence in the toolchain to

encourage the right behaviors

• Build engineers responsible for enabling fast and frequent software delivery, improving trust

and confidence in toolchain, and making teaming and collaborations with the development

team more efficient

• DPE managers and champions looking for a comprehensive guide to the DPE practice that

they can reference, share, and use to socialize the key concepts and tools across the

organization

• Engineering management (e.g. VP or Director level) open to new strategies to decrease time-to-

market (TTM) and minimize cost while maintaining and improving quality

• Members of the development team looking to make an impact, build influence and gain

respect by bringing solutions that address personal and team frustrations, or individuals that

see themselves as future DPE champions but need help understanding and building a business

case

• CI teams who want to learn how to contribute to the developer experience beyond providing CI

as a service. This includes faster CI builds and more efficient ways for developer and CI

engineers to troubleshoot failed CI builds, as well as contain the spiraling costs of their CI

infrastructure and compute resources. Most importantly, this means changing from a reactive

pattern to a proactive pattern when it comes to CI reliability.

No matter what your role or objective, this book is chock full of personal experiences, stories, and

lessons learned that address how to:

6

• Understand the importance of fast feedback cycles and catching errors earlier

• Quantify the costs of a wasted time waiting for builds, tests, and CI/CD pipelines

• Organize the data required to understand, troubleshoot, and improve essential development

processes like builds, tests, and CI/CD

• Use acceleration technologies to avoid unnecessary work such as caching and distribution that

speed up feedback cycles and improve CI efficiency

• Efficiently discover and eliminate some of the most egregious and avoidable productivity

bottlenecks such as frequent errors and false signals like flaky builds and tests

Hans Dockter, Founder and CEO of Gradle, Inc.

7

Part 1 - DEVELOPER PRODUCTIVITY

ENGINEERING DEFINITIONS,

DRIVERS & CONCEPTS

Developer Productivity Engineering Defined

The Intuitive Case for Developer Productivity Engineering

Forming a Developer Productivity Engineering Team—Why Now?

Developer Productivity Engineering’s Place in the Broader Developer Productivity

Solution Landscape

DPE Solutions Overview

8

1. Developer Productivity Engineering

Defined

Developer Productivity Engineering (DPE) is a software development practice used by leading

software development organizations to maximize developer productivity and happiness. As its

name implies, DPE takes an engineering approach to improving developer productivity. As such, it

relies on automation, actionable data, and acceleration technologies to deliver measurable

outcomes like faster feedback cycles and reduced mean-time-to-resolution (MTTR) for software

failures. As a result, DPE has quickly become a proven practice that delivers a hard ROI with little

resistance to product acceptance and usage.

This contrasts with a Developer Productivity Management (DPM) approach that views developer

productivity as a people challenge that can be addressed by applying management best practices

and developer activity-based metrics.

Organizations apply the practice of DPE to achieve their strategic business objectives such as

reducing time to market, increasing product and service quality, minimizing operational costs, and

recruiting and retaining talent by investing in developer happiness and providing a highly

satisfying developer experience. DPE accomplishes this with processes and tools that gracefully

scale to accommodate ever-growing codebases.

Most mature industries have engineering disciplines dedicated to making production efficient and

effective. This includes chemical engineering and industrial engineering sectors that have process

experts widely understood to be essential to their firm’s economic success and long-term

competitiveness.

Developer Productivity Engineering is of similar importance when it comes to the production of

software with similar stakes in achieving a company’s economic potential and global competitive

impact. It is quickly becoming a critical practice within software engineering organizations that

strive for industry leadership across diverse and highly dynamic marketplaces.

9

2. The Intuitive Case for Developer

Productivity Engineering

By Hans Dockter

When making a business case for investing in any kind of business or technical initiative it’s

important to provide management with a strong ROI story. Fortunately, the practice of DPE delivers

a hard and compelling ROI. A financial model for generating an ROI estimate is described in Part 4

of this book.

However, process improvement initiatives will fail without bottom-up, grass-roots user acceptance.

The intuitive case for DPE is not based on the impact to management and financial objectives like

development costs, time-to-market, and quality. Instead, it is based on a visceral understanding of

the pains experienced every day by developers from problems that DPE addresses — slow builds

and inefficient troubleshooting, to name two — and the impact they have on the developer

experience and productivity.

The intuitive case for DPE starts with and is built upon the intuitive premise that software

productivity depends heavily on toolchain effectiveness. This is because toolchain effectiveness can

be defined as the ability to facilitate developer creative flow, collaboration, and team productivity.

It concludes that software productivity suffers without DPE.

2.1. The creative flow of software developers depends

on toolchain effectiveness

Software development is a highly creative process. It is similar to the creativity of an experimental

scientist. Scientists have a hypothesis and then enter into a dialogue with nature via experiments to

determine if the hypothesis is correct. Our hypothesis is that our code runs and behaves as we

expect it to and our dialogue is with the compiler, unit tests, integration, performance tests, and

other feedback mechanisms to validate our hypothesis. The quality of the creative flow for your

developers depends on the timeliness and quality of the response.

In an ideal world the answers are available instantaneously, they are always correct, and changes

are immediately available at runtime. This was more or less our experience when we started

developing software as kids. It was fun, productive, and addictive. But what if, when you wrote

your first Hello World, it took a full minute to render that familiar and friendly greeting? Would

you have continued with coding? I’m not sure I would have.

10

2.2. Effective collaboration depends on toolchain

effectiveness

Now, many of us are enterprise software developers and instant feedback has given way to long

wait times, complex collaborative processes and additional dialogue with stakeholders and tools.

For example, first you must correctly interpret a business expert or customer requirement and

translate it into code. Then, you need to run your code to get feedback on the correctness of your

interpretation. Few developers operate in a vacuum in these environments. The quality of the

collaboration depends on how quickly you can iterate.

2.3. Overall team productivity depends on toolchain

effectiveness

Team productivity is determined by the quality of the individual developer’s creative flow and the

effectiveness of your collaboration. Both are strongly correlated to the toolchain effectiveness. Your

toolchain can create a major bottleneck for your productivity and your success. The developer

toolchain in the enterprise is an ever-changing and complex piece of machinery with ever-changing

and complex inputs. Sub-optimal feedback cycle times and unreliable feedback is the norm. This is

commonly the case since most enterprises do not performance manage or quality control their

toolchain by leveraging modern acceleration or troubleshooting technologies. In a recent study,

over 90% of survey respondents indicated that improving time spent waiting on build and test

feedback was a major challenge (source:

TechValidate). As a result, developers are stuck believing

that slow, unreliable feedback is a given in the enterprise. It doesn’t have to be that way.

11

Successful projects, in particular, suffer from inefficient toolchains. An

unmanaged and unaccelerated toolchain will collapse under the pressure of

project expansion as tech stack diversity and the number of lines of code,

developers, locations, dependencies, and repositories continue to grow, often

exponentially.

2.4. Software productivity suffers without Developer

Productivity Engineering

As a company grows, it needs a dedicated team of Developer Productivity Engineering experts. This

practice has the sole focus of optimizing the effectiveness of the developer toolchain to achieve a

high degree of automation, and fast and reliable feedback.

The job of DPE is to improve developer productivity across its entire lifecycle and to close the

significant and growing gap between actual and potential software development team productivity.

Too often CI/CD teams are not responsible for developer productivity and make decisions that

optimize for other metrics, such as auditability or vulnerability checking without taking into

account the adverse effects to developer productivity. The priorities and success criteria for a DPE

team are primarily based on data that comes from a fully instrumented toolchain. For such a team

to be successful it needs a culture that values and is committed to the continuous improvement of

developer productivity.

A developer’s happiness depends on their productivity. Most developers want to work in an

environment that enables them to work at their full potential. Organizations that cannot provide

such an environment are seeing a drain on their talent leading to project slowdowns or outright

abandonment.

The economic benefits of not applying this practice are dramatic. Development productivity

improvements provide significant return for every dollar invested in software development. The

amount of features that can ship is heavily affected by it. The productivity gap that comes from not

applying the practice of Developer Productivity Engineering is a major competitive disadvantage.

Innovation is at the heart of any business to survive. Today most innovations are software-driven.

In fact, a December 2020 report from IDC predicted that 65% of the global GDP will be digitally

transformed by now (2022). That means that the productivity of software development teams is

crucial for your business to survive, and developer productivity on the whole has a global impact.

Developer Productivity Engineering applies broadly across the software development process

landscape. It’s impact starts with the coding process and extends to CI/CD to the point where

DevOps distributes and scales production applications.

12

Figure 1. DPE is not a new phase of the SDLC, but a practice that supports multiple phases with a current

focus on Build, Test and CI.

To be clear, Developer Productivity Engineering is not about treating developers like factory

workers but instead like creative craftspeople. It is about the developer experience, unlocking

creative flow, restoring the joy of development, and facilitating better collaboration with the

business.

It’s easy to understand at an intuitive level why DPE is important to developer productivity and the

developer experience. Fortunately, one can also make a straightforward economic argument for

DPE based on a hard ROI that has been proven in over a hundred of the most important technology

and global business brands. This is covered in

Part 4 - ECONOMICS.

13

3. Forming a Developer Productivity

Engineering Team—Why Now?

By Wayne Caccamo

DPE is becoming the most important movement in software development and delivery since the

introduction of Agile, lean software development, and DevOps methodologies and tools. For many

companies, initiatives and experiments to boost developer productivity have been spearheaded

primarily by lead developers and build engineers, who strive to mature their processes and find

enlightened ways to create a cutting-edge development experience for their teams.

Rather than this largely ad hoc approach, the most successful DPE-enabled businesses formalize

those investments by establishing a dedicated team. Here are three reasons why it makes sense for

your company to launch a DPE initiative now.

3.1. DPE requires focus

To achieve excellence in any discipline requires focus, and focus in business requires dedicated

resources. Dedicated teams have a better chance to succeed for several reasons, particularly when

it comes to DPE. First, the mere existence of a DPE team signals that this is a management priority

with executive buy-in, and that developer productivity engineers (like release engineers and build

engineers) are first-class members of the engineering team.

Second, by standing up a dedicated team, management acknowledges that a separate set of goals

and metrics are needed to assess productivity and, ideally, will make meeting those goals the focus

of at least one person’s full-time job.

Dedicated teams also enjoy the autonomy to manage and develop their own talent and unique skill

sets. For DPE to succeed, it is necessary to find software development professionals that have a

passion and affinity for supporting development teams in matters of productivity and efficiency.

Finally, the mere presence of dedicated teams reflects a milestone in the maturity of any new

business or technical discipline. In this context, it is noteworthy that many high-profile companies

that have achieved or aspire to a high level of maturity in this area have long ago established

dedicated DPE teams.

3.2. DPE delivers a compelling ROI

While you can realize many DPE benefits without a dedicated full-time team, the magnitude and

sustainability of those gains won’t be as great. For example, it’s easy to quantify the annual dollar

savings from using DPE build and test acceleration technologies, like build caching and test

distribution, to shave minutes off your average build time:

cost per engineering minute * average build time reduction (minutes) * average number of builds

per year

A dedicated team not only provides the expertise to implement these DPE acceleration technologies

and optimize their results, but can also ensure that build, test, and restore times don’t regress as the

14

project or product evolves .

For many moderately sized development teams this quickly translates into double digit savings,

measured in engineering years, or budget dollars measured in millions. This alone can justify your

investment in a dedicated DPE team and tools many times over, without even considering the

myriad other hard and soft benefits. Such benefits include dramatically reduced mean-time-to-

resolve (MTTR) for software incidents, better management of flaky tests and other avoidable

failures, and more efficient CI resource consumption.

3.3. DPE provides competitive advantage

Winning, or at least not losing the war for software development talent, is mission-critical for many

companies. As a result, attracting and retaining top talent depends on the quality of the developer

experience you can provide.

We know that the best way to improve the developer experience is to give them back the time they

spend doing things they hate, like waiting for builds to complete and debugging software. That time

can be better spent doing the one thing they love most—building great software features that

delight end users.

These days, many companies use the existence of their dedicated DPE teams as a recruiting and

retention tool because it demonstrates their commitment to providing a rewarding developer

experience.

Businesses have a history of establishing dedicated teams at all levels to drive strategic initiatives

aimed at gaining a competitive edge or remaining competitive, such as innovation and digital

transformation teams. For example, it’s not uncommon to see dedicated teams driving productivity

and efficiency initiatives in areas like business process engineering (e.g. Lean, Six Sigma),

manufacturing (e.g. Total Quality Management, JIT), and industrial process engineering. Given the

global strategic importance of software development and delivery, shouldn’t the experience of

creating that software have the same priority?

3.4. Conclusion: Forward-thinking development

organizations are all in with DPE

For most organizations, DPE should no longer be viewed as an informal, reactive, and opportunistic

job to be done by developers in their spare time. Forward-thinking engineering teams have

discovered that:

1. Systemic developer productivity gains require organizational focus

2. The business case for DPE is a no-brainer

3. The reward for taking action is sustainable competitive advantage

15

4. Developer Productivity Engineering’s

Place in the Broader Developer Productivity

Solution Landscape

By Wayne Caccamo

As alluded to in chapter 1, there are two primary—and complementary—approaches to improving

developer productivity. Both aim to use resources more efficiently and help ship code faster, while

optimizing the developer experience.

Developer Productivity Management (DPM) focuses on the people, and answers questions like,

“How can we get more output out of individual developers and teams by defining and tracking the

right metrics?” Such metrics typically help to quantify output, evaluate performance and

competencies, build and manage teams, and optimize collaboration and time management.

Developer Productivity Engineering (DPE) focuses on the process and technology, and answers

questions like, “How can we make feedback cycles faster using acceleration technologies to speed

up builds and tests?” and “How can we make troubleshooting easier, faster, and more efficient

using data analytics?”

In analogous terms, suppose you’re the owner of an auto racing team. To win, you need both the

best drivers and the fastest cars. In the software engineering world, the drivers are your

developers, while the cars are your highly performant processes and technology toolchain.

This chapter surveys the relative advantages and disadvantages of DPM and DPE, while ultimately

recommending a DPE-first approach. In other words, no matter how good your drivers are, it’s hard

to win races without the fastest cars.

4.1. Advantages of Developer Productivity

Management

DPM can provide management insights through cohort analysis

DPM aims to give engineering management in-depth insight into the performance of their

individual developers and teams who are frequently geographically spread throughout the world.

These insights may be used to increase leadership’s understanding of the organization, give

decision makers more confidence, and provide opportunities for continuous improvement. DPM

does this through cohort analysis, which filters productivity metrics and trends by team locations

(on-site or local), domain (front-end or back-end), seniority, geography, team sizes, and technical

environment.

In contrast, while DPE also provides activity and outcome metrics by team member and technical

environment, these metrics are mainly used to identify, prioritize, and fix toolchain specific

problems like builds and tests.

16

DPM may be useful in building and managing teams and time

DPM can help you build high-performing teams through cohort analysis data about ideal team

sizes, makeup, and structure. Further, DPM metrics can be used to improve project forecasts and

estimates, prioritize work, evaluate project health and risk, and assign developers to teams based

on demonstrated skills and needed competencies.

Managing time is a primary focus of many DPM tool vendors. Using data to simply strike the right

balance between coding time and meeting time, for example, can make a tremendous difference in

team output. Deeper DPM data that focuses on the days of the week and times of the day that

optimize meeting effectiveness can further improve productivity.

DPM may be useful in optimizing individual performance

DPM metrics can be used both to measure individual output, and as inputs for evaluating

individual performance. They can also help with identifying and closing skill gaps and allowing

individuals to showcase their own domain expertise and competencies. Perhaps most importantly,

DPM can be used to monitor changes in individual activity levels (e.g., velocity) to detect if a

developer is stuck and requires some kind of intervention.

4.2. Limitations of Developer Productivity

Management

Human productivity metrics are flawed

DPM assumes there is a reliable and meaningful way to measure developer productivity. After all,

you can’t measurably improve productivity if you can’t define a baseline. In "The Pragmatic

Engineer," Gergely Orosz argues that you can’t measure the productivity of knowledge workers like

doctors, lawyers, chemists, and software engineers. He concludes that for software engineering in

particular, individual metrics can be gamed, don’t tell the whole story or, in some cases, are

completely arbitrary.

Many metrics violate “Do no harm”

The fact is there is no real consensus on what is a valuable human productivity metric that makes

sense across the industry. Moreover, many human productivity metrics can lead to behavior

changes—such as the number of commits per day—that can be counterproductive and antithetical

to the business objectives they are designed to support.

In “The Myth of Developer Productivity” (Nortal Cloud Team, 2018), the authors review several

common developer productivity management metrics, such as hours worked, source lines of code

(SLOC) produced, bugs closed, function points, and defect rates. They conclude that most are more

harmful than useful in managing developer productivity, in part because they lack context.

Consider the developer whose commits and code review metrics are down, but only because s/he

spent more time mentoring others and helping them increase their own productivity. Also consider

the “highly productive” developer who cranks out many lines of inefficient code and who will fly

under the radar when a business isn’t scrutinizing both people and outcomes.

17

Gut instinct is still king

According to developer productivity thought leader Bill Harding (“Measuring developer

productivity in 2020 for data-driven decision makers”), due to the challenges of measuring

developer output and using that data to improve team productivity, managers often hone their own

intuition to serve as their ‘north star’. Thus, a key goal of DPM is to find and retain technical

managers whose past intuitions led to success. But how do you define success, and how is that

measured?

Big brother can be a tough sell

Another potential DPM challenge is gaining buy-in from the developer teams that are being

measured. Defendants of DPM dismiss the inevitable developer pushback as largely a matter of

messaging and claim that it’s a natural evolution of a maturing software development management

process. They point to the great companies that initially resisted external measures of

performance—like Consumer Reports, Glassdoor, and Yelp—and later embraced them, failing to

acknowledge that they had no other option.

DPM is a choice. To be effective, it should be implemented with great care, considering your

company culture, objectives, and ability to execute (i.e,. the ability to translate insight into action).

Developers understand that their activities may be monitored (e.g., whether they are active on

Slack or not) and understand the need for measurement. The sensitivity stems from the purpose of

the measurement and the underlying problems management is trying to solve. When management

focuses on understanding the root cause of delivery speed variations, for example, DPM may be

welcome, but expect the developer antibodies to surface when metrics are used as input for

comparative performance evaluation.

DPM solutions may collide with other tools

The benefits that DPM targets often overlap with existing and established technologies, including

IDEs, collaboration and workflow tools, project management systems, and learning management

platforms. As a result, it’s up to you to decide where one system/process starts and another ends,

and deal with potentially multiple integration points to rationalize workflows and facilitate

reporting.

In contrast, DPE is a mostly accretive solution in terms of the kind of data it generates and the ways

in which it solves problems and augments workflows. Integrations with systems of record for

management reporting (e.g., Tableau) or CI integration (like Jenkins and TeamCity) are simple and

straightforward.

4.3. Advantages of Developer Productivity Engineering

DPE provides measures of outcomes

Perhaps the clearest distinction between DPM and DPE is that DPM provides mostly measures of

activity and DPE focuses more on measuring outcomes. Compare DPM metrics like hours worked,

SLOC, and bugs closed to DPE metrics such as build and test times for local and CI builds, failure

rates, and build comparison data that can be used to pinpoint the root cause of performance and

18

stability regressions.

DPE metrics cannot be gamed, are not arbitrary, and can be more easily marshalled to make logical

connections to higher-level performance and efficiency metrics. These metrics can, in turn, be

aligned unequivocally with C-level business objectives, like time-to-market, quality of services,

talent retention, and cost savings.

DPE delivers measurable ROI

If you Google "developer productivity measurement benefits," you will be hard-pressed to find a

straightforward explanation from industry commentators or DPM tool vendors on the benefits of

tools used to measure individual developer and team productivity. It may be that a direct answer to

the query is hard to find not because it does not exist, but because it’s obvious and assumed. That is,

more productive developers write higher quality code that ships faster and at a lower cost. It would

not be unreasonable to take this on faith, but to know if the return on your investment in DPM is a

lot or a little is difficult to say.

With DPE, measuring ROI for several key benefits is straightforward. For example, to calculate the

hard savings from achieving faster build and test feedback cycles using DPE acceleration

technologies like build caching and test distribution, the formula is simple: Multiply the average

time saved waiting for builds to complete by the number of builds per year to get total time savings

in engineering years, and then multiply that by the cost of a fully-loaded engineering year. For

many moderate-size development teams, this quickly translates into double-digit savings, measured

in engineering years, or millions of dollars if put into budgetary terms.

DPE improves the developer experience

While DPM tool vendors may claim that many features support a better developer experience (e.g.,

team assignments that play to their skill and competency strengths), the limitations of DPM

described above suggest DPM may do more to negatively impact the developer experience. As a

result, developers are not the driver for DPM adoption—in fact, they are more likely to be the

primary skeptics, while software engineering management is the primary user/practitioner.

Compare this scenario to DPE, in which the senior and lead developers are not only the drivers of

DPE initiatives, but are also the power users of the tools. Furthermore, DPE focuses primarily on

directly improving the developer experience by improving the tooling. The benefits they enjoy are

not soft, indirect, or unquantifiable. They often directly experience the benefits of faster build times

and more efficient troubleshooting a dozen times or more a day. As a result, it is not uncommon for

companies with established and dedicated DPE teams to use their DPE practice as a tool for

recruiting and retaining top engineering talent.

DPE has more robust success stories

It is difficult to find evidence of DPM successes beyond testimonial quotes. In contrast, you can find

hour-long webcasts presented by DPE leaders within some of the world’s leading business and

technology brands. They cover business drivers and objectives, solutions deployed, verifiable

benefits achieved, and next steps in their DPE journeys.

19

4.4. Limitations of Developer Productivity Engineering

DPE is relatively new and less well understood

Compared to DPM, DPE is a relatively new and emerging discipline that is socializing new concepts

and solutions—including local and remote build caching, test distribution, predictive test selection,

build scans, and build and test failure analytics. Some concepts get confused, which may create

some initial friction in their adoption, such as the functional difference between build caches and

dependency repositories. This is a normal part of the growing pains associated with establishing a

new software solution category.

DPE solutions do not yet support all developer environments

DPE solutions, like Gradle Inc.'s Gradle Enterprise, currently focus on the JVM and Android

ecosystems and may provide more limited support for other environments. It is expected that DPE

solutions will expand their footprint over time to fully support all popular developer environments.

While some DPM tool metrics are toolchain/technology specific, some apply more broadly across

ecosystems.

4.5. Conclusion

Key Characteristics DPM DPE

First level focus is on

measuring…

People Process & Technology

Second level focus is on

measuring…

Activity Outcomes

SDLC lifecycle focus is on… All phases Build & Test & CI

ROI is primarily Soft Hard

DPM and DPE are complementary, not competing approaches to improving developer productivity.

However, they can compete for the lion’s share of both your mind and your wallet. If you’re living

in a JVM ecosystem, start with investing in the fastest car: Developer Productivity Engineering.

20

5. DPE Solutions Overview

The diagram below outlines the key pain points and benefits addressed by DPE and aligns them

with the key available solution technologies and tools designed to deliver these benefits. Part 2 of

this book describes in some level of detail the key concepts needed to understand why these

solution capabilities are important, how they work, and what you can expect in terms of business

impact.

Figure 2. A Developer Productivity Engineering solution framework that aligns pain points, benefits and key

solution technologies

21

Part 2 - FEEDBACK CYCLES AND

ACCELERATION

The Essential Role of Faster Feedback Cycles

Faster Builds by Doing Less with Build Caching

Test Distribution: Faster Builds by Distributing the Work

Performance Profiling and Analytics

22

6. The Essential Role of Faster Feedback

Cycles

By Hans Dockter

A central tenet of Developer Productivity Engineering is that feedback cycles across the software

development and delivery lifecycle should be minimized. It’s not possible to truly buy into the

practice of DPE without having a full appreciation of the role feedback cycles play in optimizing

developer productivity. One reason is the positive effects it has on engineering behaviors and

productivity at the individual and team level in ways that might not be immediately apparent.

This diagram shows the complex relationship between the effects of faster build and test feedback

cycles on software developer behavior and productivity and the quality benefits of those effects.

Figure 3. The anatomy of the effects and benefits of faster feedback cycles

6.1. Faster builds improve the creative flow

Previously the impact from faster feedback cycles on creative flow was discussed. Consider a

customer example comparing feedback cycles from two teams.

Team 1 Team 2

Number of developers 11 6

Build time (with tests) 4 minutes 1 minute

Number of local builds 850 1010

Number of builds per developer 77 180

23

The developers on Team 2 ask for feedback twice as often as the developers on Team 1. This

correlation can be observed between build times and the number of builds consistently across

organizations, even when the build time is only a few minutes. The most likely reason for this is

that their builds and tests are faster. Such a correlation is not surprising when build times are

painfully long. Interestingly, even in environments where builds and tests are not perceived to be

slow, the data shows that the number of builds is inversely-proportional to the duration.

The underlying cause of this behavior is that our brains function much better when disruptions to

the creative flow are minimized. Endorphins help us enjoy our work during flow states. But, when

developers have to ask the toolchain for feedback, which ideally is frequently, it has to be fast or

they will not stay in the flow. With faster builds and tests developers can find a more productive

balance between the conflicting forces represented by the need to interrupt oneself to get feedback

and the need to preserve one’s creative flow.

6.2. Developer time is wasted waiting for builds and

tests to finish

There is another obvious reason why developers on Team 2 are more productive. A lot of the time

waiting for feedback is actually just that, waiting time. When builds and tests run in less than 10

minutes, context switching is often not worth the effort and a lot of the build and test time is pure

downtime.

The aggregated cost of wait time is surprisingly high even for very fast builds and even moderate

improvements are often worthwhile. The table below shows that, although the team with a one-

minute build time is doing very well by industry-standards, reducing the build time further by 40%

(down to 36 seconds) saves 44 developer days per year. For a team of six engineers, this is a

significant productivity improvement.

Team Number of

developers

Local builds

per week

Build Time Build Time

with DPE

Savings per

year

Team 2 6 1010 1 minute 36 seconds 44 days

On a larger team, this scales exponentially as a team of 100 developers nearly cuts their build time

in half for a savings of 5,200 developer days saved. Assuming 220 working days a year per

developer, 5,200 developer days is roughly 25% of all engineering time spent. Significant

performance improvements like this are game-changers for productivity.

Team Number of

developers

Local builds

per week

Build Time Build Time

with DPE

Savings per

year

Team 3 100 12000 9 minutes 5 minutes 5200 days

6.3. Longer builds mean more context switching

As build time increases, more and more developers switch to do different tasks while the build is

running. If the build is the final build to get a feature merged and the build is successful, this is

usually not much of a problem (although longer builds are more likely to lead to merge conflicts).

24

But if the build fails or was necessary to provide intermediate feedback, developers pay the cost of

context switching twice—once when going back to the previous task and once when continuing

with the new one. This often costs developers 10-20 minutes per switch and the reason why most

prefer to wait for builds that take less than10 minutes to run.

6.4. The effect of failed builds on build time and

context switching

On average 20% of all build and test runs fail. This is a healthy number as it is the job of the build to

detect problems with the code. But this number significantly affects the effective average build time

and the context switching frequency.

average time for a successful build = average build time + (failure frequency * average build

time) + average debugging time

context switching frequency = failure frequency * number of builds * 2

An unreliable toolchain, for example, one with a lot of flaky tests, can both dramatically increase

the average wait time as well as the context switching frequency.

6.5. Longer builds are harder to troubleshoot

The longer a build takes, the bigger the changesets will become. This is because for most

organizations the build time is roughly the same regardless of the size of the changeset. This build

time is a fixed tax paid to get any change through the software development lifecycle. If this fixed

tax per build run is high, the logical way to minimize this tax is to not run builds as often. The

amount of coding done between the build runs will increase.

Unfortunately, the tax is not fixed if the build fails. The more changes you have, the longer on

average triaging takes. This increased debugging cost caused by larger individual changesets can be

significant because it has to be paid for failing local builds, failing pull request builds, and failing

integration branch builds.

Depending on how a CI process is organized, additional effects may come into play for the

integration branch CI builds. The fewer integration branch CI builds per day that can be run due to

their duration, the fewer merge points there will be. Thus, the average number of changes included

in that CI build is higher. This may significantly increase debugging time for any failed CI build as

this will potentially involve a lot of unrelated changes.

The most extreme cases we have seen are large repositories that take an entire day to build. If this

build fails, it is possible that any one of the hundred commits could be the culprit and one should

expect triaging to be extremely complicated and time-consuming. But even with much shorter build

times, this effect may be experienced. In general, there is a non-linear relationship between the

number of changes and debugging time.

With a build cache and modularization your build times will be on average much faster for smaller

changes compared to larger ones, thus incentivizing a development workflow where small changes

are pushed frequently and quickly to production.

25

6.6. Time to troubleshoot grows exponentially with

problem detection time

Fixing problems later takes disproportionately more time than fixing them earlier.

Figure 4. Fix time grows exponentially over detection time

There are multiple reasons for this including the previously mentioned non-linear relationship

between the size of the changeset and the time required to fix the problem. Context switching also

becomes more expensive.

There is a similar effect in a multi-repository environment where the consumer team is responsible

for detecting if a consumer contract has been broken and organizing fixes as needed with the

producer team. The more dependencies that have changed during a build run, the exponentially

harder it becomes to figure out which dependencies and teams are responsible.

6.7. Longer builds lead to more merge conflicts

Both bigger changesets and more merged pull requests per integration branch CI build increase the

surface area of the changes and thus the likelihood of merge conflicts. Another effect is at play here.

Bigger changesets also increase the debugging time and thus the average time of a successful CI

integration branch build. This again reduces the frequency of merges and for the reasons discussed,

increases the likelihood of merge conflicts. This is a vicious circle. Merge conflicts are one of the

most frustrating and time-consuming issues developers must confront.

26

6.8. The effect of build speed on large versus small

projects

Smaller projects will benefit from faster builds because of less wait time and improved creative

flow. They will also be affected negatively by larger change sets as their build time increases.

Smaller projects usually don’t suffer from overlapping merges as much as larger projects, as those

are less likely to occur with a smaller number of developers.

Many larger teams break up their codebase into many different source repositories for that reason.

But this introduces other challenges. The producer of a library, for example, is no longer aware of

whether they have broken a consumer after running their build and tests. The consumer will be

impacted and has to deal with the problem when this occurs. The triaging of that is often difficult

and very time-consuming.

6.9. Many companies are moving in the wrong

direction

As build times grow there is increasing friction between the process of getting feedback early and

reducing the wait time before changes can be pushed.

Figure 5. Delaying feedback exponentially increases fix time over detection time

This is a regrettable situation and most organizations do not yet have or are not even aware of the

tools and practices that fundamentally solve this problem. Instead they go down the path of shifting

the feedback later in the development cycle, or 'to the right'. This results in weakening the local

quality gate by running fewer tests and the pull request build may be the first to run the unit tests.

This is the opposite of what a healthy CI/CD pipeline process looks like where feedback is provided

as early and as conveniently as possible. Shifting feedback to the right will only prolong the

27

inevitable and all the compounding negative effects discussed previously will land even more

forcefully because they will occur closer to production.

The only solution to this problem is to make your build faster.

6.10. A final word of caution: The problem grows with

your success

The problems described here will grow as developer teams expand, code bases bloom and

repositories increase in number. Exacerbating this is the problem of unhappy developers. Most

developers want to be productive and work at their full potential. If they don’t see an effort to turn

an unproductive environment into a more productive one, the best ones will look for other

opportunities.

The practice of Developer Productivity Engineering will put you in a position

to improve or at least maintain your current productivity and truly reap the

benefits of CI/CD.

STORIES FROM THE TRENCHES: IT’S A DOG EAT DOG WORLD

There was a fireside chat a couple of years ago with an executive of a Wall Street bank and an

engineering leader from a Bay Area software company.The executive lamented: “If I only had

the quality of your software engineers.” The Bay Area company was very developer-

productivity-focused and their engineering leader replied: "Guess where we got our

developers from? From organizations like yours."

6.11. Conclusion

Faster feedback cycles encourage and reinforce good habits and developer best practices.

Hopefully, this chapter has untangled the cause-and-effect relationship with other indirect benefits

you may have not considered.

There is one other “soft” benefit that may yet be the most important: the impact on developer

happiness. Fast feedback cycles mean developers waste less time waiting for builds and tests to

complete and performing tasks they enjoy less like troubleshooting problems. The more time

reserved for pursuing their passion—being creative and writing great code—the happier they will

be.

And, a good way to discourage developers from engaging in negative behaviors is to minimize

avoidable frustrations. Conversely, the best way to encourage positive behaviors is to make it easier

to always do the right thing by investing in processes and tools that support a great developer

experience. A good place to start is those that speed up feedback cycles.

28

7. Faster Builds by Doing Less with Build

Caching

By Hans Dockter

The concept of build caching is relatively new to the Java world. It was introduced by Gradle in

2017. Google and Facebook have been using it internally for many years. A build cache is very

different and complementary to the concept of dependency caching and binary repositories.

Whereas a dependency cache is for caching binaries that represent dependencies from one source

repository to another, a build cache caches build actions, like Gradle tasks or Maven goals. A build

cache makes building a single source repository faster.

A build cache is most effective when you have a multi-module build. Maven and Gradle multi-

module builds are configured a bit differently. Here are some example build declarations for each:

Section in parent pom.xml

<modules>

Ê <module>core</module>

Ê <module>service</module>

Ê <module>webapp</module>

Ê <module>export-api</module>

</modules>

Section in settings.gradle

include "core"

include "service"

include "webapp"

include "export-api"

For many reasons, multi-module builds are good practice, even for smaller projects. They introduce

separation of concerns and a more decoupled codebase with better maintainability as it prevents

cyclic dependencies. Additionally, modular applications will have an easier time adapting to future

development paradigms. For example, highly modular API-driven applications are easier to

deconstruct into microservices than ones without that level of modularity. Most Maven or Gradle

builds are multi-module builds. Once you start using a build cache, increased modularization will

enable even faster build and test runs by increasing the overall cacheability of the project.

When you run a build for such a multi-module project, actions like compile, test, javadoc, and

checkstyle are executed for each module. In the example above, there are four

src/main/java and

src/main/test directories that need to be compiled. The associated unit tests will be run for all four

modules. The same is true for javadoc, checkstyle, and so on.

With Maven, the only way to build reliably is to always clean the output from the previous run. This

means that even for the smallest change it is necessary to rebuild and re-test every module from

scratch:

29

Figure 6. Rebuilding everything from scratch (arrows are dependencies between actions)

Gradle is an incremental build system. It does not require cleaning the output of previous runs and

can incrementally rebuild the output depending on what has changed. For more details see:

Gradle

vs Maven: Performance Comparison

. But in cases where one switches between code branches, pulls

new changes or performs clean CI builds it will still be necessary to build everything from scratch

even when building with Gradle.

A build cache can improve this scenario. Let’s say a project has been built once. After that, a line of

code is changed in the

export-api module from the example above. Next, an argument is added to a

public method and it is assumed that no other module has a dependency on

export-api. With such a

change and the build-cache in place, only 20% of the actions need to be run.

30

Figure 7. Changing a public method in the export-api module

How does this work? All 20 build actions have inputs and outputs. For example, the compile action

have the sources and the compile classpath as an input as well as the compiler version. The output

is a directory with compiled

.class files. The test action has the test sources and the test runtime

classpath as an input, and possibly other files. The outputs are the

test-results.xml files.

The build cache logic hashes all inputs for a particular action and then calculates a key that

uniquely represents those inputs. It then looks in the cache to see if there is an entry for this key. If

one is found, the entry is copied into the Maven or Gradle build output directory of this module and

the action is not executed. The state of the build output directory will be the same as if the action

had been executed. Copying the output from the cache is much faster than executing the action. If

an entry for a key is not found in the cache, the action will be executed and its output will be copied

into the cache associated with the key.

In our example, four actions belonging to the

export-api module had a new key and needed to be

executed. That’s because one of its inputs, the compile action of the production code (the source

directory) has changed. The same is true for checkstyle. The compile action for the tests has a new

key because its compile classpath changed. The compile classpath changed because the production

code of

export-api was changed and is part of the compile classpath for the tests. The test action has

a new key because its runtime classpath has changed for the same reasons.

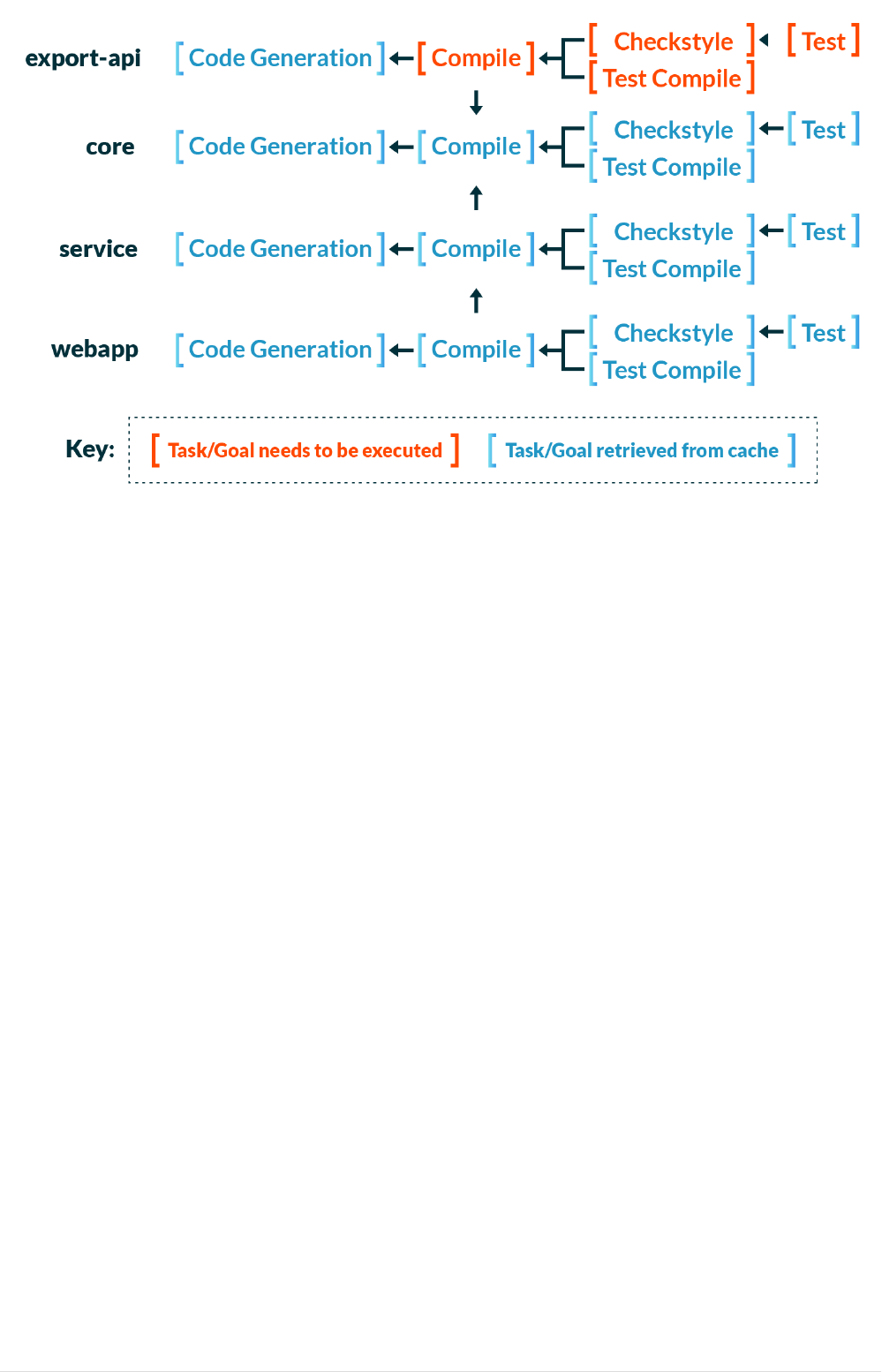

As another example, let’s say a method parameter needs to be added to a public method of the

service module. webapp has a dependency on service.

31

Figure 8. Changing a public method in the service module

Now, not only do the actions for the module that have changed need to be re-executed, but the

actions for the dependent module will need to re-execute as well. This can be detected via the

action inputs. Because the

service module code changed, the classpath of the webapp compile and

test compile action has changed, as well as the runtime classpath for its test action. As a result, all

these actions need to be executed. Yet, compared to rebuilding everything, only 40% of the actions

need to be executed.

Now let’s use the same example as before, but instead of adding an argument to a public method in

the

service module, only a change to the method body will be made. A smart build cache can now

do further optimizations:

32

Figure 9. Changing an implementation detail of a method in the service module

The cache key calculated from the compile classpath only takes the public API of the classpath items

into account. An implementation change does not affect that key, reflecting the fact that any such

change has no relevance to the Java compiler. As a result, the execution of three compile actions

can be avoided. For the runtime classpath of the test actions, implementation changes in your

dependencies are obviously relevant and lead to a new key, which in turn, results in executing the

test actions for

service and webapp. With this optimization, just 20% of the build actions need to be

executed.

Let’s look at another example where every other module depends on the

core module and a change

is made to the implementation of a method in the

core module.

33

Figure 10. Changing an implementation detail of a method in the core module

This is a very invasive change but even in this instance only 30% of the actions need to be executed.

Even given that executing the test actions will probably consume more time than the other actions,

it still saves a lot in time and compute resources. The worst-case change would be adding an

argument to a public method in the

core module.

Figure 11. Changing a public method in the core module

Even here one still gets significant savings from the cache as only 65% of all the actions need to be

executed.

34

7.1. Local vs remote build cache