Citation: Dzedzickis, A.; Subačiūtė-Žemaitienė, J.; Šutinys, E.; Samukaitė-Bubnienė, U.; Bučinskas, V. Advanced Applications of Industrial Robotics: New Trends and Possibilities. Appl. Sci. 2022, 12, 135. https://doi.org/10.3390/app12010135

Academic Editor: Luis Gracia

Received: 9 November 2021

Accepted: 19 December 2021

Published: 23 December 2021

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Copyright: © 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Applied Sciences

Review

Advanced Applications of Industrial Robotics: New Trends and Possibilities

Andrius Dzedzickis *, Jurga Subačiūtė-Žemaitienė, Ernestas Šutinys, Urtė Samukaitė-Bubnienė * and Vytautas Bučinskas

Department of Mechatronics, Robotics, and Digital Manufacturing, Vilnius Gediminas Technical University,

J. Basanaviciaus Str. 28, LT-03224 Vilnius, Lithuania; [email protected] (J.S.-Ž.);

[email protected] (E.Š.); [email protected] (V.B.)

* Correspondence: [email protected] (A.D.); [email protected] (U.S.-B.)

Abstract:

This review is dedicated to the advanced applications of robotic technologies in the industrial field. Robotic solutions in areas with non-intensive applications are presented, and their implementations are analysed. We also provide an overview of survey publications and technical reports, classified by application criteria and by the structure of existing solutions, and identify recent research gaps. The analysis results reveal the background to the existing obstacles and problems. These issues relate to the areas of psychology, human nature, special artificial intelligence (AI) implementation, and the robot-oriented object design paradigm. Analysis of robot applications shows that emerging applications in robotics face technical and psychological obstacles. The results of this review revealed four directions of required advancement in robotics: development of intelligent companions; improved implementation of AI-based solutions; robot-oriented design of objects; and psychological solutions for robot–human collaboration.

Keywords: industrial robots; collaborative robots; machine learning in robotics; computer vision

1. Introduction

The industrial robotics sector is one of the fastest-growing industrial divisions, providing standardised technologies suitable for various automation processes. The ISO 8373:2012 standard [1] defines an industrial robot as an automatically controlled, reprogrammable, multipurpose manipulator, programmable in three or more axes, which can be stationary or mobile for use in industrial automation applications. However, the same standard leaves room for wider implementation: it states that a robot is classified as industrial, service, or another type according to its intended application.

According to the International Federation of Robotics (IFR) [2], 373,000 industrial robots were sold globally in 2019. In 2020, the total number of industrial robots operating in factories globally reached 2.7 million. The successful application of industrial robots, their reliability and availability, and the active implementation of the Industry 4.0 concept have stimulated growing interest in robot optimisation and in research on new implementations in various areas, especially in non-manufacturing and non-typical applications.

According to one of the biggest scientific databases, ScienceDirect [

3

], more than 4500 scien-

tific papers were published in 2019 using the term “Industrial robot” as a keyword and,

in 2020, the number of papers with a similar interest and research direction increased to

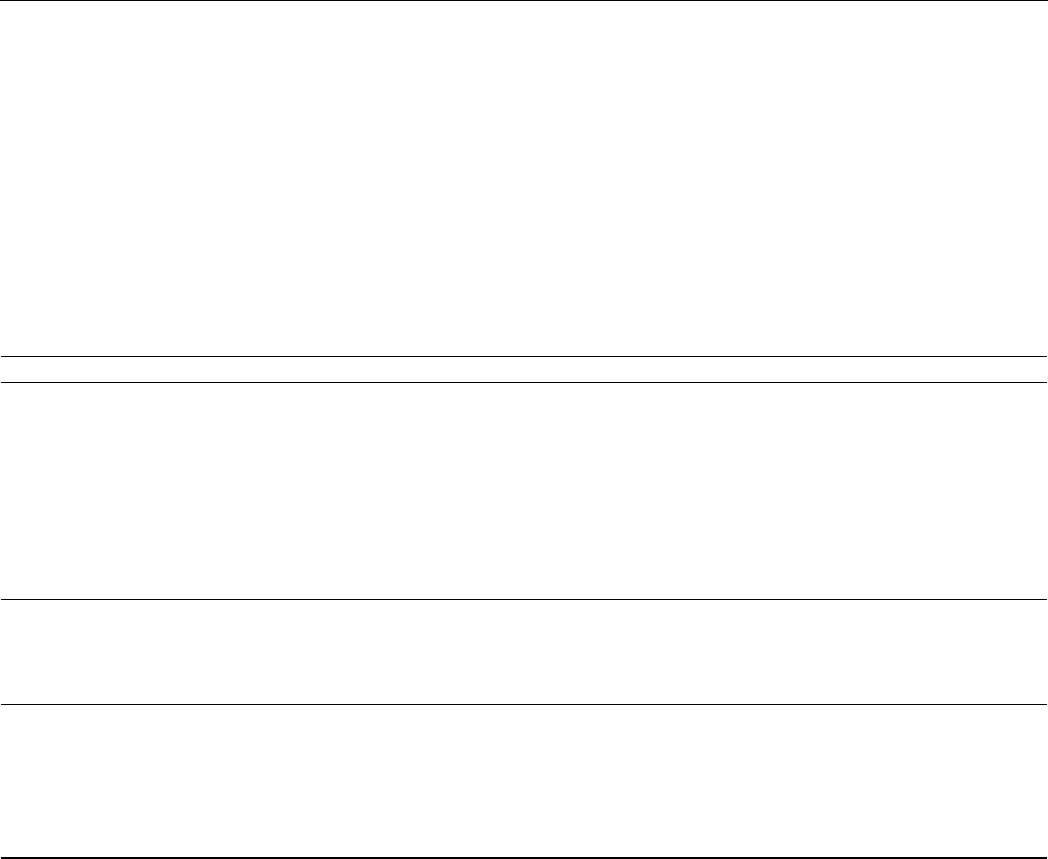

5300. Figure 1 shows the annual ratio of new robot installations vs. the number of scientific

publications in the ScienceDirect database. Scientific interest in this field is based on a

steady increase in the number of publications, independent of the political, economic, and

social factors affecting the market for new robots.

Appl. Sci. 2022, 12, 135. https://doi.org/10.3390/app12010135 https://www.mdpi.com/journal/applsci


Figure 1. The annual ratio of publications to newly installed industrial robots (2012–2021; series: publications and installations, thousands of units).

This review assesses recent development trends in robotics and identifies some of the most relevant ethical, technological, and scientific uncertainties limiting wider implementation. The literature review focuses mainly on 2018–2021 applications of industrial robots in fields in which the adoption of robotisation has traditionally been weak (i.e., medical applications, the food industry, agricultural applications, and the civil engineering industry). It also covers fundamental issues such as human–machine interaction, object recognition, path planning, and optimisation.

For this review, the main keywords (such as industrial robots, collaborative robots, and robotics) were used to survey papers published over a four-year period. Because this is a widely researched and dynamic area, the review focused on a relatively short time period and on the most recent sources to ensure the novelty of the analysis.

For this search request, Google Scholar returned 79,500 results, from which 115 publications were selected according to the direction of the literature review and the indicated criteria (application area, novelty and significance of achievements, and reliability and feasibility of results).

Despite the ever-growing role of automation in daily life and society’s familiarity with smart devices, non-typical applications of robotics are still often viewed with considerable scepticism. The most common myth about robots is that they will occupy human workplaces, leaving human workers without a source of livelihood. Nevertheless, the research provided in [4], which aimed to evaluate the public outcry about robots taking over jobs in the electronics and textile industries in Japan, showed that this view is incorrect. By evaluating robot use based on the number of robots and their real implementation price, the study determined that implementing robots positively affects productivity, which benefits the most vulnerable workers in society, i.e., women, part-time workers, high-school graduates, and older people.

Technological and scientific uncertainties also require a special approach. Each robotisation task is unique. Such tasks often require individual tools, a corresponding working environment, additional sensors or measurement systems, and complex control algorithms that expand the functionality or improve the characteristics of standard robots. In most applications, industrial robots form larger units, such as robotic cells or automated/autonomous manufacturing lines. As a result, the robotisation of even a relatively simple task becomes a complex solution requiring a systemic approach.

Moreover, implementing an industrial robot remains complicated by its interdisciplinary nature: proper organisation of the work cycle is the subject of manufacturing management sciences; the design of grippers and related equipment lies within the field of mechanical engineering; and the integration of all devices into a unified system, sensor data analysis, and whole-system control are the subjects of mechatronics.

This review focuses on the hardware and software methods used to implement industrial robots in various applications. The aim was to systematically classify the newest achievements in industrial robotics according to application fields without strong robotisation traditions. The analysis was also undertaken from a multidisciplinary perspective and considers the implementation of computer vision and machine learning in robotic applications.

2. Main Robotisation Strategies

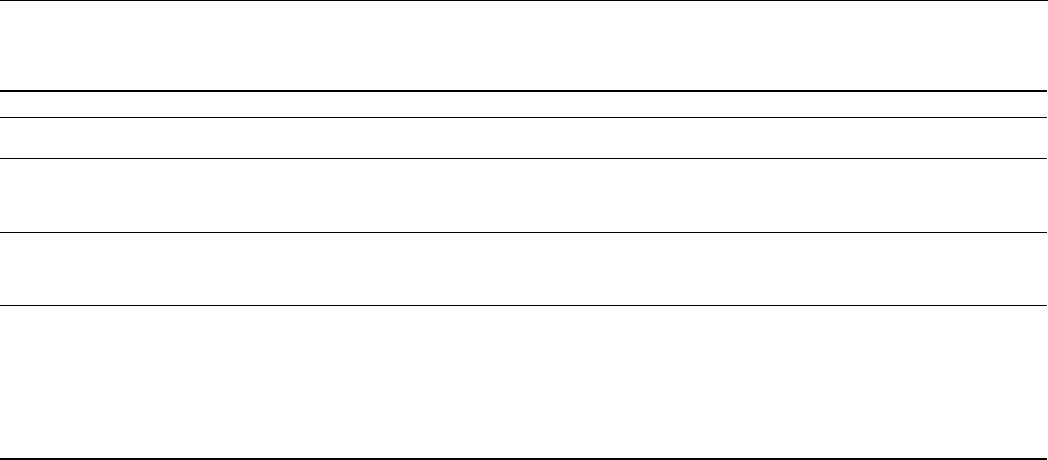

According to the type of human–robot cooperation, a review of the most recent trends in industrial robotics applications indicates two main robotisation strategies: classical and modern. In industrial robotics, five typical levels of human–robot cooperation are defined (Figure 2): (i) no collaboration; (ii) coexistence; (iii) synchronisation; (iv) cooperation; and (v) collaboration [5].


Figure 2. Human–robot cooperation levels [5]: (a) no collaboration, the robot remains inside a closed work cell; (b) coexistence, removed cells, but separate workspaces; (c) synchronisation, sharing of the workspace, but never at the same time; (d) cooperation, shared task and workspace, no physical interaction; (e) collaboration, operators and robots exchange forces.

The classical strategy encompasses the first cooperation level (Figure 2a). It is based on the approach that humans must be kept out of the robot’s workplace by closed robot cells in which human activity is not permitted; if a human must enter the robot’s workspace, the robot must be stopped. This approach uses various safety systems to detect and prevent human access to the robot’s workspace. The modern strategy includes the remaining four cooperation levels (Figure 2b–e). It is based on the opposite approach, which states that robots and humans can work in one workplace and collaborate. Such an approach creates additional requirements for the robot’s design, control, and sensing systems. Robots adapted to operate in conjunction with human workers are usually defined as collaborative robots, or cobots.
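To make the mapping between the cooperation levels of Figure 2 and the two robotisation strategies explicit, the levels can be encoded as an ordered enumeration. The following is a minimal Python sketch under that assumption; the names `CooperationLevel`, `strategy`, `shares_workspace`, `simultaneous_presence`, and `physical_interaction` are hypothetical, the predicates only restate the Figure 2 caption, and none of them belongs to a robot controller API.

```python
from enum import IntEnum


class CooperationLevel(IntEnum):
    """Human-robot cooperation levels, ordered as panels (a)-(e) of Figure 2."""
    NO_COLLABORATION = 1  # (a) robot stays inside a closed work cell
    COEXISTENCE = 2       # (b) cells removed, but separate workspaces
    SYNCHRONISATION = 3   # (c) shared workspace, never at the same time
    COOPERATION = 4       # (d) shared task and workspace, no physical interaction
    COLLABORATION = 5     # (e) operator and robot exchange forces


def strategy(level: CooperationLevel) -> str:
    """Classical strategy covers level (a) only; the modern strategy covers (b)-(e)."""
    return "classical" if level == CooperationLevel.NO_COLLABORATION else "modern"


def shares_workspace(level: CooperationLevel) -> bool:
    """The workspace is shared from synchronisation upwards."""
    return level >= CooperationLevel.SYNCHRONISATION


def simultaneous_presence(level: CooperationLevel) -> bool:
    """Human and robot act in the shared space at the same time from cooperation upwards."""
    return level >= CooperationLevel.COOPERATION


def physical_interaction(level: CooperationLevel) -> bool:
    """Only collaboration involves an exchange of forces."""
    return level == CooperationLevel.COLLABORATION


if __name__ == "__main__":
    for level in CooperationLevel:
        print(f"{level.name:<16} {strategy(level):<9} "
              f"shared={shares_workspace(level)} "
              f"simultaneous={simultaneous_presence(level)} "
              f"contact={physical_interaction(level)}")
```

Because the levels are ordered, each requirement (shared space, simultaneity, force exchange) switches on at one level and stays on for all higher ones, which mirrors how the modern strategy adds design, control, and sensing requirements step by step.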

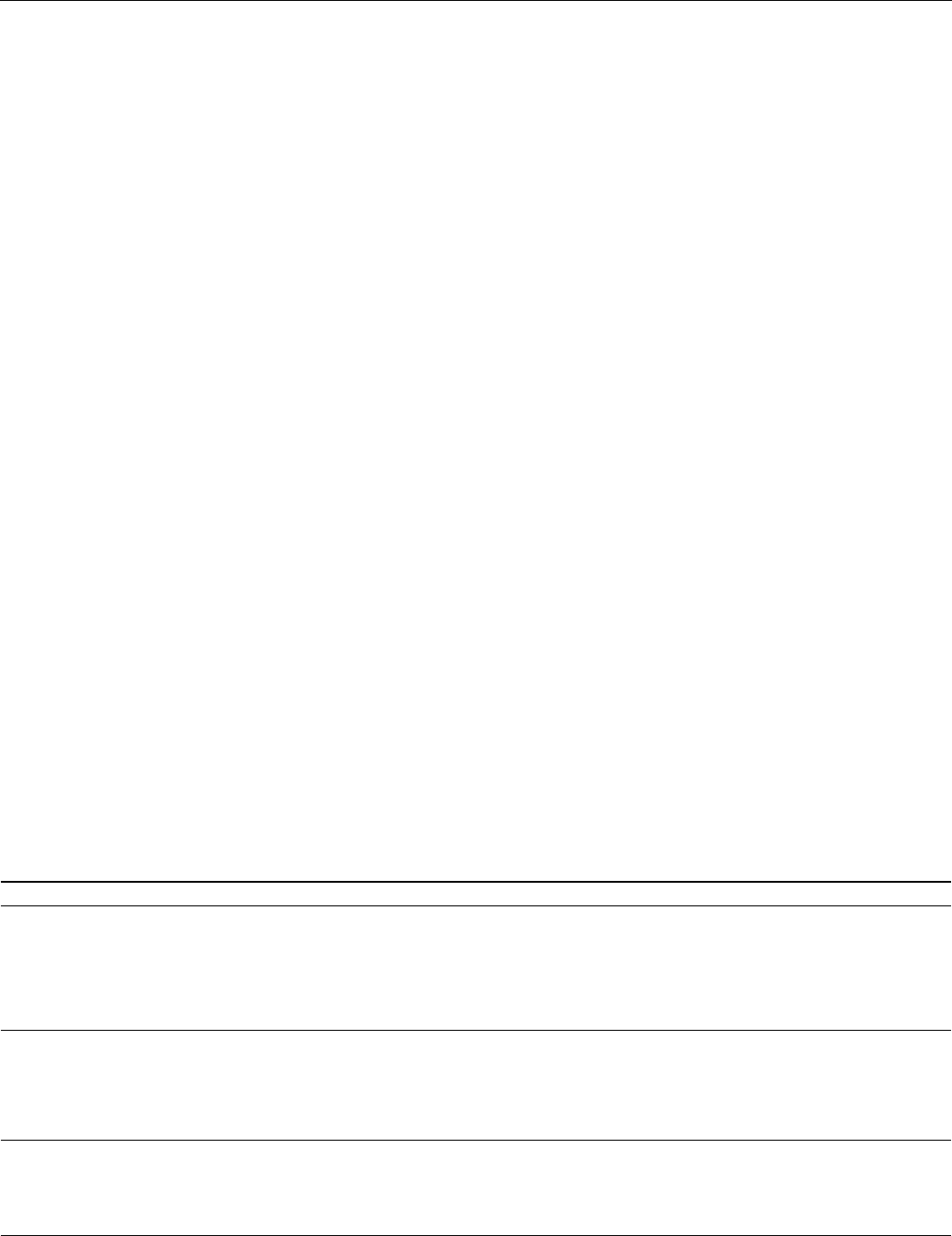

2.1. Classical Robotisation Strategy

Since George Devol applied for the patent for the first industrial robot in 1954, the classical robotisation strategy has held that robots should replace human workers in routine tasks and unhealthy workplaces. This strategy suggests that humans should be removed from the robot’s workspace (Figure 3a). Direct cooperation between the robot and humans is forbidden due to the potential danger to human health and safety. This approach was later expanded to encompass accuracy, reliability, productivity, and economic factors.


Figure 3. Comparison of the operating environment: (a) industrial robots (adapted from [6]); (b) collaborative robots (adapted from [7]).

Research provided in [8] analyses the possibilities of implementing service robots in hotels from social, economic, and technical perspectives. The authors set the evaluation of hotel managers’ perceptions of the advantages and disadvantages of service robots, compared with human workers, as the primary goal of their research, whereas determining tasks suitable for robotisation was of secondary importance. This approach supports the assumption that the implementation of robotics in non-traditional applications is often limited not by technological issues, but by company managers’ attitudes. Based on questionnaires completed by 79 hotel managers, the authors concluded that robots have an advantage over human employees due to better data processing capabilities, work speed, protection of personal data, and fewer mistakes. The main disadvantages of robots were listed as: inability to provide personalised service; inability to handle complaints; lack of friendliness and politeness; inability to fulfil special requests that go beyond their programming; and lack of understanding of emotions.

Despite the common doubts, implementing automation and robotic solutions has a positive impact in many cases. The study provided in [9] analysed the general impact of robot implementation in workplaces for packing furniture parts. The analysis focused on the ergonomic perspective and found that implementing robotics eliminates the risk of work-related musculoskeletal disorders. A similar study [8] analysed the design, engineering, and testing of adaptive automation assembly systems that increase automation levels and complement human workers’ skills and capabilities in assembling industrial refrigerators. This study showed that the productivity of the automated assembly process could be increased by more than 79%. Implementing an industrial robot instead of partial automation would likely result in an even greater productivity increase. Research comparing human capabilities with automated systems is also described in [10]. The authors compared the capabilities of humans and automated vision recognition systems to recognise and evaluate forest or mountain trails from a single monocular image acquired from the viewpoint of a robot travelling on the trail. The results showed that a deep neural network-based system trained on a large dataset performs better than humans.

Neural network-based algorithms can also be used to control industrial robots to compensate for imperfections in their mechanical systems, which typically behave as non-linear dynamic systems due to a large number of uncertainties. The research presented in [11,12] provides neural network-based methods for advanced control of robot movements. In [11], a promising non-linear model-based predictive control method for robotic manipulators is proposed that minimises the settling time and position overshoot of each joint.
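The receding-horizon principle behind model-based predictive control of a joint can be illustrated with a toy example. The Python sketch below is not the method of [11]: it assumes a single revolute joint with an analytic pendulum-like model (the inertia, friction, gravity, and torque-limit values are hypothetical) in place of a neural network model, and it replaces a proper non-linear optimiser with brute-force search over constant torques held across the horizon. At each control step, it predicts the joint trajectory for every candidate torque, penalises position error and velocity, applies only the first step of the best plan, and then repeats.

```python
import math

# Hypothetical single-joint parameters (illustrative only, not taken from [11])
INERTIA = 0.5    # kg*m^2, link inertia about the joint axis
DAMPING = 0.5    # N*m*s/rad, viscous friction
GRAVITY = 2.0    # N*m, gravity torque amplitude (m*g*l)
DT = 0.01        # s, integration and control period
HORIZON = 25     # prediction steps (0.25 s look-ahead)
U_MAX = 5.0      # N*m, actuator torque limit
# Candidate torques from -U_MAX to +U_MAX in 0.1 N*m increments
CANDIDATES = [U_MAX * (k / 50.0 - 1.0) for k in range(101)]


def step(q, qd, u):
    """One explicit-Euler step of the non-linear joint model."""
    qdd = (u - DAMPING * qd - GRAVITY * math.sin(q)) / INERTIA
    return q + DT * qd, qd + DT * qdd


def predicted_cost(q, qd, u, target):
    """Predicted cost of holding torque u over the horizon:
    squared position error plus a small velocity penalty for damping."""
    cost = 0.0
    for _ in range(HORIZON):
        q, qd = step(q, qd, u)
        cost += (q - target) ** 2 + 0.01 * qd ** 2
    return cost


def mpc_control(q, qd, target):
    """Receding horizon: pick the best constant-torque plan, apply only its first step."""
    return min(CANDIDATES, key=lambda u: predicted_cost(q, qd, u, target))


def simulate(target=1.0, duration=4.0):
    """Closed-loop step response; the simulated plant is the prediction model itself."""
    q, qd = 0.0, 0.0
    trajectory = [q]
    for _ in range(round(duration / DT)):
        u = mpc_control(q, qd, target)
        q, qd = step(q, qd, u)
        trajectory.append(q)
    return trajectory


if __name__ == "__main__":
    traj = simulate()
    print(f"final angle {traj[-1]:.3f} rad, peak {max(traj):.3f} rad")
```

In a real controller, the analytic model would be replaced by an identified (e.g., neural network) model, the plant would differ from the prediction, and the search over constant torques would give way to a proper optimiser over a full torque sequence.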

The classical strategy is well suited to the robotisation of mass production processes in various fields. Its main advantages are clear requirements for work process organisation, robotic cell design, and installation; the availability of a large variety of standard equipment and typical partial solutions; and higher productivity and reliability compared with human workers performing the same tasks. The main disadvantages are insufficient flexibility, unsuitability for unique production, and the high cost of adapting an existing robotic cell to a new product or process. Applying a modern robotisation strategy can avoid some of these disadvantages (or at least minimise their impact).

2.2. Modern Robotisation Strategy

The modern robotisation strategy is based on implementing collaborative robots (cobots). According to [13], the term cobot was first used in a 1999 US patent [14] for “an apparatus and method for direct physical integration between a person and a general-purpose manipulator controlled by a computer.” It was the result of General Motors’ efforts to implement robotics in the automotive sector to help humans in assembly operations. The first lightweight cobot, LBR3, designed by a German robotics company, was introduced in 2004 [13]. This led to the broader development of the modern robotisation strategy and to new manufacturers entering the market. In 2008, the Danish manufacturer Universal Robots released the UR5, a cobot that could safely operate alongside employees, eliminating the need for safety caging or fencing (Figure 3b). This launched a new era of flexible, user-friendly, and cost-efficient collaborative robots [13] and resulted in the current situation, in which all major robot manufacturers have at least a few cobot models in their product range.

The fourth industrial revolution (Industry 4.0) significantly fostered the development of cobot technologies, because the cobot concept fits well with Industry 4.0: it allows human–robot collaboration to be realised and is suitable for flexible manufacturing systems. In contrast to typical industrial robots, next-generation robotics uses artificial intelligence (AI) to perform tasks collaboratively and is suitable for uncontrolled/unpredictable environments [15]. Moreover, owing to favourable conditions (advances in AI, sensing technologies, and computer vision), collaborative industrial robots have become significantly smarter, showing the potential for reliable and secure cooperation and increasing the productivity and efficiency of the involved processes [15]. However, it should be noted that Industry 4.0 not only fostered the widespread adoption of robotics, but also posed new challenges. In highly automated systems, most of the equipment is connected through the Internet of Things (IoT) or other communication technologies. Therefore, the cybersecurity and privacy protection of the data used to monitor and control processes [16,17] must be considered.

The issue of data protection is also becoming more critical due to the latest communication technologies, such as 5G and 6G [18]. These technologies allow the development of standardised wireless communication networks for various control levels (single cell, production line, factory, network of factories) but, at the same time, make systems more sensitive to external influences. The main impact of Industry 4.0 and new communication technologies on industrial robots is that their controllers have an increasing number of connections, functions, and protocols for communicating with other “smart” devices.

The study presented in [19] analyses the possibilities of human–robot collaboration in aircraft assembly operations. The benefits of human–robot cooperation were examined in terms of productivity increase and the satisfaction level of human workers. The results showed that humans and robots could work simultaneously and safely in a common area without any physical separation, significantly reducing time and costs compared with manual operations. Moreover, an assessment of employee opinions showed that most employees evaluated the implementation of collaborative robots positively. Nevertheless, employee attitudes depend on practical experience: experts felt more confident than beginners. This can be explained by the fact that experts better understand the overall manufacturing process and are more accustomed to operating various equipment.

Compared with traditional industrial robots, cobots have more user-friendly control features and wider teaching options. A new assembly strategy was described in [20], in which a cobot learnt skills from manual teaching to perform automatic peg-in-hole assembly when the geometric profile and material elastic parameters of the parts were inaccurate. The results showed that the manual assembly process could be analysed mathematically by splitting it into a few stages and implemented as a model in robot control. Using an Elite EC75 manipulator (Elite Robot, Suzhou, CN), an assembly time of less than 20 s was achieved, with a 100% success rate over 30 attempts, when the relative error between the peg and the hole was ±4.5 mm and the clearance between the peg and the hole was 0.18 mm.
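A 100% success rate over 30 attempts is a finite sample, so it can help to translate it into a bound on the underlying success probability. The Python sketch below is an illustrative calculation, not part of [20]: it assumes that the attempts are independent and computes the exact one-sided (Clopper–Pearson) lower confidence bound for the zero-failure case, where p**n = 1 − confidence, together with the familiar rule-of-three approximation for the failure rate. The function names are hypothetical.

```python
def success_lower_bound_all_passed(n_trials: int, confidence: float = 0.95) -> float:
    """Exact one-sided (Clopper-Pearson) lower bound on the success probability
    when all n_trials succeeded: solve p**n_trials = 1 - confidence for p."""
    alpha = 1.0 - confidence
    return alpha ** (1.0 / n_trials)


def rule_of_three_failure_bound(n_trials: int) -> float:
    """Approximate 95% upper bound on the failure probability after zero failures."""
    return 3.0 / n_trials


if __name__ == "__main__":
    n = 30  # attempts reported in the text, all successful
    print(f"95% lower bound on success rate: {success_lower_bound_all_passed(n):.3f}")  # 0.905
    print(f"rule-of-three failure bound: {rule_of_three_failure_bound(n):.3f}")        # 0.100
```

Under these assumptions, 30 successes out of 30 are still consistent, at 95% confidence, with a failure rate of up to roughly 9.5%.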

As a result of the development of sensor and imaging technologies, new applications in robotics are emerging, especially in human–robot collaboration. Detailed research in [21] focused on identifying the main strengths and weaknesses of augmented reality (AR) in industrial robot applications. The analysis shows that AR is mainly used to control and program robotic arms, to visualise general tasks or robot information, and to visualise the industrial robot workspace. The results indicate that AR systems are faster than traditional approaches; users rate AR systems more highly in terms of likeability and usability; and AR seems to reduce physical workload, whereas the impact on mental workload depends on the interaction interface [16]. Nevertheless, industrial implementation of AR is still limited by insufficient accuracy, occlusion problems, and the limited field of view of wearable AR devices.

A summary of the analysed robotisation strategies indicates that each has its own implementation field. The classical strategy is well suited to strictly controlled environments. The modern strategy ensures more flexible operation and is suitable for unpredictable environments. Nevertheless, the strict line between these strategies has gradually disappeared due to advances in sensing technologies, artificial intelligence, and computer vision. A typical industrial robot equipped with modern sensing and control systems can operate similarly to a cobot. According to [22], collaborative regimes can be realised using industrial robots with laser sensors and vision systems, or by altering the controller, provided that compliance with the ISO/TS 15066 standard [23], which specifies parameters and materials adapted to safe activities with and near humans, is ensured. This standard defines four main classes of safety requirements for collaborative robots: safety-rated monitored stop; hand guiding; speed and separation monitoring; and power and force limiting.
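For the speed and separation monitoring mode, ISO/TS 15066 expresses the protective separation distance as a sum of contributions, S_p = S_h + S_r + S_s + C + Z_d + Z_r: the operator’s approach, the robot’s motion during its reaction time, the robot’s stopping distance, an intrusion distance, and the position uncertainties of the operator and the robot. The Python sketch below evaluates a constant-speed simplification of that sum, with S_h = v_h(T_r + T_s) and S_r = v_r·T_r. It is an illustration under stated assumptions, not a certified safety calculation: the class and function names are hypothetical, the parameter values are made up (the human speed of 1.6 m/s is a commonly used walking-speed value), and the standard itself defines how each term must be determined.

```python
from dataclasses import dataclass


@dataclass
class SsmParameters:
    """Inputs for a constant-speed protective separation distance (SI units)."""
    human_speed: float         # v_h, m/s, operator speed towards the robot
    robot_speed: float         # v_r, m/s, robot speed towards the operator
    reaction_time: float       # T_r, s, sensing and controller reaction time
    stopping_time: float       # T_s, s, time for the robot to stop
    stopping_distance: float   # S_s, m, distance travelled by the robot while stopping
    intrusion_distance: float  # C, m, body-part intrusion before detection
    sensor_uncertainty: float  # Z_d, m, operator position measurement uncertainty
    robot_uncertainty: float   # Z_r, m, robot position uncertainty


def protective_separation_distance(p: SsmParameters) -> float:
    """S_p = S_h + S_r + S_s + C + Z_d + Z_r, assuming constant speeds."""
    s_h = p.human_speed * (p.reaction_time + p.stopping_time)  # operator approach
    s_r = p.robot_speed * p.reaction_time                       # robot motion before braking
    return (s_h + s_r + p.stopping_distance + p.intrusion_distance
            + p.sensor_uncertainty + p.robot_uncertainty)


def motion_allowed(measured_distance: float, p: SsmParameters) -> bool:
    """The robot may keep moving only while the measured separation is at least S_p."""
    return measured_distance >= protective_separation_distance(p)


if __name__ == "__main__":
    params = SsmParameters(1.6, 0.5, 0.1, 0.3, 0.2, 0.1, 0.05, 0.01)
    print(f"S_p = {protective_separation_distance(params):.2f} m")  # S_p = 1.05 m
```

With these hypothetical values, an operator detected 1.2 m away would allow motion to continue, whereas 1.0 m would require a protective stop; reducing the robot speed or the stopping time shrinks S_p and lets the operator come closer.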

In addition, all improvements and advances in robotics can be classified into two main types: universal and application-dependent. The remainder of this article reviews and classifies the latest advances in robotics according to their areas of implementation.

3. Recent Achievements in Industrial Robotics Classified according to Implementation Area

3.1. Human–Machine Interaction

To date, manual human work has often been replaced by robotic systems in industry. However, within complex systems, interaction between humans and machines/robots (HMI) is still required. HMI is an area of research related to the development of robotic systems based on understanding, evaluation, and analysis; such systems combine various forms of cooperation or interaction with humans. Interaction requires communication between robots and humans, and human communication and collaboration with a robot system can take many forms. These forms are greatly influenced by whether the human is close to the robot and by the context being used: (i) human–computer context (keyboard, buttons, etc.); (ii) real procedures context (haptics, sensors); and (iii) close and exact interaction. Therefore, human–robot communication or interaction can be divided into two main categories: remote interaction and close interaction. Remote interaction takes place through remote operation or supervised control. Close interaction takes place through operation with an assistant or companion and may include physical interaction. Because close interactions are the most difficult, a number of aspects must be considered to ensure successful collaboration, i.e., real-time algorithms, “touch” detection and analysis, autonomy, semantic understanding capabilities, and AI-aided anticipation skills. A summary of the relevant research focused on improving and developing HMI methods is provided in Table 1.

Table 1. Research focused on human–machine interaction.

Objective | Technology | Approach | Improvement | Ref.

To improve the flexibility, productivity, and quality of a multi-pass gas tungsten arc welding (GTAW) process performed by a collaborative robot. | A haptic interface; a 6-axis robotic arm (Mitsubishi MELFA RV-13FM-D); an end effector with a GTAW torch; a monitoring camera (Xiris XVC-1000); a load cell (ATI Industrial Automation Mini45-E) to evaluate tool force interactions with workpieces. | A haptic-based approach, designed and tested in a manufacturing scenario, proposing light and low-cost real-time algorithms for “touch” detection. | Two main criteria were analysed to assess performance: the 3-Sigma rule and the Hampel identifier. Experimental results showed better performance of the 3-Sigma rule compared with the Hampel identifier in terms of precision (mean value of 99.9%) and miss rate (mean value of 10%). Results confirmed the influence of the contamination level of the dataset. This algorithm is a significant advance towards using light and simple machine learning approaches in real-time applications. | [24,25]

To produce more advanced or complex forms of interaction by enabling cobots with semantic understanding capabilities or AI-aided anticipation skills. | Collaborative robots. | Artificial intelligence. | The overview provides hints of future cobot developments and identifies future research frontiers related to economic, social, and technological dimensions. | [26]

To strike a balance in finding a suitable level of autonomy for human operators. | Model of Remotely Instructed Robots (RIRs). | Modelling method. | A model was developed in which the robot is autonomous in task execution but also aids the operator’s ultimate decision-making about what to do next. Presenting the robot’s own model of the work scene enables corrections to be made by the robot and can enhance the operator’s confidence in the robot’s work. | [27,28]
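The two outlier criteria compared in [24,25] for “touch” detection can be sketched on a window of force samples. The 3-Sigma rule flags values more than three standard deviations from the mean, whereas the Hampel identifier uses the median and the scaled median absolute deviation (MAD). This sketch applies both criteria to a whole window rather than as a sliding filter. The function names and the force values are hypothetical.

```python
import statistics
from typing import List, Sequence

def three_sigma_flags(samples: Sequence[float], k: float = 3.0) -> List[bool]:
    """Flag samples farther than k standard deviations from the window mean."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    return [abs(x - mu) > k * sigma for x in samples]

def hampel_flags(samples: Sequence[float], k: float = 3.0) -> List[bool]:
    """Flag samples farther than k robust standard deviations from the window median.

    1.4826 * MAD is a consistent estimate of the standard deviation for Gaussian noise.
    """
    med = statistics.median(samples)
    mad = statistics.median(abs(x - med) for x in samples)
    return [abs(x - med) > k * 1.4826 * mad for x in samples]

if __name__ == "__main__":
    # Hypothetical load-cell readings [N]: noise around zero, then one contact spike.
    forces = [0.0, 0.1, -0.1] * 10 + [5.0]
    print(three_sigma_flags(forces).index(True))  # 30
    print(hampel_flags(forces).index(True))       # 30
```

The mean and standard deviation are themselves inflated by large outliers, while the median and MAD are not. This is the usual motivation for the Hampel identifier. Which criterion performs better in practice depends on the contamination level of the data, as the results in Table 1 indicate.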

The interaction between humans and robots or mechatronic systems encompasses many interdisciplinary fields, including the physical sciences, social sciences, psychology, artificial intelligence, computer science, robotics, and engineering. This field examines all possible situations in which a human and a robot can systematically collaborate or complement each other. Thus, the main goal is to provide robots with various competencies that facilitate their interaction with humans. Implementing such competencies requires modelling real-life situations and making predictions, applying these models in interaction with robots, and making this interaction as efficient as possible, i.e., inherently intuitive, based on human experience and artificial intelligence algorithms.

Various interfering aspects of human–robot interaction (Table 2) may lead to different future perspectives.


Table 2. Interfering aspects in human–robot interaction.

Objective | Interaction | Approach | Solution | Ref.

Frustration | Close cooperative work. | Controlled coordination. | Sense of control of frustration; affective computing. | [29]

Emotion recognition | By collecting different kinds of data. | Discrete models describing emotions; facial expression analysis; camera positioning; affective computing. | Empowering robots to observe, interpret, and express emotions; endowing robots with emotional intelligence. | [30]

Decoding of action observation | Elucidating the neural mechanisms of action observation and intention understanding. | Decoding the underlying neural processes. | The dynamic involvement of the mirror neuron system (MNS) and the theory of mind (ToM)/mentalising network during action observation. | [31]

Verbal and non-verbal communication | Interactive communication. | Symbol grounding. | Composition of grounded semantics, online negotiation of meaning, affective interaction and closed-loop affective dialogue, mixed speech-motor planning, massive acquisition of data-driven models for human–robot communication through crowd-sourced online games, and real-time exploitation of online information and services for enhanced human–robot communication. | [32]

In summary, the increasingly widespread use of robots and the shortage of highly skilled professionals on the market set clear directions for future development in the HMI area. The main aspirations are an intuitive, human-friendly interface; faster and simpler programming methods; advanced communication features; and robot reactions to human movements, mood, and even psychological state. Methods to monitor human actions and emotions [33], fusion of sensor data, and machine learning are key technologies for further improvement in the HMI area.

3.2. Object Recognition

Object recognition is a typical issue in industrial robotics applications such as sorting, packaging, grouping, pick and place, and assembly (Table 3). The selection of an appropriate recognition method and equipment depends mainly on the given task, the object type, and the number of parameters to be recognised. If the number of parameters is small, simpler sensing technologies based on typical approaches (geometry measurement, weighing, evaluation of material properties) can be implemented. If, in contrast, there are many parameters to recognise, photo or video analysis is preferred. The required two- or three-dimensional information can be extracted from images or video using computer vision techniques such as object localisation and recognition. Various vision-based object recognition techniques have been developed, such as appearance-, model-, template-, and region-based approaches. Most vision recognition methods are based on deep learning [34] and other machine learning methods.

In a previous study [35], a lightweight Franka Emika Panda cobot with seven degrees of freedom and a RealSense D435 RGB-D camera mounted on its end effector was used to extend the robot’s default functions. Instead of using a machine learning technique based on a large dataset, the authors proposed a method to program the robot from a single demonstration. This robotic system can detect various objects regardless of their position and orientation, achieving an average success rate of more than 90% with less than 5 min of training time, using an Ubuntu 16.04 server running on an Intel(R) Core(TM) i5-2400 CPU (3.10 GHz) and an NVIDIA Titan X GPU.

Another approach for grasping randomly placed objects was presented in [36]. The authors proposed a set of performance metrics and compared four robotic systems for bin picking, and took first place in the Amazon Robotics Challenge 2017. The survey results show that the most promising solutions for such a task are RGB-D sensors and CNN-based algorithms for object recognition, combined with suction-based and typical two-finger grippers for grasping different objects (vacuum grippers for stiff objects with large, smooth surface areas, and two-finger grippers for air-permeable items).
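RGB-D sensors deliver a depth value for each pixel. A detected object pixel is typically converted to a 3D point with the pinhole camera model and then transformed into the robot base frame. The sketch below makes the following assumptions:

- the intrinsics (fx, fy, cx, cy) are known;
- the depth image is undistorted and aligned with the colour image;
- a camera-to-base transform is available from hand-eye calibration.

The function names and all numerical values are hypothetical.

```python
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]

def deproject_pixel(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Back-project pixel (u, v) with metric depth into the camera frame (pinhole, no distortion)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def camera_to_base(p: Point3, T: Sequence[Sequence[float]]) -> Point3:
    """Apply a 4x4 homogeneous transform (camera frame -> robot base frame) to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))

if __name__ == "__main__":
    p_cam = deproject_pixel(380, 240, 0.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # Hypothetical calibration: camera axes aligned with the base, offset by (0.4, 0.0, 0.3) m.
    T_base_cam = [[1, 0, 0, 0.4], [0, 1, 0, 0.0], [0, 0, 1, 0.3], [0, 0, 0, 1]]
    print([round(c, 3) for c in camera_to_base(p_cam, T_base_cam)])  # [0.45, 0.0, 0.8]
```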


Similar localisation and sorting tasks appear in the food and automotive industries and in almost every production unit. In [37], an experimental method was proposed that uses a pneumatic robot arm to separate objects from a set according to their colour. If the recognised colour of the workpiece meets the requirements, the workpiece is picked by the robotic arm; otherwise, it is rejected. The sorting system relies on an image processing algorithm implemented in MATLAB. More advanced object recognition methods based on simultaneous colour and height detection are presented in [38]. A robotic arm with six degrees of freedom (DoF) and a camera with computer vision software achieve a sorting efficiency of about 99%.
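A colour-and-height sorting decision of the kind described in [37,38] can be sketched in a few lines. The sketch rests on these assumptions:

- the hue windows, saturation and value cut-offs, and height range are hypothetical;
- the mean RGB value of the workpiece region and its height are computed elsewhere.

The cited systems use their own image processing pipelines (e.g., MATLAB in [37]) rather than this code.

```python
import colorsys
from typing import Dict, Optional, Set, Tuple

# Hypothetical hue windows (hue in [0, 1)); a window with lo > hi wraps around 0 (red).
HUE_WINDOWS: Dict[str, Tuple[float, float]] = {
    "red": (0.95, 0.05),
    "green": (0.25, 0.45),
    "blue": (0.55, 0.72),
}

def classify_colour(rgb: Tuple[int, int, int],
                    min_sat: float = 0.3, min_val: float = 0.2) -> Optional[str]:
    """Map the mean 8-bit RGB value of a workpiece region to a colour class, or None."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < min_sat or v < min_val:  # greyish or dark regions: hue is unreliable
        return None
    for name, (lo, hi) in HUE_WINDOWS.items():
        inside = (lo <= h <= hi) if lo <= hi else (h >= lo or h <= hi)
        if inside:
            return name
    return None

def sort_decision(rgb: Tuple[int, int, int], height_mm: float,
                  accepted: Set[str], height_range_mm: Tuple[float, float]) -> str:
    """Pick the workpiece only if both its colour and its height meet the requirements."""
    colour = classify_colour(rgb)
    lo, hi = height_range_mm
    if colour in accepted and lo <= height_mm <= hi:
        return f"pick:{colour}"
    return "reject"

if __name__ == "__main__":
    print(sort_decision((0, 200, 0), 30.0, {"green"}, (25.0, 35.0)))  # pick:green
    print(sort_decision((0, 200, 0), 50.0, {"green"}, (25.0, 35.0)))  # reject
```

Working in HSV rather than RGB makes the colour test less sensitive to lighting intensity, because brightness changes mostly affect the value channel.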

Pengchang Chen et al. used a five-DoF robot arm (“OWI Robotic Arm Edge”) to validate the practicality and feasibility of a faster region-based convolutional neural network (Faster R-CNN) model using a dataset containing images of symmetric objects [39]. Objects were classified by colour and as defective or non-defective.

Despite significant progress in existing technologies, randomly placed, unpredictable objects remain a challenge in robotics. The success of a sorting task often depends on the accuracy with which the recognisable parameters can be defined. Yan Yu et al. [40] proposed an RGB-D-based method for solid waste object detection. Their waste sorting system consists of a server, vision sensors, industrial robots, and a rotational speedometer. Experiments on solid waste image analysis yielded a mean average precision of 49.1%.
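Mean average precision (mAP), the metric quoted for [40], averages the per-class average precision (AP). AP in turn depends on matching detections to ground-truth boxes by intersection over union (IoU). The following is a minimal single-image, single-class sketch. It assumes the common IoU threshold of 0.5 and all-point interpolation. The exact evaluation protocol behind the 49.1% figure is not stated here, and benchmarks such as COCO additionally average over several IoU thresholds.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections: Sequence[Tuple[float, Box]],
                      ground_truth: Sequence[Box], iou_thr: float = 0.5) -> float:
    """AP for one class: greedy matching in score order, then all-point interpolation."""
    if not ground_truth:
        return 0.0
    matched = [False] * len(ground_truth)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    for rank, (_, box) in enumerate(ranked, start=1):
        overlaps = [iou(box, gt) for gt in ground_truth]
        best = max(range(len(overlaps)), key=overlaps.__getitem__)
        if overlaps[best] >= iou_thr and not matched[best]:
            matched[best] = True  # true positive; each ground-truth box is matched once
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truth))
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        # interpolated precision: best precision achieved at this recall level or beyond
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap

def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, two ground-truth boxes and three detections (correct, spurious, correct, in score order) give AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.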

Furthermore, Wen Xiao et al. designed an automatic sorting robot that uses height maps and near-infrared (NIR) hyperspectral images to locate the region of interest (ROI) of objects and to perform online statistical pixel-based classification within contours [41]. This robot can automatically sort construction and demolition waste ranging in size from 0.05 to 0.5 m. The online recognition accuracy of the developed sorting system reaches almost 100%, and its operating speed reaches up to 2028 picks/h.

Another challenging issue in object recognition and manipulation concerns objects that have undefined shapes and are contaminated by dust or smaller particles, such as minerals or coal. Such tasks often require not only recognising the object but also determining the position of its centre of mass. Man Li et al. [42] proposed an image processing-based coal and gangue sorting method. Particle analysis of coal and gangue samples is performed using morphological erosion and dilation to obtain a complete, clean target sample. The object’s centre of mass is obtained using the centre-of-mass method, which consists of particle removal and filling, image binarisation, separation of overlapping samples, reconstruction, and particle analysis. The presented method achieved identification accuracies of 88.3% and 90.0% for coal and gangue samples, respectively, and the average errors of the object centre-of-mass coordinates in the x and y directions were 2.73% and 2.72%, respectively [42].
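The centre-of-mass step can be illustrated on a binary mask in three stages:

1. threshold the grey-level image;
2. apply a morphological opening (erosion, then dilation) to remove small particles;
3. take the centroid of the remaining foreground pixels.

This is a pure-Python sketch with a 3×3 structuring element, and the helper names are hypothetical. It yields the image-plane centroid, which coincides with the projected centre of mass only if the object is assumed to have uniform density and thickness. It also omits the overlap separation and reconstruction steps of [42].

```python
from typing import Callable, Iterable, List, Optional, Tuple

Grid = List[List[int]]

def binarise(img: Grid, threshold: int) -> Grid:
    """1 where the grey level reaches the threshold, otherwise 0."""
    return [[1 if p >= threshold else 0 for p in row] for row in img]

def _morph(mask: Grid, reduce_fn: Callable[[Iterable[int]], int]) -> Grid:
    """Apply min (erosion) or max (dilation) over each 3x3 neighbourhood.

    Neighbours outside the image are ignored, so the border is not treated as background.
    """
    h, w = len(mask), len(mask[0])
    return [[reduce_fn(mask[yy][xx]
                       for yy in range(max(0, y - 1), min(h, y + 2))
                       for xx in range(max(0, x - 1), min(w, x + 2)))
             for x in range(w)] for y in range(h)]

def opening(mask: Grid) -> Grid:
    """Erosion followed by dilation: removes specks smaller than the 3x3 element."""
    return _morph(_morph(mask, min), max)

def centroid(mask: Grid) -> Optional[Tuple[float, float]]:
    """(x, y) centroid of the foreground pixels: first image moments over the zeroth moment."""
    n = sx = sy = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                n += 1
                sx += x
                sy += y
    return (sx / n, sy / n) if n else None
```

On a 7 × 7 test image containing a 3 × 3 object centred at (3, 3) and one isolated noise pixel, the raw centroid is pulled to (3.3, 2.7). After the opening, the centroid returns to (3.0, 3.0).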

Intelligent autonomous robots for picking different kinds of objects have been studied as a possible means of overcoming the current limitations of existing robotic solutions for picking objects in cluttered environments [43]. This autonomous robot, which can also be used for commercial purposes, has an integrated two-finger gripper and a soft robotic end effector to grasp objects of various shapes. A dedicated algorithm solves the 3D perception problems caused by cluttered environments and selects the right grasping point. When lines rather than corners are detected, the time required depends significantly on the configuration of the objects. It ranges from 0.02 s when the objects have almost the same depth to 0.06 s in the worst case, when the depth of the tactile objects is greater than the lowest depth but is not perceived [43].

In robotics, the task of object recognition often includes more than recognition and the determination of coordinates: it can also play an essential role in creating the robot control program. Using an ABB IRB 140 robot and a digital camera, a low-cost shape identification system was developed and implemented, which is particularly important given the high variability of welded products [44]. The authors developed an algorithm that recognises the required toolpath from a captured image. The algorithm defines the path as a complex polynomial and then approximates it by simpler shapes with fewer coordinates (lines, arcs, splines), so that the tool movement can be realised using standard robot programming language features.
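Replacing a detailed contour with a few simple primitives before generating robot moves can be illustrated with the Ramer–Douglas–Peucker algorithm. This algorithm reduces a polyline to line segments that stay within a distance tolerance of the original. It is a swapped-in technique used here for illustration only. The method in [44] fits lines, arcs, and splines, whereas this sketch produces line segments only. The tolerance and the function names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def _distance_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / length

def simplify_path(points: Sequence[Point], eps: float) -> List[Point]:
    """Ramer-Douglas-Peucker: keep the farthest point if it deviates more than eps, recurse."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _distance_to_line(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= eps:
        return [a, b]  # the whole stretch is close enough to one straight segment
    left = simplify_path(points[: idx + 1], eps)
    right = simplify_path(points[idx:], eps)
    return left[:-1] + right  # drop the duplicated split point

if __name__ == "__main__":
    contour = [(0, 0), (1, 0.01), (2, 0), (3, 0), (3, 1), (3, 2)]
    print(simplify_path(contour, eps=0.1))  # [(0, 0), (3, 0), (3, 2)]
```

Each pair of consecutive waypoints could then be executed as one linear move in the robot’s native language.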

Moreover, object recognition can provide machine learning algorithms with data for analysing human behaviour. Such an approach was presented by Hiroaki et al. [45], who studied the behaviour of human crowds and formulated a new forecasting task, called crowd density forecasting, using a fixed surveillance camera. The main goal was to predict how crowd density would change in unseen future frames. To address this task, patch-based density forecasting networks (PDFNs) were developed. PDFNs project a variety of complex crowd density dynamics throughout the scene based on a set of spatially overlapping patches, thereby adapting the receptive fields of fully convolutional networks. Such a solution could be used to train robotic swarms, because such swarms behave similarly to humans in crowded areas.
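The patch-based decomposition behind PDFNs can be illustrated independently of the network itself. A density map is cut into overlapping patches, and each patch is processed (in PDFNs, by a learned forecaster). The results are then merged back by averaging wherever patches overlap. The sketch covers only this cut-and-merge bookkeeping and includes no forecasting model. The patch size, stride, and helper names are assumptions.

```python
from typing import List, Tuple

Grid = List[List[float]]
Patch = Tuple[Tuple[int, int], Grid]  # ((top, left), patch values)

def extract_patches(grid: Grid, size: int, stride: int) -> List[Patch]:
    """Cut a density map into size x size patches; a stride smaller than size gives overlap."""
    h, w = len(grid), len(grid[0])
    return [((y, x), [row[x:x + size] for row in grid[y:y + size]])
            for y in range(0, h - size + 1, stride)
            for x in range(0, w - size + 1, stride)]

def merge_patches(patches: List[Patch], shape: Tuple[int, int]) -> Grid:
    """Reassemble (e.g., per-patch forecasts) by averaging where patches overlap.

    Cells covered by no patch stay 0.0, so size and stride should be chosen to cover every cell.
    """
    h, w = shape
    acc = [[0.0] * w for _ in range(h)]
    cnt = [[0] * w for _ in range(h)]
    for (y, x), values in patches:
        for dy, row in enumerate(values):
            for dx, v in enumerate(row):
                acc[y + dy][x + dx] += v
                cnt[y + dy][x + dx] += 1
    return [[a / c if c else 0.0 for a, c in zip(ra, rc)] for ra, rc in zip(acc, cnt)]
```

With identity processing, merging the extracted patches reproduces the original map. In a forecasting setting, the overlap averaging smooths disagreements between neighbouring patch predictions.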

Table 3. Research focused on object recognition in robotics.

Objective | Technology | Approach | Improvement | Ref.

Extending the default “program from demonstration” feature of collaborative robots to adapt them to environments with moving objects. | Franka Emika Panda cobot with 7 degrees of freedom and a RealSense D435 RGB-D camera mounted on the end effector. | Grasping method fine-tuned using reinforcement learning techniques. | The system can grasp various objects from a demonstration, regardless of their position and orientation, in less than 5 min of training time. | [35,46]

Introduction of a set of metrics for the primary comparison of the detailed functionality and performance of robotic systems. | Robots with different grippers. | Recognition method and grasping method. | Original robot performance metrics were developed and tested on four robot systems used in the Amazon Robotics Challenge competition. The analysis showed the differences between the systems and promising solutions for further improvement. | [36,45,47]

To build a low-cost shape identification system for programming industrial robots for the 2D welding process. | ABB IRB 140 robot with a digital camera that detects contours on a 2D surface. | Binarisation and contour recognition method. | A low-cost system based on industrial vision was developed and implemented for simple programming of the movement path. | [48,49]

Patch-based density forecasting networks (PDFNs) that directly forecast crowd density maps of future frames instead of the trajectories of each moving person in the crowd. | Fixed surveillance camera. | Density forecasting in image space; density forecasting in latent space; PDFNs; spatio-temporal patch-based Gaussian filter. | The proposed patch-based models, PDFN-S and PDFN-ST, outperformed the baselines on all datasets. PDFN-ST successfully forecasted the dynamics of individuals, a small group, and a crowd. The approach cannot always forecast sudden changes in walking direction, especially when they occur in later frames. | [45]

To separate objects from a set according to their colour. | Pneumatic robot arm. | Force in response to applied pressure. | The proposed robotic arm may be considered for sorting. Servo motors and image processing cameras can be used to achieve higher repeatability and accuracy. | [37,50]

An image processing-based method for coal and gangue sorting; development of a positioning and identification system. | Coal and gangue sorting robot. | Threshold segmentation methods; clustering method; morphological erosion and dilation methods; centre-of-mass method. | Efficiency was evaluated using images of coal and gangue randomly picked from the production environment. The average coordinate errors in the x and y directions are 2.73% and 2.72%, and the identification accuracy of coal and gangue samples is 88.3% and 90.0%, respectively. The total time for identification, positioning, and opening the camera averaged 0.130 s per sample. | [41,51,52]

A computer vision-based robotic sorter capable of simultaneously detecting and sorting objects by their colours and heights; the vision-based process encompasses identification, manipulation, selection, and sorting of objects depending on colour and geometry. | A 5 or 6 DoF robotic arm and a camera with computer vision software detecting various colours, heights, and geometries. | Computer vision methods with the Haar Cascade algorithm; the Canny edge detection algorithm is used for shape identification. | A robotic arm is used for picking and placing objects based on colour and height. In the proposed system, colour and height sorting efficiency is around 99%. The effectiveness, high accuracy, and low cost of computer vision combined with a robotic arm for sorting by colour and shape are demonstrated. | [38,53,54]

A novel multimodal convolutional neural network for RGB-D object detection. | A base solid waste sorting system consisting of a server, vision sensors, an industrial robot, and a rotational speedometer. | Comparison with single-modal methods; evaluation on the Washington RGB-D object recognition benchmark. | Meets real-time requirements while ensuring high precision: 49.1% mean average precision, processing images in real time at 35.3 FPS on a single Nvidia GTX 1080 GPU. Novel dataset. | [40,55]

Practicality and feasibility of a Faster R-CNN model using a dataset containing images of symmetric objects. | Five-DoF robot arm “OWI Robotic Arm Edge”. | CNN learning algorithm that processes images with multiple layers (filters) and classifies objects in images; Region Proposal Network (RPN). | The accuracy and precision rate are steadily enhanced. The accuracy of detecting defective and non-defective objects is successfully improved by increasing the training dataset to up to 400 images of defective and non-defective objects. | [39,56,57]

An automatic sorting robot using height maps and near-infrared (NIR) hyperspectral images to locate objects’ ROI and conduct online statistical pixel-based classification in contours; 24/7 monitoring. | A robotic system with four modules: (1) the main conveyor, (2) a detection module, (3) a light source module, and (4) a manipulator. | Mask R-CNN and YOLOv3 algorithms; method for an automatic sorting robot; identification includes pixel, sub-pixel, and object-based methods. | The prototype machine can automatically sort construction and demolition waste in the size range of 0.05–0.5 m. The sorting efficiency can reach 2028 picks/h, and the online recognition accuracy nearly reaches 100%. Can be applied in land monitoring technology. | [41,58,59]

Overcoming current limitations of existing robotic solutions for picking objects in cluttered environments. | Intelligent autonomous robots for picking different kinds of objects; universal jamming gripper. | A comparative study of the algorithmic performance of the proposed method. | When a corner is detected, it takes just 0.003 s to output the target point. With lines, the required time depends on the object configuration, ranging from 0.02 s, when objects have almost the same depth, to 0.06 s in the worst-case scenario. | [43,60–62]

Three main trends emerge from the research on object recognition in robotics: (i) object recognition for localisation and subsequent manipulation; (ii) object recognition for shape evaluation and automatic generation of robot program code for the corresponding movement; and (iii) object recognition for behaviour analysis, providing input data for machine learning algorithms. Many reliable solutions for the first trend have been tested in industrial environments, whereas the second and third are still under development.

3.3. Medical Application

The da Vinci Surgical System is the best-known robotic manipulator used in surgical applications. Florian Richter et al. [63] used its Patient Side Manipulator (PSM) arm to implement reinforcement learning algorithms for surgical da Vinci robots. The authors presented the first open-source reinforcement learning environment for surgical robots, called dVRL [63]. This environment allows fast training of da Vinci robots for autonomous assistance and for collaborative or repetitive tasks during surgery. In the experiments, control policies were learned effectively in dVRL and could be transferred to a real robot with minimal effort. Although the learned policies covered only simple, primitive actions such as reaching and picking, they proved useful for suction and debris removal in a real surgical setting.
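Table 4 lists DDPG with Hindsight Experience Replay (HER) as the learning approach used with dVRL. The core idea of HER is to relabel stored transitions with goals that were actually achieved later in the same episode, so that sparse-reward tasks still provide a useful learning signal. The sketch below illustrates this relabelling step. The following are assumptions for illustration:

- the transition layout;
- the 0/−1 sparse reward and its tolerance;
- the "future" sampling strategy with k = 4.

dVRL’s own interfaces are not reproduced here.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Vec = Tuple[float, ...]
Transition = Tuple[Vec, Vec, Vec, float]  # (observation, action, goal, reward)

def sparse_reward(achieved: Sequence[float], goal: Sequence[float], tol: float = 0.01) -> float:
    """Assumed sparse reward: 0.0 if the achieved position is within tol of the goal, else -1.0."""
    return 0.0 if math.dist(achieved, goal) <= tol else -1.0

def her_relabel(episode: List[Dict[str, Vec]], k: int = 4, seed: int = 0) -> List[Transition]:
    """'Future' HER strategy: keep each transition with its original goal and add k
    copies whose goal is a position actually reached at the same or a later step."""
    rng = random.Random(seed)
    buffer: List[Transition] = []
    last = len(episode) - 1
    for t, step in enumerate(episode):
        achieved = step["next_achieved"]
        buffer.append((step["obs"], step["action"], step["goal"],
                       sparse_reward(achieved, step["goal"])))
        for _ in range(k):
            new_goal = episode[rng.randint(t, last)]["next_achieved"]  # randint is inclusive
            buffer.append((step["obs"], step["action"], new_goal,
                           sparse_reward(achieved, new_goal)))
    return buffer
```

Even when the original goal is never reached, the relabelled copies contain successful transitions. An off-policy learner such as DDPG can then learn from these stored transitions.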

Meanwhile, Yohannes Kassahun et al. reviewed the role of machine learning techniques in surgery, focusing on surgical robotics [64]. They found that the research community currently faces many challenges in applying machine learning to surgery and robotic surgery. The main issues are a lack of high-quality medical and surgical data, a lack of reliable metrics that adequately reflect learning characteristics, and the lack of a structured approach to the effective transfer of surgical skills for automated execution [64].

Nevertheless, the application of deep learning in robotics is a widely studied field. A 2017 review by Harry A. Pierson et al. emphasises the benefits and challenges of deep learning vis-à-vis robotics [65]. Similarly to [64], they found that the main limitations preventing the use of deep learning in medical robotics are the huge volume of training data required and the relatively long training time.

Surgery is not the only field of medicine in which robotic manipulators can be used. Another autonomous robotic grasping system, described by John E. Downey et al., introduces shared control of a robotic arm based on the interaction of a brain–machine interface (BMI) and a vision guidance system [66]. The BMI is used to decode the user’s intent to grasp or transfer an object. Visual guidance is used for low-level control tasks: short-range movements, definition of the optimal grasping position, alignment of the robot end effector, and grasping. Experiments showed that shared-control movements were more accurate, more efficient, and less difficult than transfer tasks performed using the BMI alone.
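The shared-control idea can be illustrated with a simple linear arbitration between the user’s decoded command and an autonomous command. In this scheme, the autonomy takes over progressively as the hand approaches the object. This is a generic policy-blending sketch, not the method of [66]. The weighting scheme, distance thresholds, and function names are assumptions.

```python
from typing import Sequence, Tuple

def autonomy_weight(distance_m: float, d_far: float = 0.30, d_near: float = 0.05) -> float:
    """Share of control given to vision-based autonomy: 0 far from the object, 1 very close."""
    if distance_m >= d_far:
        return 0.0
    if distance_m <= d_near:
        return 1.0
    return (d_far - distance_m) / (d_far - d_near)  # linear ramp between the two thresholds

def blend_velocity(user_v: Sequence[float], auto_v: Sequence[float],
                   distance_m: float) -> Tuple[float, ...]:
    """Blend the decoded BMI velocity with the autonomous velocity, component by component."""
    a = autonomy_weight(distance_m)
    return tuple((1.0 - a) * u + a * s for u, s in zip(user_v, auto_v))

if __name__ == "__main__":
    user, auto = (0.1, 0.0, 0.0), (0.0, 0.1, 0.0)
    print(blend_velocity(user, auto, 0.5))  # (0.1, 0.0, 0.0): user in full control far away
    print(blend_velocity(user, auto, 0.0))  # (0.0, 0.1, 0.0): autonomy completes the grasp
```

A distance-based ramp keeps the user in charge of gross transport and hands fine alignment to the vision system. This matches the division of labour described above.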

Another medical application that requires fast robot programming methods is the assessment of functional abilities in functional capacity evaluations (FCEs) [67]. Currently, no single rational solution simulates all or many of the standard work tasks that could be used to improve the assessment and rehabilitation of injured workers. Therefore, the authors proposed that workplace tasks can be simulated using a robotic system with machine learning algorithms. Such a system can improve the assessment of functional abilities in FCEs and functional rehabilitation by performing reaching manoeuvres or more complex tasks learned from an experienced therapist. Although this type of research is still in its infancy, robotics with integrated machine learning algorithms can improve the assessment of functional abilities [67].

Although the main task of robotic manipulators in medicine is the direct manipulation of objects or tools, these manipulators can also be used for therapeutic purposes for people with mental or physical disorders. Such applications are often limited by the robot’s ability to automatically perceive and respond as needed to maintain an engaging interaction. Ognjen Rudovic et al. presented a personalised deep learning framework that can adapt robot perception [68]. Focusing on robot perception, the researchers developed an individualised deep learning system that automatically assesses a patient’s emotional state and level of engagement. This makes it easier to monitor treatment progress and optimise the interaction between the patient and the robot.

Robotic technologies can also be applied in dentistry, although, to date, few of the fundamental ideas have been implemented. In a comprehensive review of robotics and artificial intelligence, Jasmin Grischke et al. present numerous approaches to applying these technologies [69]. Robotic technologies in dentistry can be used for maxillofacial surgery [70], tooth preparation [71], testing of toothbrushes [72], root canal treatment and plaque removal [73], orthodontics and jaw movement [74], tooth arrangement for full dentures [75], X-ray imaging radiography [76], swab sampling [77], etc.

A summary of research focused on robotics in medical applications is provided in Table 4. Robots are still not very popular in this area, which can be explained by technological and psychological/ethical factors. From the technical point of view, wider implementation is limited by the lack of fast and reliable methods for preparing robot programs. Regarding psychological and ethical factors, a large portion of society still considers robots unreliable. Therefore, robots are accepted only with significant hesitation.

Table 4. Robotic solutions in medical applications.

Objective | Technology | Approach | Improvement | Ref.

To create a bridge between the reinforcement learning and surgical robotics communities by presenting the first open-source reinforcement learning environments for surgical da Vinci robots. | Patient Side Manipulator (PSM) arm; da Vinci® Surgical Robot; Large Needle Driver (LND) with a jaw gripper to grab objects such as a suturing needle. | Reinforcement learning; OpenAI Gym; DDPG (Deep Deterministic Policy Gradients) and HER (Hindsight Experience Replay); V-REP physics simulator. | A new reinforcement learning environment was developed for fast and effective training of surgical da Vinci robots for autonomous operations. | [63]

A method of shared control in which the user controls a prosthetic arm using a brain–machine interface and receives assistance with positioning the hand when it approaches an object. | Brain–machine interface system; robotic arm; RGB-D camera mounted above the arm base. | Shared control system; an autonomous robotic grasping system. | A shared control system for a robotic manipulator, making control more accurate, more efficient, and less difficult than BMI control alone. | [66]

A personalised deep learning framework that can adapt robot perception of children’s affective states and engagement to different cultures and individuals. | Unobtrusive audiovisual sensors and wearable sensors providing the child’s heart rate, skin conductance (EDA), body temperature, and accelerometer data. | Feed-forward multilayer neural networks; GPA-net. | Achieved an average agreement of ~60% with human experts in estimating affect and engagement. | [68]

An overview of existing applications and concepts of robotic systems and artificial intelligence in dentistry and in functional capacity evaluations, of the role of ML in surgery using surgical robotics, and of deep learning vis-à-vis physical robotic systems, focusing on contemporary research. | An overview | An overview | An overview | [64,65,67,69]

Transoral robot for COVID-19 swab sampling. | Flexible manipulator, an endoscope with a monitor, and a master device. | Teleoperated configuration for swab sampling. | A flexible transoral robot with a teleoperated configuration is proposed to address the risks faced by surgeons during face-to-face COVID-19 swab sampling. | [77]

3.4. Path Planning, Path Optimisation

Robotic navigation aims to achieve accurate positioning while avoiding obstacles along the path. Constraints such as limited operating space, distance, energy, and time must be satisfied [78]. The trajectory formation process consists of four separate modules: perception, in which the robot receives the necessary information from its sensors; localisation, in which the robot determines its position in the environment; path planning; and motion control [79]. The development of autonomous robot path planning and path optimisation algorithms is one of the most challenging current research areas. However, any kind of path planning requires information about the robot’s initial position. For stationary robots, this information is usually readily available, unlike for industrial manipulators mounted on mobile platforms. For mobile robots and automated guided vehicles (AGVs), accurate self-localisation in various environments [80,81] is the basis for further trajectory planning and optimisation.

According to the amount of available information, robot path planning can be divided into two categories: local and global path planning. In local path planning, the robot has rather limited knowledge of the navigation environment. In global path planning, it has in-depth knowledge of the environment and reaches its destination by following a predetermined path. Robotic path planning methods have been applied in many fields, such as reconstructive surgery, ocean and space exploration, and vehicle control. For pure industrial robots, path planning refers to finding the best trajectory to transfer a tool or object to its destination in the robot workspace. Note that typical industrial robots are not suited to real-time path planning; trajectories are usually prepared in advance using online or offline programming methods. One possible technique is the use of specialised commercial computer-aided manufacturing (CAM) software such as Mastercam/Robotmaster or SprutCAM. However, the functionality of such software is relatively constrained and does not go beyond classical tasks such as welding or milling. The use of CAM software also requires highly qualified professionals. As a result, applying this software to individual installations is economically disadvantageous.

As an alternative to CAM software, methods based on copying the movements of highly skilled specialists using commercially available equipment, such as MIMIC from Nordbo Robotics (Antvorskov, Denmark), may be used. This platform allows robots to be taught smooth, complex paths by demonstration: the required movements are recorded, then smoothed and optimised. To overcome the limitations caused by the lack of real-time path planning features in robot controllers, additional external controllers and real-time communication with the manipulator are required. In the area of path planning and optimisation, experiments have been conducted on automatic object and 3D position detection [82], quasi-static path optimisation [83], image analysis [84], path smoothing [85], BIM [86], and accurate self-localisation in harsh industrial environments [80,81]. More information about the methods and approaches proposed by researchers is listed in Table 5.
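Global path planning on a known map is often illustrated with A* search. The sketch below is a generic 4-connected grid A* with a Manhattan-distance heuristic, not a method taken from the works cited above. The occupancy-grid representation (0 = free, 1 = obstacle), unit step costs, and function names are assumptions.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)

def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost.get(cell, float("inf")):
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None

if __name__ == "__main__":
    workspace = [[0, 0, 0, 0],
                 [1, 1, 1, 0],
                 [0, 0, 0, 0]]
    print(astar(workspace, (0, 0), (2, 0)))
```

The raw grid path is piecewise linear. In practice, it would be passed to a smoothing or optimisation step before being sent to the motion controller.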

Table 5. Research focused on path planning and optimisation.

Objective Technology Approach Improvement Ref.

Objective: The position of the objects and a possible real-time trajectory to an object.
Technology: A robotic system consisting of an ABB IRB120 robot equipped with a gripper and a 3D Kinect sensor.
Approach: Detection of the workpieces. Object recognition techniques are applied using available algorithms in MATLAB's Computer Vision and Image Acquisition Toolboxes.
Improvement: An algorithm for finding the 3D position of an object in real time based on colour segmentation. The main focus was on determining the object's depth from the Kinect sensor. The Kinect distinguished colours correctly, and the robot navigated accurately to the detected object.
Ref.: [82]

Objective: Combining eye-tracking and computer vision to automate the approach of a robot to its target point by acquiring the point's 3D location.
Technology: Eye-tracking device and webcam.
Approach: Image analysis and geometrical reconstruction.
Improvement: The computed 3D coordinates of the target have an average error of 5.5 cm, which is 92% more accurate than using eye-tracking alone to calculate the point of gaze (estimated error of 72 cm).
Ref.: [87]

Objective: Computer vision technology for real-time seam tracking in robotic gas tungsten arc welding (GTAW).
Technology: GTAW robot system comprising the robot arm, robot controller, vision system, isolation unit, weld power supply, and host computer.
Approach: Passive vision system and image processing.
Improvement: The developed method is feasible and meets the precision requirements of some robotic seam-tracking applications.
Ref.: [88]

Objective: A higher-fidelity model for predicting the entire pose-dependent frequency response function (FRF) of an industrial robot in milling processes, combining the advantages of Experimental Modal Analysis (EMA) and Operational Modal Analysis (OMA).
Technology: KUKA KR500-3 6 DOF industrial robot.
Approach: Hybrid statistical modelling: an FRF modelling method.
Improvement: Updating the EMA-calibrated Gaussian process regression (GPR) models of the robot FRF with OMA-based FRF data, through Bayesian inference and hyperparameter updating, improved the model's compliance RMSE by 26% and 27% for tool paths in the x and y directions, respectively, compared with EMA-based calibration alone. The methodology also reduced the average number of iterations and the calibration time required to determine the optimal GPR hyperparameters by 50.3% and 31.3%, respectively.
Ref.: [84]

Objective: Safe trajectories that do not neglect cognitive ergonomics or production efficiency.
Technology: UR3 lightweight robot.
Approach: Experimental tasks.
Improvement: The task execution time was reduced by 13.1% compared with the robot's default planner and by 19.6% compared with the minimum-jerk smooth collaboration planner. The approach is highly relevant both for manufacturers of collaborative robots (e.g., for integration as a path option in the robot pendant software) and for users (e.g., an online service that calculates the optimal path and then transfers it to the robot).
Ref.: [89]

Objective: An industrial robot moving between stud welding operations in a stud welding station.
Technology: Industrial robot.
Approach: Quasi-static path optimisation for an industrial robot.
Improvement: The method was successfully applied to a stud welding station in which an industrial robot moves between two stud welding operations. Even in a difficult case, the optimised path reduced the internal force in the dress pack and kept the dressed robot away from the surrounding geometry with a prescribed safety clearance throughout the entire motion.
Ref.: [83]

Objective: Learning and optimising an industrial assembly task under uncertainty.
Technology: Franka Emika Panda manipulator.
Approach: Task trajectory learning approach; task optimisation approach.
Improvement: The proposed approach enabled the robot to learn the task execution and compensate for task uncertainties. A comparison of the hidden Markov model with Bayesian optimisation (HMM + BO) against the HMM algorithm without optimisation shows the capability of the optimisation stage to compensate for task uncertainties: HMM + BO achieved an assembly task success rate of 93%, whereas HMM alone achieved only 19%.
Ref.: [90]

Objective: Post-processing and path optimisation based on non-linear errors to improve the accuracy of multi-joint industrial robot-based 3D printing.
Technology: Multi-joint industrial robot for 3D printing.
Approach: Path smoothing method.
Improvement: Multi-joint industrial robot-based 3D printing can be used for high-precision printing of complex freeform surfaces. However, an industrial robot with only three joints is used, and no joint-angle solutions for the tool orientations, which are essential for printing freeform surfaces, are proposed.
Ref.: [91]


Objective: A comparative study of robot pose optimisation using static and dynamic stiffness models for different cutting scenarios.
Technology: KUKA KR 500-3 industrial robot; aluminium 6061.
Approach: Complete pose (CP) and decoupled partial pose (DPP) methods; effect of the optimisation method on machining accuracy.
Improvement: Pose optimisation based on a dynamic model yields a significant improvement over static model-based optimisation for cutting conditions in which the time-varying cutting forces approach the robot's natural frequencies. Static model-based optimisation is sufficient when the frequency content of the cutting forces is not close to the robot's natural frequencies.
Ref.: [92]

Objective: Verifying the feasibility and validity of the proposed stiffness identification and configuration optimisation methods.
Technology: KUKA KR500 industrial robot.
Approach: Robot stiffness characteristics and optimisation methods; point selection method.
Improvement: The smooth processing strategy improves optimisation efficiency while ensuring minimal stiffness loss. In the machining of a vehicle engine cylinder head, milling quality improved noticeably after configuration optimisation, verifying the validity of these methods.
Ref.: [85]

Objective: Real-time compensation setups.
Technology: A standard KUKA KR120R2500 PRO industrial robot with a spindle end-effector.
Approach: Real-time closed-loop compensation method.
Improvement: Real-time metrology feedback cannot fully compensate for the sudden error spikes caused by backlash. The mitigation strategy of automatically reducing the feed rate (ASC) was shown to reduce backlash error significantly. However, ASC considerably increases the cycle time for toolpaths with many direction reversals and leads to uneven cutter chip load and variation in surface finish. Backlash therefore remains the largest source of residual error for a robot under real-time metrology compensation.
Ref.: [93]

Objective: A Building Information Model (BIM)-based robotic assembly model that contains all the information required for planning.
Technology: ABB IRB6700-235 robot (6 DOF), a construction plane (approximately 1.5 m × 0.9 m), a scene modelling camera (Sony a5100), and a modelling computer (Dell Precise).
Approach: Image-based 3D modelling method; experimental method.
Improvement: A general Industry Foundation Classes (IFC) model for robotic assembly contains all the information needed for task-level planning. BIM and image-based modelling are used to calibrate the robot pose so that the robot coordinate system, construction area, and assembly task are unified, and a simple conversion process turns the 3D placement point coordinates of each brick into robot control instructions. In experimental verification, task-level planning maintained the same accuracy as the traditional method but saved time on more complex tasks.
Ref.: [86]

Objective: A model of reversibly controlled industrial robots based on abstract semantics.
Technology: Robotic assembly.
Approach: Error recovery using reverse execution.
Improvement: A programming model that enables robot assembly programs to be executed in reverse. Temporarily switching the direction of program execution can be an efficient error recovery mechanism. Supporting reversibility in a robotic assembly language brings additional benefits, namely increased code reuse and automatically derived disassembly sequences.
Ref.: [94]

The control strategies for

robotic PiH assemblies and

the limitations of the current

robotic assembly

technologies.

Robotic PiH assembly