Louisiana State University Louisiana State University

LSU Scholarly Repository LSU Scholarly Repository

LSU Doctoral Dissertations Graduate School

2016

Garbage Collection for General Graphs Garbage Collection for General Graphs

Hari Krishnan

Louisiana State University and Agricultural and Mechanical College

Follow this and additional works at: https://repository.lsu.edu/gradschool_dissertations

Part of the Computer Sciences Commons

Recommended Citation Recommended Citation

Krishnan, Hari, "Garbage Collection for General Graphs" (2016).

LSU Doctoral Dissertations

. 573.

https://repository.lsu.edu/gradschool_dissertations/573

This Dissertation is brought to you for free and open access by the Graduate School at LSU Scholarly Repository. It

has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU

Scholarly Repository. For more information, please contact[email protected].

GARBAGE COLLECTION FOR GENERAL GRAPHS

A Dissertation

Submitted to the Graduate Faculty of the

Louisiana State University and

Agricultural and Mechanical College

in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy

in

The School of Electrical Engineering & Computer Science

The Division of Computer Science and Engineering

by

Hari Krishnan

B.Tech., Anna University, 2010

August 2016

Dedicated to my parents, Lakshmi and Krishnan.

ii

Acknowledgments

I am very grateful to my supervisor Dr. Steven R. Brandt for giving the opportunity to fulfill my dream of

pursuing Ph.D in computer science. His advice, ideas and feedback helped me understand various concepts

and get better as a student. Without his continuous support and guidance, I wouldn’t have come so far in my

studies and research. Our discussions were very effective and motivating.

I would also like to express my sincere gratitude to Dr. Costas Busch. His expertise in the area, insights,

motivation to direct the research in theoretical way, and advices helped me to be a better researcher. I would

like to thank Dr. Gerald Baumgartner for his constructive feedbacks, advices, and interesting conversations

about my work.

I would also like to thank Dr. Gokarna Sharma for useful feedbacks, constant help and collaborations. I

would like to thank my family for their constant moral support. It is my pleasure to thank my cousin Usha

who introduced me to computers and taught me more about very early age. I would also like to thank my

friends Sudip Biswas and Dennis Castleberry, who motivated me when required and helped me to focus on

my graduate school at all times.

iii

Table of Contents

Acknowledgments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . iii

List of Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vi

Abstract . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Chapter 1: Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Garbage Collection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1.1 How GC’s work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 Motivation and Objectives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.3 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.4 Dissertation Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Chapter 2: Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.1 Abstract Graph Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.2 Literature Review . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.3 Cycle detection using Strong-Weak . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.4 Brownbridge Garbage Collector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

2.5 Pitfalls of Brownbridge Garbage Collection . . . . . . . . . . . . . . . . . . . . . . . . . 14

Chapter 3: Shared Memory Multi-processor Garbage Collection . . . . . . . . . . . . . . . . . . 16

3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

3.1.1

Most Related Work: Premature Collection, Non-termination, and Exponential Cleanup

Time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

3.1.2 Other Related Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1.3 Paper Organization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.2 Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.2.1 Example: A Simple Cycle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.2.2 Example: A Doubly-Linked List . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.2.3 Example: Rebalancing A Doubly-Linked List . . . . . . . . . . . . . . . . . . . . 23

3.2.4 Example: Recovering Without Detecting a Cycle . . . . . . . . . . . . . . . . . . 25

3.3 Concurrency Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.3.1 The Single-Threaded Collector . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.3.2 The Multi-Threaded Collector . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.4 Correctness and Algorithm Complexity . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.5 Experimental Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.6 Future Work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.7 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

3.8 Appendix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

3.8.1 Multi-Threaded Collector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Chapter 4: Distributed Memory Multi-collector Garbage Collection . . . . . . . . . . . . . . . . . 44

4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

iv

4.2 Model and Preliminaries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

4.3 Single Collector Algorithm (SCA) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

4.3.1 Phantomization Phase . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

4.3.2 Correction Phase . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

4.4 Multi-Collector Algorithm (MCA) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.5 Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

4.6 Appendices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.6.1 Terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.6.2 Single Collector Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

4.6.3 Multi-collector Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

4.6.4 Appendix of proofs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Chapter 5: Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

Bibliography . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Vita . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

v

List of Figures

2.1

When root R deletes strong edge to A, through partial tracing A will have strong edge at the

end. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.2 When root R deletes strong edge to A, through partial tracing A will fail to identify liveness. 15

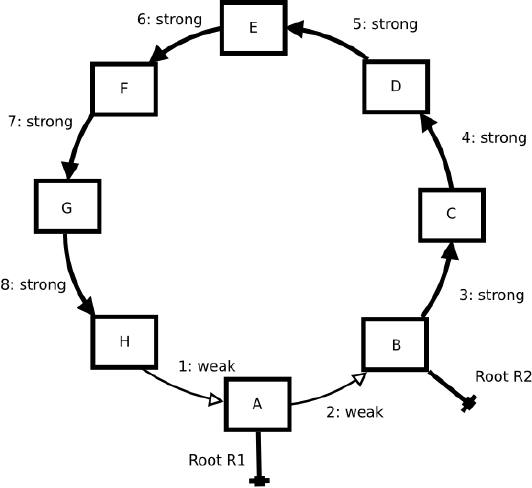

3.1

When root R1 is removed, the garbage collection algorithm only needs to trace link 2 to

prove that object A does not need to be collected. . . . . . . . . . . . . . . . . . . . . . . 18

3.2 Reclaiming a cycle with three objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.3 Doubly-linked list . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.4 Rebalancing a doubly-linked list . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.5 Graph model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.6 Subgraph model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.7

A large number of independent rings are collected by various number of worker threads.

Collection speed drops linearly with the number of cores used. . . . . . . . . . . . . . . . 32

3.8

A chain of linked cycles is created in memory. The connections are severed, then the roots

are removed. Multiple collector threads are created and operations partially overlap. . . . . 32

3.9

Graphs of different types are created at various sizes in memory, including cliques, chains

of cycles, large cycles, and large doubly linked lists. Regardless of the type of object,

collection time per object remains constant, verifying the linearity of the underlying collection

mechanism. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.1

We depict all the ways in which an initiator node,

A

, can be connected to the graph. Circles

represent sets of nodes. Dotted lines represent one or more non-strong paths. Solid lines

represent one or more strong paths. A T-shaped end-point indicates the root, R. If C

0

, D

0

, E

0

and F are empty sets, A is garbage, otherwise it is not. . . . . . . . . . . . . . . . . . . . 48

4.2 The above figure depicts the phase transitions performed by initiator in the algorithm. . . . 50

vi

Abstract

Garbage collection is moving from being a utility to a requirement of every modern programming language.

With multi-core and distributed systems, most programs written recently are heavily multi-threaded and

distributed. Distributed and multi-threaded programs are called concurrent programs. Manual memory

management is cumbersome and difficult in concurrent programs. Concurrent programming is characterized

by multiple independent processes/threads, communication between processes/threads, and uncertainty in

the order of concurrent operations.

The uncertainty in the order of operations makes manual memory management of concurrent programs

difficult. A popular alternative to garbage collection in concurrent programs is to use smart pointers. Smart

pointers can collect all garbage only if developer identifies cycles being created in the reference graph. Smart

pointer usage does not guarantee protection from memory leaks unless cycle can be detected as process/thread

create them. General garbage collectors, on the other hand, can avoid memory leaks, dangling pointers, and

double deletion problems in any programming environment without help from the programmer.

Concurrent programming is used in shared memory and distributed memory systems. State of the art

shared memory systems use a single concurrent garbage collector thread that processes the reference graph.

Distributed memory systems have very few complete garbage collection algorithms and those that exist use

global barriers, are centralized and do not scale well. This thesis focuses on designing garbage collection

algorithms for shared memory and distributed memory systems that satisfy the following properties: concur-

rent, parallel, scalable, localized (decentralized), low pause time, high promptness, no global synchronization,

safe, complete, and operates in linear time.

viii

Chapter 1

Introduction

1.1 Garbage Collection

A processor, an algorithm (software), and memory are the trio of computation. Memory is organized by

programming languages and developers to control the life of data. In this thesis, we abstract all data allocated

in memory as objects. In general, we define an object as any block of memory that saves information and

may contain address of other objects. Most programming languages support dynamic memory allocation.

Programming languages divide available memory into three types: stack, static and heap memory. Static

memory contains all the global variables used by the program. Stack memory is used to manage static

(fixed size) allocation of objects whose scope is well defined. Heap memory is used to manage dynamically

allocated objects whose scope cannot be determined at compile time.

The amount of static memory used is computed at the compilation phase and objects allocated in static

memory is never deleted. Stack memory is used to manage function calls and the allocation of objects

in a function’s scope. So the size of the stack memory required for the execution cannot be computed at

compilation time. Stack memory is filled from the lower address space of memory and heap memory is filled

from the higher address space of memory. When two kinds of memory meet, the program usually crashes.

Stack memory is used to store all objects that are actively in use or will be used in the near future. Heap

memory is used to store all objects whose scope is unknown to the program. Memory management is the

term used to describe management of the heap. When developers explicitly delete objects that are no longer

in use, it is called manual memory management.

There are three issues that happen when the heap is manually managed. They are dangling pointers,

double-free bugs, and memory leaks. All of the three issues are deadly and harmful. Some of them produce

wrong output, deletes memory that is in use, and also inefficiently organizes the heap memory. Apart from

all the above-mentioned problems, it is extremely difficult to manually manage memory for a certain class

of programs called concurrent programs. Automatic memory management avoids all the above-mentioned

problems. This thesis focuses on designing efficient algorithms for automatic memory management. The

process of automatically managing memory is termed as Garbage Collection (GC).

1

1.1.1 How GC’s work

The term garbage refers to the objects allocated in the heap that are not used anymore. All objects allocated

in memory can be referenced by their address. An object

x

is said to hold a reference to object

y

when

x

contains the address of

y

. An object is said to be in use if it is reachable through a chain of references starting

from stack or static memory. The objects in stack and static memory that are considered for defining the

whether an object is in use are referred to as

roots

. When an object is no longer in use, it is called a garbage

object. Dead is an alternative adjective used to describe a garbage object. Garbage collection is the process of

collecting all dead objects in the heap memory.

There are two major techniques to identify garbage objects. They are tracing and reference counting.

Tracing involves marking all the objects in the heap that are in use by going through all the chains of

references from the roots. Once the marking is done, all unmarked objects in the heap are considered to be

garbage and deleted by the garbage collection process. Reference counting attaches a counter to each object

that counts the number of incoming references. When the counter reaches zero, the object is garbage. All

available garbage collection algorithms are some combination of these two techniques.

1.2 Motivation and Objectives

Developers are using programming languages with automatic memory management for several benefits

including no dangling pointers, no double-free bugs, no memory leaks, high productivity, and less code to

write [13].

Dangling Pointers:

Dangling pointers occur when objects are deleted, but the addresses pointing to those

objects are not cleared. These references are very dangerous as one does not know what the reference points

to. If the reference points to some other object, then computation might yield an undesired output or may

crash because of a mismatch in type information with new object allocated in the same address.

Double-free bugs:

Double free bugs is an issue that happens when an object allocated in the heap is deleted

at some point in time and programmer deletes the object again at the same address. It is very risky and can

lead to dangling pointers in some cases. If no new objects are created at the same address, then the delete

might crash the program. In other extreme case where a new object is allocated at the same address, it deletes

the object and creates dangling pointers for all the objects that hold a reference to the deleted object.

2

Memory leaks:

Memory leaks occur when objects are not deleted, but the address pointing to those objects

are cleared. The unused objects are not deleted and they consume memory which could be reused for other

objects. This inefficient organization of heap can affect the runtime of an application heavily. This affects the

memory allocator as there will be less memory available to allocate and program crashes when there is no

more memory available to allocate memory for more objects.

The advantages of automatic memory management is important for successful execution of any application.

This thesis focuses on concurrent programs and automatic memory management requirement in concurrent

programs.

Concurrent program is a term used to describe multiple threads / processes running simultaneously to

perform some computation. There are two different environments where concurrent programs are used: shared

memory and distributed memory systems. Shared memory systems contain multiple processors / multi-core

processors sharing a common memory and execute the computation through threads / processes. Threads

communicate using shared memory and may use atomic instructions or traditional locks to execute some

critical sections of the program. In a shared memory environment, concurrent programmers use smart pointers

to manually manage memory. Smart pointers are a manual reference counting technique implemented by the

application programming interface. Distributed memory systems contain multiple processors with dedicated

memory and processors are separated by some physical distance and connected via a network. In distributed

memory systems, processes execute on each processor and communicate by sending messages across the

network to perform the computation. Both of the environments contain programs that run concurrently and

require some form of communication to perform the desired computation. The environments are distinguished

by communcation technique and memory use. Both of the environments uses automatic memory management

to help developers build applications with the above-mentioned benefits.

When concurrent programs are written by developers, manual memory management is extremely difficult

and error-prone. When multiple threads access a common object, dangling pointers are a common scenario

due to the incomplete information about ownership. Double-free bugs can occur just as frequently as the

dangling pointers. Smart pointers are the defacto standard to avoid memory management issues in concurrent

programs. These smart pointers do not guarantee complete garbage collection. The use of cyclic objects

in heap memory requires extreme care in a manually managed concurrent program. It is carefully avoided

3

by all programmers usually to make the program memory leak free. The above reasons explain difficulties

of automatic memory management in the concurrent programming environment and the necessity for

high-quality automatic memory management in concurrent programming environments.

Beyond solving the garbage collection problem in a concurrent programming environment, the objectives

of this thesis are to design garbage collectors for shared and distributed memory system that satisfy the

following properties :

1. Concurrent garbage collectors (Less pause time)

2. Multi-collector garbage collection (Parallel)

3. No global synchronization (High Throughput)

4. Locality-based garbage collection

5. Scalable

6. Prompt

7. Safety

8. Complete

There are no garbage collection algorithms available for shared and distributed memory systems that

satisfy all the above-mentioned properties. This thesis focuses on designing a novel garbage collector with

significant improvements. Apart from garbage collection, the ideas presented in the thesis will be of use

to solve other theoretical problems in distributed computing like dynamic reachability, breaking cycles,

detecting cycles, data aggregation in a sensor network, broken network detection in a sensor networks.

Concurrent:

Concurrent garbage collectors work simultaneously with the application. The application

will not experience any pauses during execution to scan memory. They have negligible to zero pause time.

There are a handful of collectors that satisfy this property.

Multi-collector Garbage Collection :

Multi-collector garbage collectors are collectors that have multiple

independent garbage collector threads / processes that work independently on the heap to identify garbage.

4

These collectors utilize multiple processors when compute resources are being underutilized. In shared

memory systems, when multiple processors are underutilized, this property helps to utilize processor cycles

better. Apart from the processor utilization, the throughput of garbage collectors can be increased by multi-

collector garbage collectors. These multi-collector garbage collector threads / processes communicate among

themselves through memory in shared memory systems and through messages in distributed memory systems.

Global Synchronization:

Multi-collector garbage collectors are common in distributed memory systems.

When multi-collector garbage collectors are used, conventional solutions require a global barrier among

all the collectors to communicate and share the information to identify garbage objects. With no global

synchronization, the throughput of garbage collectors will be high and garbage collector algorithm scales

well with this property.

Locality-based garbage collection:

Detecting garbage is a global predicate. Traditionally, popular meth-

ods of garbage collection involve computing garbage as the global predicate. This requires scanning the

entire heap for precise evaluation of the predicate. If garbage object can be detected locally based on the

small set of objects, then the garbage detection process can save a lot of time spent by not tracing all objects

in the heap.

Scalable:

Shared memory and distributed memory systems does not have a scalable garbage collectors up

until now. In shared memory systems, scalability is an issue due to most collectors designed are primarily

single collector concurrent algorithm. In distributed system, all available garbage collectors require a form of

global synchronization to detect garbage. To meet future demands of scalable garbage collection, collectors

must be multi-collector locality-based with no global synchronization.

Promptness:

With global garbage collection, the cost of scanning entire heap often is very expensive. To

reduce the high price for global scanning of the heap, garbage collection is initiated when the threshold is

met. The promptness property helps to keep heap memory free of floating garbage in a timely manner. This

property helps to avoid global scanning and also quick availability of free memory for allocation.

5

Safety:

The safety property is the most crucial part of garbage collection. This avoids any dangling pointers

and double free bugs. The property guarantees that every garbage collector will delete only garbage objects.

While most garbage collector in literature satisfies this property, there are some that cannot satisfy this

property. This thesis requires garbage collector to be safe in the multi-collector environment which is

challenging given that there is no global synchronization. In a distributed memory system, this property is

very difficult to satisfy as the garbage collector cannot get the global snapshot of a heap at any point in time.

A locality-based algorithm works by using information obtained only from neighbors. Evaluating a global

predicate using local information is challenging by all means. This thesis guarantees the safety of collectors

designed for both environments.

Completeness:

Completeness guarantees that a garbage collector will collect all garbage objects in the

heap, thereby removing all memory leaks in the heap. Reference counting garbage collectors are well known

for incomplete garbage collection due to their inability to collect cyclic garbage. This property is challenging

as the locality-based collectors usually use reference counting. Collectors designed for both environments

use reference counting and tracing techniques to solve the problem and guarantees the garbage collectors are

complete.

1.3 Contributions

The contributions of this thesis are listed below :

1.

Novel hybrid reference counting and tracing techniques are used to collect any garbage including

cyclic garbage.

2. First known concurrent multi-collector shared memory garbage collector.

3.

Well known issues in Brownbridge garbage collectors are fixed and complete and a safe garbage

collector is designed.

4.

Theoretically, our shared memory garbage collector detects garbage in fewer traversals than other state

of the art concurrent reference counted garbage collector.

6

5.

This thesis presents the first known locality-based concurrent multi-collector scalable distributed

garbage collector with no global synchronization. The thesis contains a complete proof of the garbage

collector.

6.

The distributed garbage collector introduces a novel weight based approach to convert the graph into a

directed acyclic graph and thereby detects cycles faster.

7. The designed garbage collector algorithms finishes garbage collection in linear time.

1.4 Dissertation Organization

The preliminary ideas required to understand the thesis are presented in chapter 2. Chapter 2 introduces

the abstract version of the garbage collection problem, a brief literature review of garbage collectors in

general, and in detail, it explains Brownbridge garbage collector and explains the failures of Brownbridge

garbage collector. The understanding of Brownbridge is essential for this thesis as our algorithms correct and

extend the approach. Chapter 3 contains a brief introduction of shared memory garbage collectors and related

works of the particular class of garbage collectors used in shared memory systems. Shared memory single

collector and multi-collector algorithms are described in chapter 3. Apart from describing the algorithm,

chapter also contains the proofs of safety and completeness with simulated experimental results. The chapter

also proves the linearity in the number of operations to detect garbage. Chapter 4 explains the overview

of distributed garbage collection, existing algorithms and their issues. Single collector and multi-collector

distributed garbage collector algorithms are explained in abstract and a novel technique is introduced to prove

termination of garbage collection, safety, completeness and time complexity using the isolation property. The

chapter also includes the pseudocode of the algorithm. The distributed garbage collector with scalability and

other guarantees mentioned above is a theoretical leap. Chapter 5 captures the overall contributions of the

thesis in broad view with possible future work.

7

Chapter 2

Preliminaries

2.1 Abstract Graph Model

Basic Reference Graph:

We model the relationship among various objects and references in memory

through a directed graph

G = (V, E)

, which we call a reference graph. The graph

G

has a special node

R

,

which we call the root. Node

R

represents global and stack pointer variables, and thus does not have any

incoming edges. Each node in

G

is assumed to contain a unique ID. All adjacent nodes to a given node in

G

are called

neighbors

, and denoted by

Γ

. The

in-neighbors

of a node

x ∈ G

can be defined as the set of

nodes whose outgoing edges are incident on

x

, represented by

Γ

in

(x)

. The

out-neighbors

of

x

can be defined

as the set of nodes whose incoming edges originate on

x

, represented by

Γ

out

(x)

. Note that each node

x ∈ G

does not know Γ

in

(x) at any point in time.

Garbage Collection Problem:

All nodes in

G

can be classified as either live (i.e., not garbage) or dead

(i.e., garbage) based on a property called reachability. Live and dead nodes can be defined as below:

Reachable(y, x) = x ∈ Γ

out

(y) ∨ (x ∈ Γ

out

(z) | Reachable(y, z))

Live(x) = Reachable(R, x)

Dead(x) = ¬Live(x)

We allow the live portion of

G

, denoted as

G

0

, to be mutated while the algorithm is running, and we refer

to the source of these mutations as the Adversary (Mutator). The Adversary can create nodes and attach them

to

G

0

, create new edges between existing nodes of

G

0

, or delete edges from

G

0

. Moreover, the Adversary can

perform multiple events (creation and deletion of edges) simultaneously. The Adversary, however, can never

mutate the dead portion of the graph G

00

= G\G

0

.

Axiom 1 (Immutable Dead Node). The Adversary cannot mutate a dead node.

Axiom 2 (Node Creation). All nodes are live when they are created by the Adversary.

From Axiom 3, it follows that a node that becomes dead will never become live again.

Each node experiencing deletion of an incoming edge has to determine whether it is still live. If a node

detects that it is dead then it must delete itself from the graph G.

8

Definition 2.1.1

(Garbage Collection Problem)

.

Identify the dead nodes in the reference graph

G

and delete

them.

2.2 Literature Review

McCarthy invented the concept of Garbage Collection in 1959 [

48

]. His works focused on an indirect

method of collecting garbage which is called Mark Sweep or tracing collector. Collins designed a new

method called reference counting, which is a more direct method to detect garbage [

16

]. At the time when

garbage collectors were designed, several of them could be used in practice due to bugs in the algorithms.

Dijkstra et al [

17

] modeled a tricolor tracing garbage collector that provided correctness proofs. This work

redefined the way the collectors should be designed and how one can prove the properties of the collectors.

Haddon et al [

23

] realized the fragmentation issues in the Mark Sweep algorithm and designed a Mark

Compact algorithm where objects are moved when traced and arranged in order to accumulate all the free

space together. Fenichel et al [

18

] and Cheney [

14

] provided new kind of algorithm called a copying collector

based on the idea of live objects. Copying collectors divide memory into two halves and the collector moves

live objects from one section to the other section and cleans the other section. This method uses only half

of the heap at any given time and requires copying the object which is very expensive. Based on Foderaro

and Fateman [

19

] observation, 98% of the objects collected were allocated after the last collection. Several

such observations were made and reported in [

69

,

62

]. Based on the above observations, Appel designed a

simple generation garbage collection [

2

]. Generational garbage collection utilizes idea from the all classical

method mentioned above and provides a good throughput collector. It divides the memory into at least two

generation. The old generation occupies a large portion of the heap and the young generation takes a small

portion of the heap. It exploits the weak generational hypothesis which says young objects die soon. In spite

of considerable overhead due to inter-generational pointers, promotions, and full heap collection once in a

while, this garbage collection technique dominates alternatives in terms of performance and is widely used in

many run-time systems.

While most of the methods considered until now are an indirect method of collecting garbage through

tracing, the other form of collection is reference counting. McBeth’s [

46

] article on the inability to collect

cyclic garbage by reference counting made the method less practical. Bobrow’s [

9

] solution to collect cyclic

garbage based on reference counting was the first hybrid collector which used both tracing and counting the

9

references. Hughes [

27

,

28

] identified the practical issues in the Bobrow’s algorithm. several attempts to fix

the issues in the algorithm appeared subsequently, e.g. [

21

,

9

,

39

]. In contrast to the approach followed in

[

21

,

9

,

39

] and several others, Brownbridge [

12

] proposed, in 1985, an algorithm to tackle the problem of

reclaiming cyclic structures using strong and weak pointers [

30

]. This algorithm relied on maintaining two

invariants: (a) there are no cycles in strong pointers and (b) all items in the object reference graph must be

strongly reachable from the roots.

A couple of years after the publication, Salkild [

61

] showed that Brownbridge’s algorithm [

12

] could

reclaim objects prematurely in some configurations, e.g. a double cycle. If the last strong pointer (or link) to

an object in one cycle but not the other was lost, Brownbridge’s method would incorrectly claim nodes from

the cycle. Salkild [61] corrected this problem by proposing that if the last strong link was removed from an

object which still had weak pointers, a collection process should re-start from that node. While this approach

eliminated the premature deletion problem, it introduced a potential non-termination problem.

Subsequently, Pepels et al. [

53

] proposed a new algorithm based on Brownbridge-Salkild’s algorithm and

solved the problem of non-termination by using a marking scheme. In their algorithm, they used two kinds of

marks: one to prevent an infinite number of searches, and the other to guarantee termination of each search.

Although correct and terminating, Pepels et al.’s algorithm is far more complex than Brownbridge-Salkild’s

algorithm and in some cyclic structures the cleanup cost complexity becomes at least exponential in the

worst-case [

30

]. This is due to the fact that when cycles occur, whole state space searches from each node in

the cyclic graph must be initiated, possibly many times. After Pepels et al.’s algorithm, we are not aware of

any other work on reducing the cleanup cost or complexity of the Brownbridge algorithm. Moreover, there is

no concurrent collection technique using this approach which can be applicable for the garbage collection in

modern multiprocessors.

Typical hybrid reference count collection systems, e.g. [

5

,

36

,

3

,

6

,

39

], which use a reference counting

collector combined with a tracing collector or cycle collector, must perform nontrivial work whenever a

reference count is decreased and does not reach zero. Trial deletion approach was studied by Christopher [

15

]

which tries to collect cycles by identifying groups of self-sustaining objects. Lins [

37

] used a cyclic buffer

to reduce repeated scanning of the same nodes in their Mark Scan algorithm for cyclic reference counting.

Moreover, in [

38

], Lins improved his algorithm from [

37

] by eliminating the scan operation through the use

of a Jump-stack data structure.

10

With the advancement of multiprocessor architectures, reference counting garbage collectors have become

popular because they do not require all application threads to be stopped before the garbage collection

algorithm can run [

36

]. Recent work in reference counting algorithms, e.g. [

6

,

36

,

5

,

3

], try to reduce

concurrent operations and increase the efficiency of reference counting collectors. However, as mentioned

earlier, reference counting garbage collectors cannot collect cycles [

46

]. Therefore, concurrent reference

counting collectors [

6

,

36

,

5

,

3

,

51

,

39

] use other techniques, e.g. they supplement the reference counter

with a tracing collector or a cycle detector, together with their concurrent reference counting algorithm. For

example, the reference counting collector proposed in [

51

] combines the sliding view reference counting

concurrent collector of [

36

] with the cycle collector of [

5

]. Recently, Frampton provides a detailed study of

cycle collection in his Ph.D. thesis [20].

Apple’s ARC memory management system makes a distinction between “strong” and “weak” pointers,

similar to what Brownbridge describes. In the ARC memory system, however, the type of each pointer must

be specifically designated by the programmer, and this type will not change during the program’s execution.

If the programmer gets the type wrong, it is possible for ARC to have strong cycles as well as prematurely

deleted objects. With our system, the pointer type is automatic and can change during the execution. Our

system protects against these possibilities, at the cost of lower efficiency.

There exist other concurrent techniques optimized for both uniprocessors as well as multiprocessors.

Generational concurrent garbage collectors were also studied, e.g. [

58

]. Huelsbergen and Winterbottom [

25

]

proposed an incremental algorithm for the concurrent garbage collection that is a variant of Mark Sweep

collection scheme first proposed in [

49

]. Furthermore, garbage collection is also considered for several other

systems, namely real-time systems and asynchronous distributed systems, e.g. [

55

,

67

]. Concurrent collectors

are gaining popularity. The concurrent collector described in Bacon and Rajan [

5

] can be considered to

be one of the most efficient reference counting concurrent collectors. The algorithm uses two counters per

object, one for the actual reference count and other for the cyclic reference count. Apart from the number of

the counters used, the cycle detection strategy requires a minimum of two traversals of the cycle when the

cycle is reachable and eleven cycle traversals when the cycle is garbage.

Distributed systems require garbage collectors to reclaim memory. Distributed Garbage Collector(DGC)

requires an algorithm to be designed in a different way than the other approaches. Most of the algorithm

discussed above cannot be applicable to the distributed setting. Bevan[

8

] proposed the use of Weighted

11

Reference Counting as an efficient solution to DGC. Each reference has two weights: a partial weight and a

total weight. Every reference creation halves the partial weight. The drawback of this method is an application

cannot have more than 32 or 64 references based on the architecture. Piquer[

54

] suggests an original solution

to the previous approach. Both of the above-mentioned methods use straight reference counting, so they

cannot reclaim cyclic structures and they are not resilient to message failures. Shapiro et al [

64

] provided an

acyclic truly distributed garbage collector. Although this method is not cyclic distributed garbage collector,

the work lays a foundation for a model of distributed garbage collection. A standard approach to a distributed

tracing collector is to combine independent local, per-space collectors, with a global inter-space collector.

The main problem with distributed tracing is to synchronize the distributed mark phase with independent

sweep phase[

56

]. Hughes designed a distributed tracing garbage collector with a time-stamp instead of mark

bits[

26

]. The method requires the global GC to process nodes only when the application stops its execution

or requests a stop when collecting. This is not a practical solution for many systems. Several solutions based

on a centralized collector and moving objects to one space are proposed[

44

,

42

,

40

,

33

,

65

]. Recently, a

mobile actor-based distributed garbage collector was designed[

68

]. Mobile actor DGCs cannot be easily

implemented as an actor needs access to a remote site’s root set and actors move with huge memory payload.

All of the distributed garbage collection solutions have one or more of the problems: inability to detect

cycles, moving objects to one site for cycle detection, weak heuristics to compute cycles, no fault-tolerance,

centralized solution, stopping the world, not scalable, and failure to be resilient to message failures.

2.3 Cycle detection using Strong-Weak

Brownbridge classifies the edges in the reference graph into strong and weak. The classification is based

on the invariant that there is no cycle of strong edges. So every cycle must contain at least one weak edge.

The notion of the strong edge is connected to the liveness of the node. So the existence of a strong incoming

edge for a node indicates that the node is live.

Strong edges form a directed acyclic graph in which every node is reachable from R. The rest of the

edges are classified as weak. This classification is not simple to compute as they themselves might require

complete scanning of the graph. To avoid complete scanning, some heuristic approaches are required. The

identification of cycle creating edges are the bottleneck of the classification problem. The edges are labeled

weak if it is created to a node that already exists in the memory. This heuristic guarantee that all cycles

12

contain at least one weak edge. But a node with weak incoming edge does not mean it is part of a cycle. The

heuristic helps the mutator to save time in classifying the edges. But the collectors require more information

about the topology of the subgraph to identify whether the subgraph is indeed garbage.

The heuristic idea mentioned above prevents the creation of strong cycles. The other technique required

by the Brownbridge algorithm is the ability to change the strong and weak edges whenever required by the

nodes. To solve this issue, Brownbridge used two boolean values, one for each edge in the source and one for

each node. For an edge to be strong, both the boolean values have to be same. So if a node wants to change

the type of its edges, a node changes the corresponding boolean value. A node will change all the incoming

edge types when it changes its boolean value. By changing the boolean value for an outgoing edge, a node

changes the type of the edge. The idea of toggling the edge types is an important piece of the Brownbridge

algorithm.

2.4 Brownbridge Garbage Collector

Brownbridge’s algorithm works for a system where the Adversary stops the world for the garbage collection

process. Each node contains two counters each corresponding to the type of incoming edge. When the graph

mutations are serialized, any addition / deletion of edge increments / decrements the corresponding counters

in the target node. An addition event never makes a node garbage. A deletion event might make a node

garbage. If the edge deleted is weak, no further actions are taken except decrementing the counter. Only a last

strong incoming edge deletion to a node performs additional work. When the last strong incoming edge is

deleted and the counter is decremented, the node is considered garbage if there are no weak incoming edges.

If a node contains weak incoming edges after last strong incoming edge deletion, then the algorithm performs

a partial tracing to identify if it is cyclic garbage. Tracing starts by converting all the weak incoming edges of

the node into strong by toggling the boolean value. Once the boolean value is changed, the node swaps the

counters and identifies all the incoming edges as strong. To determine whether the subgraph is cyclic garbage,

the node weakens all its outgoing edges. The node marks itself that it has performed a transformation. By

weakening the outgoing edges, we mean converting all the outgoing edges of a node into weak edges. Some

nodes might lose all strong incoming edges due to this process. This propagates the weakening process.

When a marked node receives a weakening message it will decide it is garbage and deletes itself. Every

13

FIGURE 2.1. When root R deletes strong edge to A, through partial tracing A will have strong edge at the end.

weakening call propagates by switching the weak incoming edges into strong. This method determines if

there are any external references pointing to the node that lost the strong incoming edge.

Figure 2.1 shows an example graph where the Brownbridge technique will identify the external support to

a node and restore the graph. In figure 2.1, if the strong edge to A is deleted, A will convert all incoming

weak edges into strong and start weakening the outgoing edges. When B receives a weaken message, it

weakens the outgoing edge and then converts all weak incoming edges to strong. The process continues until

it reaches A again. When the process reaches A, A realizes now it has an additional strong incoming edge.

So the graph is recovered now. If there is no edge from E to A, then the whole subgraph A, B, C and D will

be deleted.

2.5 Pitfalls of Brownbridge Garbage Collection

The idea behind the Brownbridge method is to identify external support for the node that lost the last

strong incoming edge. If the external strong support to the subgraph comes from a node that did not loose its

last strong incoming edge, then the method fails to identify the support and prematurely deletes nodes.

In figure 2.2, when the root deletes the edge to A, A starts partial tracing. When the partial tracing reaches

back to A, D has additional strong support. But the algorithm only makes a decision based on A’s strong

incoming edge. So the A, B, C, D subgraph will be deleted prematurely.

14

FIGURE 2.2. When root R deletes strong edge to A, through partial tracing A will fail to identify liveness.

15

Chapter 3

Shared Memory Multi-processor Garbage Collection

3.1 Introduction

Garbage collection is an important productivity feature in many languages, eliminating a thorny set of

coding errors which can be created by explicit memory management [

46

,

5

,

3

,

6

,

30

,

49

,

16

]. Despite the

many advances in garbage collection, there are problem areas which have difficulty benefiting, such as

distributed or real-time systems; see [

4

,

55

,

67

]. Even in more mundane settings, a certain liveness in the

cleanup of objects may be required by an application for which the garbage collector provides little help, e.g.

managing a small pool of database connections.

The garbage collection system we propose is based on a scheme originally proposed by Brownbridge [

12

].

Brownbridge proposed the use of two types of pointers: strong and weak

1

. Strong pointers are required to

connect from the roots (i.e. references from the stack or global memory) to all nodes in the graph, and contain

no cycles. A path of strong links (i.e. pointers) from the roots guarantees that an object should be in memory.

Weak pointers are available to close cycles and provide the ability to connect nodes in arbitrary ways.

Brownbridge’s proposal was vulnerable to premature collection [

61

], and subsequent attempts to improve

it introduced poor performance (at least exponential cleanup time in the worst-case) [

53

]; details in Section

3.1.1. Brownbridge’s core idea, however, of using two types of reference counts was sound: maintaining

this pair of reference counts allows the system to remember a set of acyclic paths through memory so that

the system can minimize collection operations. For example, Roy et al. [

60

] used Brownbridge’s idea to

optimize scanning in databases. In this work we will show how the above problems with the Brownbridge

collection scheme can be repaired by the inclusion of a third type of counter, which we call a phantom count.

This modified system has a number of advantages.

Typical hybrid reference count and collection systems, e.g. [

5

,

36

,

3

,

6

,

39

], which use a reference counting

collector combined with a tracing collector or cycle collector, must perform nontrivial work whenever a

reference count is decreased and does not reach zero. The modified Brownbridge system, with three types of

1

These references are unrelated to the weak, soft, and phantom reference objects available in Java under Reference class.

16

reference counts, must perform nontrivial work only when the strong reference count reaches zero and the

weak reference count is still positive, a significant reduction [12, 30].

Many garbage collectors in the literature employ a generational technique, for example the generational

collector proposed in [

58

], taking advantage of the observation that younger objects are more likely to need

garbage collecting than older objects. Because the strong/weak reference system tends to make the links in

long-lived paths through memory strong, old objects connected to these paths are unlikely to become the

focus of the garbage collector’s work.

In other conceptions of weak pointers, if a node is reachable by a strong pointer and a weak pointer and

the strong pointer is removed, the node is garbage, and the node is deleted. In Brownbridge’s conception of

weak pointers, the weak pointer is turned into a strong pointer in an intelligent way.

In many situations, the collector can update the pointer types and prove an object is still live without

tracing through memory cycles the object may participate in. For example, when root

R1

is removed from

the graph shown in Fig. 3.1, the collector only needs to convert link 1 to strong and examine link 2 to prove

that object

A

does not need to be collected. What’s more, because the strength of the pointer depends on a

combination of states in the source and target, the transformation from strong to weak can be carried out

without the need for back-pointers (i.e. links that can be followed from target to source as well as source to

target).

This combination of effects, added to the fact that our collector can run in parallel with a live system,

may prove useful in soft real-time systems, and may make the use of “finalizers” on objects more practical.

These kinds of advantages should also be significant in a distributed setting, where traversal of links in the

collector is a more expensive operation. Moreover, when a collection operation is local, it has the opportunity

to remain local, and cleanup can proceed without any kind of global synchronization operation.

The contribution of this paper is threefold. Our algorithm never prematurely deletes any reachable object,

operates in linear in time regardless of the structure (any deletion of edges and roots takes only

O(N)

time

steps, where

N

is the number of edges in the affected subgraph), and works concurrently with the application,

without the need for system-wide pauses, i.e. “stopping the world.” This is in contrast to Brownbridge’s

original algorithm and its variants [

12

,

61

,

53

] which can not handle concurrency issues that arise in modern

multiprocessor architectures [60].

17

FIGURE 3.1. When root R1 is removed, the garbage collection algorithm only needs to trace link 2 to prove that object

A does not need to be collected.

Our algorithm does, however, add a third kind of pointer, a phantom pointer, which identifies a temporary

state that is neither strong nor weak. The addition of the space overhead offsets the time overhead in

Brownbridge’s work.

3.1.1 Most Related Work: Premature Collection, Non-termination, and Exponential Cleanup Time

Before giving details of our algorithm in Section 3.2 – which in turn is a modification of Brownbridge’s

algorithm and its variants [

12

,

61

,

53

] – we first describe the problems with previous variants. In doing so,

we follow the description given in the excellent garbage collection book due to Jones and Lins [30].

It was proven by McBeth [

46

] in early sixties that reference counting collectors were unable to handle

cyclic structures; several attempts to fix this problem appeared subsequently, e.g. [

21

,

9

,

39

]. We give details

in Section 3.1.2. In contrast to the approach followed in [

21

,

9

,

39

] and several others, Brownbridge [

12

]

proposed, in 1985, a strong/weak pointer algorithm to tackle the problem of reclaiming cyclic data structures

by distinguishing cycle closing pointers (weak pointers) from other references (strong pointers) [

30

]. This

algorithm relied on maintaining two invariants: (a) there are no cycles in strong pointers and (b) all items in

the graph must be strongly reachable from the roots.

Some years after the publication, Salkild [

61

] showed that Brownbridge’s algorithm [

12

] could reclaim

objects prematurely in some configurations, e.g. a double cycle. If the last strong pointer (or link) to an

18

object in one cycle but not the other was lost, Brownbridge’s method would incorrectly claim nodes from

the cycle. Salkild [61] corrected this problem by proposing that if the last strong link was removed from an

object which still had weak pointers, a collection process should re-start from that node. While this approach

eliminated the premature deletion problem, it introduced a potential non-termination problem.

Subsequently, Pepels et al. [

53

] proposed a new algorithm based on Brownbridge-Salkild’s algorithm and

solved the problem of non-termination by using a marking scheme. In their algorithm, they used two kinds of

mark: one to prevent an infinite number of searches, and the other to guarantee termination of each search.

Although correct and terminating, Pepels et al.’s algorithm is far more complex than Brownbridge-Salkild’s

algorithm and in some cyclic structures the cleanup cost complexity becomes at least exponential in the

worst-case [

30

]. This is due to the fact that when cycles occur, whole state space searches from each node in

the cyclic graph must be initiated, possibly many times. After Pepels et al.’s algorithm, we are not aware of

any other work on reducing the cleanup cost or complexity of the Brownbridge algorithm. Moreover, there is

no concurrent collection technique using this approach which can be applicable for the garbage collection in

modern multiprocessors.

The algorithm we present in this paper removes all the limitations described above. Our algorithm does

not perform searches as such. Instead, whenever a node loses its last strong reference and still has weak

references, it marks all affected links as phantom. When this process is complete for a subgraph, the system

recovers the affected subgraph by converting phantom links to either strong or weak. Because this process

is a transformation from weak or strong to phantom, and from phantom to weak or strong, it has at most

two steps and is, therefore, manifestly linear in the number of links, i.e. it has a complexity of only

O(N)

time steps, where

N

is the number of edges in the affected subgraph. Moreover, in contrast to Brownbridge’s

algorithm, our algorithm is concurrent and is suitable for multiprocessors.

3.1.2 Other Related Work

Garbage collection is an automatic memory management technique which is considered to be an important

tool for developing fast as well as reliable software. Garbage collection has been studied extensively in

computer science for more than five decades, e.g., [

46

,

12

,

61

,

53

,

5

,

3

,

6

,

30

]. Reference counting is a

widely-used form of garbage collection whereby each object has a count of the number of references to

it; garbage is identified by having a reference count of zero [

5

]. Reference counting approaches were first

19

developed for LISP by Collins [

16

]. Improved variations were proposed in several subsequent papers, e.g.

[

21

,

29

,

30

,

39

,

36

]. We direct readers to Shahriyar et al. [

63

] for the valuable overview of the current state

of reference counting collectors.

It was noticed by McBeth [

46

] in early sixties that reference counting collectors were unable to handle

cyclic structures. After that several reference counting collectors were developed, e.g. [

21

,

9

,

37

,

38

]. The

algorithm in Friedman [

21

] dealt with recovering cyclic data in immutable structures, whereas Bobrow’s algo-

rithm [

9

] can reclaim all cyclic structures but relies on the explicit information provided by the programmer.

Trial deletion approach was studied by Christopher [

15

] which tries to collect cycles by identifying groups of

self-sustaining objects. Lins [

37

] used a cyclic buffer to reduce repeated scanning of the same nodes in their

mark-scan algorithm for cyclic reference counting. Moreover, in [

38

], Lins improved his algorithm from [

37

]

by eliminating the scan operation through the use of a Jump-stack data structure.

With the advancement of multiprocessor architectures, reference counting garbage collectors have become

popular because they do not require all application threads to be stopped before the garbage collection

algorithm can run [

36

]. Recent work in reference counting algorithms, e.g. [

6

,

36

,

5

,

3

], try to reduce

concurrent operations and increase the efficiency of reference counting collectors. Since our collector is a

reference counting collector, it can potentially benefit from the same types of optimizations discussed here.

We leave that, however, to a future work.

However, as mentioned earlier, reference counting garbage collectors cannot collect cycles [

46

]. Therefore,

concurrent reference counting collectors [

6

,

36

,

5

,

3

,

51

,

39

] use other techniques, e.g. they supplement

the reference counter with a tracing collector or a cycle detector, together with their concurrent reference

counting algorithm. For example, the reference counting collector proposed in [

51

] combines the sliding

view reference counting concurrent collector of [

36

] with the cycle collector of [

5

]. Our collector has some

similarity with these, in that our

P hantomization

process may traverse many nodes. It should, however,

trace fewer nodes and do so less frequently. Recently, Frampton provides a detailed study of cycle collection

in his PhD thesis [20].

Herein we have tried to cover a sampling of garbage collectors that are most relevant to our work.

Apple’s ARC memory management system makes a distinction between “strong” and “weak” pointers,

similar to what we describe here. In the ARC memory system, however, the type of each pointer must be

specifically designated by the programmer, and this type will not change during the program’s execution.

20

If the programmer gets the type wrong, it is possible for ARC to have strong cycles as well as prematurely

deleted objects. With our system, the pointer type is automatic and can change during the execution. Our

system protects against these possibilities, at the cost of lower efficiency.

There exist other concurrent techniques optimized for both uniprocessors as well as multiprocessors.

Generational concurrent garbage collectors were also studied, e.g. [

58

]. Huelsbergen and Winterbottom [

25

]

proposed an incremental algorithm for the concurrent garbage collection that is a variant of mark-and-sweep

collection scheme first proposed in [

49

]. Furthermore, garbage collection is also considered for several other

systems, namely real-time systems and asynchronous distributed systems, e.g. [55, 67].

Concurrent collectors are gaining popularity. The concurrent collector described in Bacon and Rajan [

5

]

can be considered to be one of the more efficient reference counting concurrent collectors. The algorithm

uses two counters per object, one for the actual reference count and other for the cyclic reference count. Apart

from the number of the counters used, the cycle detection strategy requires a minimum of two traversals of

cycle when the cycle is reachable and eleven cycle traversals when the cycle is garbage.

3.1.3 Paper Organization

The rest of the paper is organized as follows. We present our strong/weak/phantom pointer based concurrent

garbage collector in Section 3.2 with some examples. In Section 3.4, we sketch proofs of its correctness and

complexity properties. In Section 3.5, we give some experimental results. We conclude the paper with future

research directions in Section 3.6 and a short discussion in Section 3.7. Detailed algorithms may be found in

the appendix.

3.2 Algorithm

In this section, we present our concurrent garbage collection algorithm. Each object in the heap contains

three reference counts: the first two are the strong and weak, the third is the phantom count. Each object also

contains a bit named

which

(Brownbridge [

12

] called it the “strength-bit”) to identify which of the first two

counters is used to keep track of strong references, as well as a boolean called

phantomized

to keep track

of whether the node is phantomized. Outgoing links (i.e., pointers) to other objects must also contain (1)

a

which

bit to identify which reference counter on the target object they increment, and (2) a

phantom

boolean to identify whether they have been phantomized. This data structure for each object can be seen in

the example given in Fig. 3.2.

21

Local creation of links only allows the creation of strong references when no cycle creation is possible.

Consider the creation of a link from a source object

S

to a target object

T

. The link will be created strong

if (i) the only strong links to

S

are from roots i.e. there is no object

C

with a strong link to

S

; (ii) object

T

has no outgoing links i.e. it is newly created and its outgoing links are not initialized; and (iii) object

T

is

phantomized, and S is not. All self-references are weak. Any other link is created phantom or weak.

To create a strong link, the

which

bit on the link must match the value of the

which

bit on the target

object. A weak link is created by setting the

which

bit on the reference to the complement of the value of

the which bit on the target.

When the strong reference count on any object reaches zero, the garbage collection process begins. If the

object’s weak reference count is zero, the object is immediately reclaimed. If the weak count is positive, then

a a sequence of three phases is initiated:

P hantomization

,

Recovery

, and

CleanUp

. In

P hantomization

,

the object toggles its

which

bit, turning its incoming weak reference counts to strong ones, and phantomizes

its outgoing links.

Phantomizing a link transfers a reference count (either strong or weak), to the phantom count on the target

object. If this causes the object to lose its last strong reference, then the object may also phantomize, i.e.

toggle its

which

bit (if that will cause it to gain strong references), and phantomizes all its outgoing links.

This process may spread to a large number of target objects.

All objects touched in the process of a phantomization that were able to recover their strong references

by toggling their

which

bit are remembered and put in a “recovery list”. When phantomization is finished,

Recovery begins, starting with all objects in the recovery list.

To perform a recovery, the system looks at each object in the recovery list, checking to see whether it still

has a positive strong reference count. If it does, it sets the

phantomized

boolean to false, and rebuilds its

outgoing links, turning phantoms to strong or weak according to the rules above. If a phantom link is rebuilt

and the target object regains its first strong reference as a result, the target object sets its

phantomized

boolean to false and attempts to recover its outgoing phantom links (if any). The recovery continues to rebuild

outgoing links until it terminates.

Finally, after the recovery is complete,

CleanUp

begins. The recovery list is revisited a second time. Any

objects that still have no strong references are deleted.

22

Note that all three of these phases,

P hantomization

,

Recovery

, and

CleanUp

are, by their definitions,

linear in the number of links; we prove this formally in Theorem 2 in Section 3.4. Links can undergo only

one state change in each of these phases: strong or weak to phantom during

P hantomization

, phantom to

strong or weak during Recovery, and phantom to deleted in CleanU p.

We now present some examples to show how our algorithm performs collection in several real word

scenarios.

3.2.1 Example: A Simple Cycle

In Fig. 3.2 we see a cyclic graph with three nodes. This figure shows the counters, bits, and boolean values

in full detail to make it clear how these values are used within the algorithm. Objects are represented with

circles, links have a pentagon with state information at their start and an arrow at their end.

In Step 0, the cycle is supported by a root, a reference from stack or global space. In Step 1, the root

reference is removed, decrementing the strong reference by one, and beginning a

P hantomization

. Object

C toggles its which pointer and phantomizes its outgoing links. Note that toggling the which pointer causes

the link from A to C to become strong, but nothing needs to change on A to make this happen.

In Step 2, object B also toggles its which bit, and phantomizes its outgoing links. Likewise, in Step 3,

object A phantomizes, and the P hantomization phase completes.

Recovery

will attempt to unphantomize objects A, B, and C. None of them, however, have any strong

support, and so none of them recover.

Cleanup happens next, and all objects are reclaimed.

3.2.2 Example: A Doubly-Linked List

The doubly linked list depicted in Fig. 3.3 is a classic example for garbage collection systems. The structure

consists of 6 links, and the collector marks all the links as phantoms in 8 steps.

This figure contains much less detail than Fig. 3.2, which is necessary for a more complex figure.

3.2.3 Example: Rebalancing A Doubly-Linked List

Fig. 3.4 represents a worst case scenario for our algorithm. As a result of losing root

R1

, the strong links

are pointing in exactly the wrong direction to provide support across an entire chain of double links. During

P hantomization

, each of the objects in the list must convert its links to phantoms, but nothing is deleted.

23

counters

phant.:

which:

F

phant.:

which:

counters

phant.:

which:

phant.:

which:

counters

phant.:

which:

phant.:

which:

Root

F

1

0

1

0

0

F

F

F

F

0

0

0

1

1

0

0

0

0

1

Weak

Strong

Strong

Strong

counters

phant.:

which:

F

phant.:

which:

counters

phant.:

which:

phant.:

which:

counters

phant.:

which:

phant.:

which:

T

0

0

1

0

T

F

F

F

0

0

0

1

0

0

1

0

0

1

Strong

Strong

Phantom

1

counters

phant.:

which:

T

phant.:

which:

counters

phant.:

which:

phant.:

which:

counters

phant.:

which:

phant.:

which:

T

0

0

1

0

T

T

F

F

0

0

0

1

0

0

1

0

1

0

Strong

Phantom

Phantom

1

counters

phant.:

which:

T

phant.:

which:

counters

phant.:

which:

phant.:

which:

counters

phant.:

which:

phant.:

which:

T

0

1

1

0

T

T

T

T

1

0

0

1

0

0

1

0

1

0

Phantom

Phantom

Phantom

0

Step 0

Step 1

Step 2

Step 3

A

A

A

A

B

B

B

B

C

C

C

C

FIGURE 3.2. Reclaiming a cycle with three objects

FIGURE 3.3. Doubly-linked list

24

FIGURE 3.4. Rebalancing a doubly-linked list

P hantomization

is complete in the third figure from the left, and

Recovery

begins. The fourth step in the

figure, when link 6 is converted from phantom to weak marks the first phase of the recovery.

3.2.4 Example: Recovering Without Detecting a Cycle

In Fig. 3.1 we see the situation where the collector recovers from the loss of a strong link without searching

the entire cycle. When root

R1

is removed, node

A

becomes phantomized. It turns its incoming link (link

1

)

to strong, and phantomizes its outgoing link (link

2

), but then the phantomization process ends. Recovery is

successful, because

A

has strong support, and it rebuilds its outgoing link as weak. At this point, collection

operations are finished.

Unlike the doubly-linked list example above, this case describes an optimal situation for our garbage

collection system.

3.3 Concurrency Issues

This section provides details of the implementation.

3.3.1 The Single-Threaded Collector

There are several methods by which the collector may be allowed to interact concurrently with a live

system. The first, and most straightforward implementation, is to use a single garbage collection thread

to manage nontrivial collection operations. This technique has the advantage of limiting the amount of

computational power the garbage collector may use to perform its work.

For the collection process to work, phantomization must run to completion before recovery is attempted,

and recovery must run to completion before cleanup can occur. To preserve this ordering in a live system,

25

whenever an operation would remove the last strong link to an object with weak or phantom references, the

link is instead transferred to the collector, enabling it to perform phantomization at an appropriate time.

After the strong link is processed, the garbage collector needs to create a phantom link to hold onto the

object while it performs its processing, to ensure the collector itself doesn’t try to use a deleted object.

Another point of synchronization is the creation of new links. If the source of the link is a phantomized

node, the link is created in the phantomized state.

With these relatively straightforward changes, the single-threaded garbage collector may interact freely

with a live system.

3.3.2 The Multi-Threaded Collector

The second, and more difficult method, is to allow the collector to use multiple threads. In this method,

independent collector threads can start and run in disjoint areas of memory. In order to prevent conflicts from

their interaction, we use a simple technique: whenever a link connecting two collector threads is phantomized,

or when a phantom link is created by the live system connecting subgraphs under analysis by different

collector threads, the threads merge. A merge is accomplished by one thread transferring its remaining work

to the other and exiting. To make this possible, each object needs to carry a reference to the collection threads

and ensure that this reference is removed when collection operations are complete. While the addition of a

pointer may appear to be a significant increase in memory overhead, it should be noted that the pointer need

not point directly to the collector, but to an intermediate object which can carry the phantom counter, as well

as other information if desired.

An implementation of this parallelization strategy is given in pseudocode in the appendix.

3.4 Correctness and Algorithm Complexity

The garbage collection problem can be modeled as a directed graph problem in which the graph has a

special set of edges (i.e. links) called

roots

that come from nowhere. These edges determine if a node in

the graph is reachable or not. A node X is said to be reachable if there is a path from any root to a node X

directly or transitively. Thus, the garbage collection problem can be described as removing all nodes in the

graph that are not reachable from any roots.

Our algorithm uses three phases to perform garbage collection. The three phases are