ACS Ventures, LLC –

Bridging Theory & Practice

Submitted By:

Chad W. Buckendahl, Ph.D.

702.586.7386

cbuckendahl@acsventures.com

11035 Lavender Hill Drive, Suite 160-433 · Las Vegas, NV 89135 | www.acsventures.com

Conducting a Content Validation Study for the

California Bar Exam

Final Report

October 4, 2017

Contents

Executive Summary
Introduction
  Current Examination Design
  Study Purpose
Procedures
  Panelists
  Workshop Activities
    Orientation
    Content Validity Judgments
Analysis and Results
  Content Sampling Across Years
Evaluating the Content Validation Study
  Procedural
  Internal
  External
  Utility
  Process Evaluation Results
Gap Analysis
Conclusions and Next Steps
References
Appendix A – Panelist Information
Appendix B – Content Validation Materials and Data
Appendix C – Evaluation Comments

Executive Summary

The California Bar Exam recently undertook a content validation study to evaluate the alignment of content

and cognitive complexity on their exams to the results of a national job analysis. This study involved gathering

judgments from subject matter experts (SMEs) following a standardized process for evaluating examination

content, discussing judgments made by the SMEs, summarizing these judgments, and evaluating the

representation of content on the examination.

In this process, content validation judgments for the assessments were collected on two dimensions – content

match and cognitive complexity. The Written and Multistate Bar Exam (MBE) components of the examination

were evaluated for their match to the results of the National Conference of Bar Examiners’ (NCBE) 2012 job

analysis in terms of content and cognitive complexity as defined by an adaptation of Webb’s (1997) Depth of

Knowledge (DOK). For the constructed response items (i.e., essay questions, performance task), score points

specified in the scoring rubric were evaluated separately to acknowledge the potential for differential

alignment evidence (i.e., that different aspects of the scoring criteria may measure different knowledge, skills,

or abilities). Because MBE items were not available for the study, the subject areas as described in the publicly

available content outline were reviewed and evaluated based on their proportional contribution to the

examination.

Summary results suggested that all content on the examination matched with job-related expectations for the

practice of law. The cognitive complexity for the written component of the examination as measured by DOK

was also consistent with the level of cognitive complexity (e.g., analysis vs. recall) expected of entry-level

attorneys. In addition, a review of the content sampling of the examination over time suggests that most

content on the examination is consistent with content expected for entry level practice. The sampling plan

and the current representation of knowledge and skills when considering the combination of the Written and

MBE components of the examination suggest stable representation year to year. This is discussed in more

detail in the body of the report. However, there are opportunities for improvement in both the content

representation and sampling plan of the existing subject areas.

Results from the judgment tasks and qualitative feedback from panelists also suggested some formative

opportunities for improvement in the structure and representation of content on the examination that could

be considered. As recommended next steps for the California Bar Examination in its evaluation of its design

and content, the results of the gap analysis and feedback from panelists provide a useful starting point for

further discussion. Specifically, from the results of the national survey, skills and tasks were generally

interpreted as more generalizable than many of the knowledge domains. Given the diversity of subject areas

in the law, this is not surprising. At the same time, it may also suggest that a greater emphasis on skills could

be supported in the future. To answer this question, further study is warranted. This additional study would

begin with a program design that leads to a job analysis for the practice of law in California. As an examination

intended to inform a licensure decision, the focus of the measurement of the examination needs to be on

practice and not on the education or training programs. Through this combination of program design and job

analysis, results would inform and provide evidence for decisions about the breadth and depth of

measurement on the examination along with the relative emphasis (e.g., weighting) of different components.

While the results of this study provided evidence to support the current iteration of the examination, there

are also formative opportunities for the program to consider in a program redesign. Specifically, the current

design and format for the California Bar Examination has been in place for many years. Feedback from the

content validation panelists suggested that there are likely subject areas that could be eliminated or

consolidated to better represent important areas needed by all entry-level practitioners. From a design

perspective, it may be desired to define the components of the examination as a combination of a candidate’s

competency in federal law, California-specific law, and job-related lawyer skills. Further, if the MBE continues

to be included as part of the California Bar Examination, it would be important to be able to review the items

on a recently operational form (or forms) of the test to independently evaluate the content and cognitive

complexity of the items. If California is unable to critically review this component of their program, it

should prompt questions about whether it is appropriate to continue to include it as part of their examination.

Similarly, such a redesign activity would offer the program an opportunity to evaluate the assessment item

types of the examination (e.g., multiple choice, short answer, extended response), scoring policies and

practices for human scored elements (e.g., rubric development, calibration, evaluation of graders), alternative

administration methods for components (e.g., linear on the fly, staged adaptive, item level adaptive), and

alternative scoring methods for constructed response (e.g., automated essay scoring). Advances in testing

practices and technologies as well as the evolution of the practice of law since the last program design activity

suggest that this interim study may facilitate additional research questions. As an additional resource about

the current practices within credentialing programs, interested readers are encouraged to consult

Davis-Becker and Buckendahl (2017) or Impara (1995).

For licensure examination programs, in terms of evidence to define content specifications, the primary basis

for evidence of content validity comes from the results of a job analysis that provides information about the

knowledge, skills, and abilities for entry-level practitioners. Although the results of the 2012 NCBE job analysis

were used for this study, it would be appropriate for the program to conduct a state-specific study as is done

for other occupations in California to then be used to develop and support a blueprint for the examination.

The specifications contained in the blueprint are intended to ensure consistent representation of content and

cognitive complexity across forms of the examination. This would strengthen the content evidence for the

program and provide an opportunity for demonstrating a direct link between the examination and what

occurs in practice. These two activities – program design and job analysis – should be considered as priorities

with additional redevelopment and validation activities (e.g., content development, content review, pilot

testing, psychometric analysis, equating) occurring as subsequent activities.

Recognizing the interrelated aspects of validation evidence for testing programs, it is valuable to interpret the

results of this study and its potential impact on the recently conducted standard setting study for the

California Bar Examination. Specifically, the results of the content validation study suggested that most of the

content on the examination was important for entry level practice without substantive gaps in what is

currently measured on the examination compared with what is expected for practice. However, if the

examination is revised in the future, it would likely require revisiting the standard setting study.

The purpose of this report is to document who was involved in the process, processes that were used, results

of the content validation study, conclusions about content validity of the examination, and recommendations

for next steps in the examination development and validation process.

Introduction

The purpose of licensure examinations like the California Bar Exam is to distinguish candidates who are at

least minimally competent from those who could do harm to the public (i.e., not competent). This examination

purpose is distinguished from other types of exams in that licensure exams are not designed to evaluate

training programs, evaluate mastery of content, predict success in professional practice, or ensure

employability. As part of the validation process for credentialing examinations, a critical component includes

content validation (see Kane, 2006). Content validation involves collecting and evaluating evidence of

alignment of content (e.g., knowledge, skills, abilities) and cognitive processing (e.g., application, analysis,

evaluation) to established job-related knowledge, skills, abilities, and judgments. Substantive overlap between

what is measured by the examination and what is important for entry level practice is needed to support an

argument that the content evidence contributes to valid scores and conclusions.

Current Examination Design

The California Bar Exam is built on multiple components intended to measure the breadth and depth of

content needed by entry level attorneys. Beginning with the July 2017 examination, these components include

the Multistate Bar Exam (MBE) (175 scored and 25 unscored multiple-choice questions), five essay questions,

and a performance task. The combined score for the examination weights the MBE at 50% and the written

response components at 50% with the performance task being weighted as twice as much as an essay

question.¹

A decision about passing or failing is based on the compensatory performance of applicants on the

examination and not any single component. This means that a total score is used to make decisions and no

one question or task alone determines the pass/fail outcome.

Study Purpose

The purpose of this study was to evaluate the content representation and content complexity of the California

Bar Examination in comparison with the results of a job analysis conducted by the National Conference of Bar

Examiners (NCBE) in 2012. To collect the information to evaluate these questions, Dr. Chad Buckendahl of ACS

Ventures, LLC (ACS) facilitated a content validation workshop on June 6-8, 2017 in San Francisco, CA. The

purpose of the meeting was to ask subject matter experts (SMEs) to make judgments about the content and

cognitive complexity of the components of the California Bar examination.

This report describes the sources of validity evidence that were collected, summarizes the results of the study,

and evaluates the results using the framework for alignment studies suggested by Davis-Becker and

Buckendahl (2013). The conclusions and recommendations for the examination program are based on this

evaluation and are intended to provide summative (i.e., decision making) and formative (i.e., information for

improvement) feedback for the California Bar Examination.

¹ Before July 2017, the written section of the bar exam was weighted 65 percent of the total score and consisted of six

essay questions and two performance test questions administered over two days.

Procedures

The content validation approach used for the study relies on the content and cognitive complexity judgments

suggested by Webb (1997). In this method, panelists make judgments about the cognitive complexity and

content fit of exam items or score points relative to content expectations. For this study, those content

expectations were based on the 2012 NCBE job analysis supplemented by links to the U.S. Department of

Labor’s O*NET² regarding lawyers that was updated in 2017.

A job analysis is a study often conducted every five to seven years to evaluate the job-related knowledge,

skills, and abilities that define a given profession. Conducting a job analysis study for a profession can often

take 9-12 months to complete. The results from the NCBE study were used as a reference point because these data

were within the typical range for conducting these studies and were a readily available resource given the timeline

under which the California Bar Exam was asked to provide evidence of content validation of its examination.

Panelists

Ten panelists participated in the workshop and were recruited to represent a range of stakeholder groups.

These groups were defined as Recently Licensed Professionals (panelists with less than five years of

experience), Experienced Professionals (panelists with ten or more years of experience), and Faculty/Educator

(panelists employed at a college or university). A summary of the panelists’ qualifications is shown in Table 1.

Table 1. Profile of content validation workshop panel

Race/Ethnicity              Freq.   Percent
Asian                           1      10.0
Black                           2      20.0
Hispanic                        1      10.0
White                           6      60.0
Total                          10     100.0

Gender                      Freq.   Percent
Female                          5      50.0
Male                            5      50.0
Total                          10     100.0

Years of Practice           Freq.   Percent
5 Years or Less                 2      20.0
>=10                            8      80.0
Total                          10     100.0

Nominating Entity           Freq.   Percent
ABA Law Schools                 2      20.0
Assembly Judiciary Comm.        1      10.0
Board of Trustees               1      10.0
BOT – COAF³                     3      30.0
CALS Law Schools                1      10.0
Registered Law Schools          1      10.0
Senior Grader                   1      10.0
Total                          10     100.0

Employment type             Freq.   Percent
Academic                        3      30.0
Large Firm                      2      20.0
Non Profit                      1      10.0
Small Firm                      1      10.0
Solo Practice                   3      30.0
Total                          10     100.0

² The O*NET is an online resource for evaluating job-related characteristics of professions. See https://www.onetonline.org/ for additional information.

³ Council on Access & Fairness.

Workshop Activities

The California Bar Exam content validation workshop was conducted June 6-8, 2017 in San Francisco, CA. Prior

to the meeting, participants were informed that they would be engaging in tasks to evaluate the content and

cognitive complexity of the components of the California Bar Examination. The content validation process

included an orientation and training followed by operational alignment judgment activities for each

essay/performance task and MBE subject area, as well as written evaluations to gather panelists’ opinions of

the process. Workshop orientation and related materials are provided in Appendix B.

Orientation

The meeting commenced on June 6th with Dr. Buckendahl providing a general orientation and training for all

panelists that included the goals of the meeting, an overview of the examination, cognitive complexity levels,

and specific instructions for panel activities. Additionally, the orientation described how the results would be

used by policymakers and examination developers to evaluate the current structure and content

representation of the examination.

Specifically, the topics that were discussed in the orientation included:

• The interpretation and intended use of scores from the California Bar Exam (i.e., licensure)

• Background information on the development of the California Bar Exam

• Summary results of the NCBE job analysis and O*NET descriptions

• Purpose of alignment information for informing validity evidence

After this initial orientation, the panel was trained on the alignment processes that were used. This training

included discussions of the following:

• Cognitive complexity framework – understanding each level, evaluating content framework

• Content match – evaluating fit of score points or subject areas to job-related content

• Decision making process – independent review followed by group consensus

After the training, the panelists began making judgments about the examination. Their first task involved

making judgments about the intended cognitive complexity of the knowledge, skills, abilities, and task

statements from the 2012 NCBE job analysis. The cognitive complexity framework used for this study was an

adaptation of Webb’s (1997) Depth of Knowledge (DOK) for a credentialing exam. The DOK levels represent

the level of cognitive processing associated with performing a task or activity. Lower DOK levels correspond to

cognitive processes such as recall or remembering while higher levels correspond to application of knowledge,

analysis, or evaluation. Within Webb’s (1997) framework, Level 1 is defined as recall and reproduction, Level 2

is defined as working with skills and concepts, Level 3 is defined as short-term strategic thinking, and Level 4 is

defined as extended strategic thinking. For this study, Level 1 was defined as recall or memorization, Level 2

was further clarified as representing the understanding and application level of cognitive process, Level 3 was

defined as analysis and evaluation, and Level 4 was defined as creation of new knowledge.

Within psychological measurement, the depth of cognitive processing is considered in combination with the

content to ensure that the claims made about candidates’ abilities are consistent with the target construct.

The DOK framework is one of many potential scales that can be used to evaluate this aspect of content.

Another commonly used model comes from Bloom (1956) and defines cognitive processes as knowledge,

comprehension, application, analysis, synthesis, and evaluation. The inclusion of cognitive complexity as a

consideration in the evaluation of the content validity of the California Bar Exam is important because it

provides information not only on what may be needed on the examination, but also on the cognitive level at which

candidates should be able to function with the content. Procedurally, after rating the DOK of the first few statements

as a group, panelists made judgments independently followed by consensus discussions. This consensus

judgment was then recorded and used for the subsequent analysis.

Content Validity Judgments

Although characterized as “content,” content validation is inclusive of judgments about both cognitive

complexity and content match. After a review of the knowledge and task statements from the job analysis, the

panelists began reviewing the components of the examination. For these components, panelists made

independent judgments regarding the content match with the results of the NCBE job analysis. To calibrate

the group to the process and the rating tasks, some of the judgments occurred as full group facilitated

discussions, with other judgments occurring independently followed by consensus discussions. At key phases

of the process, panelists completed a written evaluation of the process including how well they understood the

alignment tasks, their confidence in their judgments, and the time allocated to make these judgments.

On the first day, panelists reviewed and determined the cognitive complexity levels of each knowledge and

task statement of the job analysis. This activity was done to establish the expected depth of knowledge (DOK)

associated with the respective knowledge, skill, and ability (KSA). A summary of the results from these

judgments suggested that most KSAs were judged to be at Levels 2 and 3 of the DOK framework. This means

that most of the California Bar Examination is expected to measure candidates’ abilities at levels beyond recall

and memorization, specifically at the understanding, application, analysis, and evaluation levels. As shown

below in Table 2, the current examination reflects measurement consistent with these

expectations.

On the second day, the panel began making alignment judgments on the essay questions with the first one

occurring as a full group activity. This was then followed by dividing up the task to have two subgroups each

evaluate two essay questions and come to consensus on the judgments. After completing judgments on the

essay questions, the full group then reviewed the expected content and DOK for the performance task and

discussed the representation of content/skills. The third day then involved a full group facilitated discussion

in which panelists made judgments about the representation of domains of the MBE examination to evaluate their proportional

contribution to the overall content representation.

These judgment activities were followed by a facilitated discussion about content that could be measured on

the examination that was not discussed (e.g., subject areas that were measured in other years). A related part

of this brief discussion was where content that is eligible for sampling on the California Bar Exam may be more

appropriately represented (e.g., Bar Examination, MCLE). These results are included in the evaluation section

of this report, but should not be interpreted as a program design or redesign activity. The inclusion of this part

of the study responded to a request to gather some high-level information as a starting point for additional

exploration of how the California Bar Examination should be defined and structured.

Analysis and Results

The content validation findings are intended to evaluate the following questions:

• What is the content representation of the California Bar Exam essay questions, performance task, and

MBE subject areas relative to the knowledge and task statements of the NCBE job analysis?

• What knowledge and task statements from the NCBE job analysis are NOT covered by the California

Bar Exam?

• What California Bar Exam content does NOT align with the knowledge and task statements of the

NCBE’s 2012 job analysis?

There are currently 13 subject areas that can be sampled on the written portion of the California Bar Exam.

This means that not all subject areas can be included each year; instead, they are sampled over time. To answer

these content validation questions, the proportional contribution (i.e., percentage) of each exam component

was estimated to approximate the distribution of content for the examination. This distribution is influenced

by the sampling of content that occurs on the examination each year. As noted, each of the 13 subject areas

cannot be included each year, so the content specifications require sampling to occur over multiple years.

For example, if a Real Property essay question is included for an examination, we would expect to see greater

representation of the Real Property subdomain relative to years where this subject area is not included as part

of the sampling plan. This is also why consideration was given to the content sampling plan for the program

and not any single year. To apply a content sampling approach, it is important that the examination meet an

assumption of unidimensionality (i.e., there is a dominant construct that is measured by the exam). If this

assumption is met, then the variability of content year-to-year does not pose a significant threat to the validity

of interpretations of the scores, even if there is an intuitive belief about what content should or should not be

on the examination.

To illustrate the effect of the content sampling over time, it is important to understand what parts of the

examination are constant versus variable across years. With the weighting of the exam beginning in July 2017

being 50% from the Multistate Bar Exam (MBE) and 50% from the written component (i.e., essay questions

and performance task), we can calculate how much each part of the examination contributes to the whole.

This breakdown is shown here:

Multistate Bar Exam (50%)

- The MBE is comprised of seven subject area sections, each with 25 scored questions. This means

that each of these sections contributes approximately 7% to the total score (i.e., 1 section divided

by 7 total sections and then multiplied by 50% to reflect that the MBE is only half of the exam).

- The blueprint for the MBE is fixed, meaning that the same seven content areas are measured each

year. Therefore, the representation of content from this exam is consistent year-to-year until any

changes are made to the blueprint.

Written Component (50%)

- The written component of the examination is comprised of five essay questions and one

performance task that is weighted twice as much as one essay question. This means that for the

written component, each of the five essay questions represents approximately 7% of the total

score and the performance task represents approximately 14% of the total score (i.e., 1 essay

question divided by 7 total scoring elements [the performance task is calculated as 2 divided by 7

total scoring elements to reflect the double weighting] and then multiplied by 50% to reflect that

the written component is only half of the exam).

- The blueprint for the written component is fixed with respect to the number of essay questions

and performance task, but there is content sampling that occurs across the 13 subject areas

currently eligible for selection. However, one of these subject areas, Professional Responsibility, is

represented each year on the examination. The potential impact of content sampling is

discussed further below.
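
The breakdown above can be sketched as a short calculation. The following Python snippet is a minimal illustration and not part of the study's materials. The function and variable names are hypothetical, and the only inputs are the weights stated in this report (50% for the MBE across seven equally weighted sections, 50% for the written component across five essays and one double-weighted performance task).

```python
from fractions import Fraction

# Component weights as stated in the report (format beginning July 2017).
MBE_WEIGHT = Fraction(1, 2)
WRITTEN_WEIGHT = Fraction(1, 2)

MBE_SECTIONS = 7             # equally weighted MBE subject area sections
ESSAY_COUNT = 5              # each essay counts as one scoring element
PERFORMANCE_TASK_UNITS = 2   # performance task counts as two essays


def total_score_shares():
    """Return each element's share of the total score (hypothetical helper)."""
    written_units = ESSAY_COUNT + PERFORMANCE_TASK_UNITS  # 7 scoring elements
    per_unit = WRITTEN_WEIGHT / written_units
    return {
        "mbe_section": MBE_WEIGHT / MBE_SECTIONS,
        "essay": per_unit,
        "performance_task": PERFORMANCE_TASK_UNITS * per_unit,
    }


shares = total_score_shares()
for name, share in shares.items():
    print(f"{name}: {float(share):.1%}")
```

Using exact fractions makes the rounding visible: each MBE section and each essay question is 1/14 of the total score (about 7.1%), and the performance task is 1/7 (about 14.3%), which the report rounds to 7% and 14%.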

The summary matrix in Table 2 represents the combination of information from the cognitive complexity

ratings (reflected as Depth of Knowledge levels) in addition to the proportion of aligned content. For

efficiency, the results are included for areas of content that were judged to align. Note that there were no

components or subcomponents of the California Bar Exam that did not align with knowledge and task

statements from the job analysis. There were, however, some areas suggested by the job analysis that could

be considered in future development efforts by the Bar Exam that are discussed in the Conclusions and Next

Steps section of this report.

Additional explanation is needed for readers to interpret the information presented in Table 2. Within the

table, the first two columns refer to the knowledge, skills, abilities, or general tasks that were part of the

summary results from the NCBE job analysis. Information in the third column relies on a coding scheme where

K-1 refers to the first knowledge statement, S-1 refers to the first skill statement, A-1 refers to the first ability

statement, and T-1 refers to the first task statement in the O*NET framework. Other links within this framework

will associate a letter and numerical code to the appropriate statement (e.g., K-2 refers to the second

knowledge statement, T-3 refers to the third task statement). This information is provided to illustrate

alignment with a concurrent source of evidence regarding knowledge, skills, abilities, and tasks that may be

representative of entry-level practice. For interested readers, the narrative descriptions of these links to the

O*NET that were used by panelists are provided in Appendix B.

The Statement DOK column provides information about the expected cognitive complexity for entry-level

lawyers on the given knowledge, skill, ability, or task statement with lower numbers being associated with

lower levels of cognitive complexity on the 1 (recall or memorization), 2 (understanding and application), 3

(analysis and evaluation) and 4 (creation) scale described above.

The last three columns of Table 2 provide information about the estimated percent of the examination that was

represented by content on the July 2016 administration, with an important caveat. Because the goal of the

content validation study was to evaluate the content representation that may occur on the California Bar

Examination based on the new examination format that began in July 2017, we selected five essay questions

and a performance task as representative of how an examination could be constructed without regard to

specific content constraints (i.e., specific subject areas that may be included). This means that the

interpretation of the results is dependent on the content sampling selected for the study. This concept is

further discussed in the next section.

As described above, to calculate the percentage of coverage for a given content area, we first applied the

weights to the respective components of the examination (i.e., 50% for the essays and performance task

[written] component, 50% for the multistate bar exam [MBE]). We then calculated the proportion of each

subsection within a component based on its contribution to the total score. For example, each essay question

is weighted equally with the performance task weighted twice as much as an essay question. This means that

within the written component, there are six questions where one of the questions is worth twice as much.

Proportionally, this means that each essay question is worth approximately 14% of the written component

score whereas the performance task is worth approximately 28% of the written component score.

However, because the written component only represents half of the total test score, this means that these

percentages are multiplied by 50% to determine the weight for the full examination (i.e., approximately 7% for

each essay question, 14% for the performance task). The same calculation was applied to the seven equally

weighted sections of the MBE. Ratings from panelists on each of the essay questions, performance task, and

the content outline from the MBE were communicated as consensus ratings and based on proportional

contributions of knowledge, skills, and abilities. These proportions could then be analyzed as weights based on

the calculations described above to determine the component and overall content representation.
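The weighting arithmetic described above can be sketched as follows. This is a minimal illustration rather than the study's actual computation: the function and variable names are hypothetical, and the only inputs are the weights stated in the text (a 50/50 split between the written component and the MBE, five essays, a performance task counted twice as much as an essay, and seven equally weighted MBE sections).

```python
from fractions import Fraction

# Component weights stated in the report: written and MBE each count for half.
WRITTEN_WEIGHT = Fraction(1, 2)
MBE_WEIGHT = Fraction(1, 2)

# Relative units within the written component (hypothetical names):
# five essays at 1 unit each, one performance task at 2 units.
written_units = {f"essay_{i}": 1 for i in range(1, 6)}
written_units["performance_task"] = 2

# Seven equally weighted MBE sections.
mbe_units = {f"mbe_section_{i}": 1 for i in range(1, 8)}


def exam_weights(units, component_weight):
    """Return each part's share of the total score as an exact fraction."""
    total_units = sum(units.values())
    return {
        name: Fraction(u, total_units) * component_weight
        for name, u in units.items()
    }


written = exam_weights(written_units, WRITTEN_WEIGHT)
mbe = exam_weights(mbe_units, MBE_WEIGHT)

for name, share in {**written, **mbe}.items():
    print(f"{name}: {float(share):.2%} of the total score")
```

Exact fractions are used so that the shares sum to exactly 100% before any rounding. The rounded values shown in Table 2 are why its column totals do not add up exactly.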

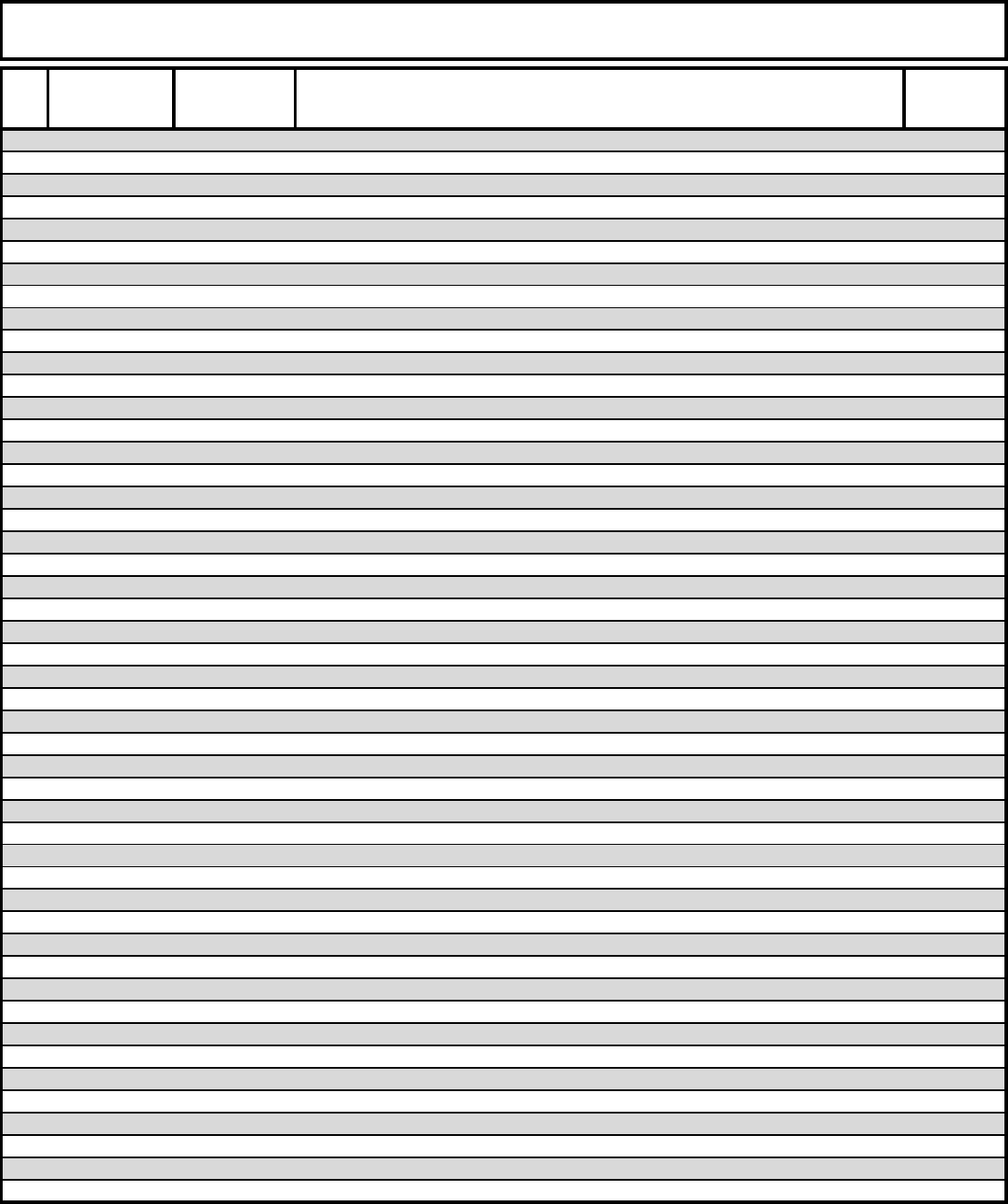

Table 2. Consolidated content validation results with approximate percentage of representation.

| # | Knowledge, Skills, Abilities and Tasks from the NCBE Job Analysis Survey | Link to O*NET | Statement DOK | % of Exam: Essays and PT | % of Exam: MBE | % of Exam: Total |
|---|---|---|---|---|---|---|
| | Section I. Knowledge Domains [4] | | | | | |
| 1 | Rules of Civil Procedure | K-1 | 2 | 4% | 4% | 7% |
| 2 | Other Statutory and Court Rules of Procedure | K-1 | 1 | - | 4% | 4% |
| 3 | Rules of Evidence | K-1 | 2 | - | 7% | 7% |
| 10 | Contract Law [5] | K-1 | 2 | 3% | 7% | 10% |
| 11 | Tort Law | K-1 | 2 | - | 4% | 4% |
| 12 | Criminal Law | K-1 | 2 | - | 7% | 7% |
| 13 | Rules of Criminal Procedure [6] | K-1 | 2 | - | - | 0% |
| 14 | Other Privileges [7] | K-1 | 2 | - | - | 0% |
| 15 | Personal Injury Law | K-1 | 1 | - | 4% | 4% |
| 19 | Principles of Electronic Discovery [8] | K-1 | 1 | 1% | - | 1% |
| 20 | Real Property Law | K-1 | 2 | 3% | 7% | 10% |
| 21 | Constitutional Law [9] | K-1 | 2 | 3% | 7% | 10% |
| 24 | Family Law | K-1 | 2 | 3% | - | 3% |
| | Section II. Skills and Abilities | | | | | |
| 87 | Written communication | S-9, A-5 | 3 | 4% | - | 4% |
| 93 | Critical reading and comprehension | S-3, A-3 | 3 | 3% | - | 3% |
| 94 | Synthesizing facts and law | A-7 | 3 | 8% | - | 8% |
| 95 | Legal reasoning | A-6, A-7 | 3 | 15% | - | 15% |
| 100 | Issue spotting | S-5 | 3 | 1% | - | 1% |
| 108 | Fact gathering and evaluation | S-5 | 3 | 2% | - | 2% |
| | Section III. General Tasks | | | | | |
| 123 | Identify issues in case | T-1, T-12 | 2 | 2% | | 2% |
| | Total [10] | | | 50% | 50% | 100% |

[4] Note that a current content constraint of the examination is that Professional Responsibility and Ethics is represented on each form of the test. When this content area is included it would reduce the representation of another content area that would be sampled.

[5] MBE content for this area was also judged to partially align with Real Property.

[6] MBE content for this area was also judged to partially align with Criminal Law and Procedure.

[7] MBE content for this area was also judged to partially align with Evidence.

[8] MBE content for this area was also judged to partially align with Civil Procedure.

[9] MBE content for this area was also judged to partially align with Civil Procedure, Criminal Law and Procedure, and Torts.

As shown in the footnotes of Table 2, there were areas of the MBE that could represent additional areas of

content. However, the extent of that alignment is unknown because we did not have access to the actual test

items; only the publicly available content outline. As a result, this report includes the judgments from the

panel as a reference point for future study if the actual forms of the MBE are available for external evaluation

in the future. To avoid speculation for this report, we did not estimate the potential contribution of these

additional areas and only noted them.

Content Sampling Across Years

As noted above, the written component of the examination currently samples from 13 subject areas. Table 3

shows the number of times that each of these subject areas has been represented by essay questions over the

last decade. This information is useful to evaluate whether the content emphasis is consistent with the subject

areas that have been judged as more or less important in the practice analysis. In noting that one of the

subject areas, Professional Responsibility, is sampled every year, we would expect some variability in the other

four essay questions as subjects are sampled across years. Note that the performance task is not related to the

subject area and focuses specifically on lawyer skills, so the proportional measurement of these abilities also

appears to be consistent across years.

[10] Note that totals for each component of the examination and overall will not equal 100% due to rounding.

Table 3. Representation of subject areas from 2008-2017 (n=20 administrations).

| Subject area | Frequency of representation [11] | Rating of significance [12] | Percent Performing [13] |
|---|---|---|---|
| Professional Responsibility | 19 | 2.83 | 93% |
| Remedies | 12 | N/A [14] | N/A |
| Business Associations | 11 | 2.33 | 67% |
| Civil Procedure | 10 | 3.08 | 86% |
| Community Property [15] | 10 | 2.23 | 53% |
| Constitutional Law | 10 | 2.29 | 76% |
| Contracts | 10 | 2.67 | 84% |
| Evidence | 10 | 3.01 | 81% |
| Torts | 10 | 2.50 | 61% |
| Criminal Law and Procedures | 9 | 2.50/2.47 [16] | 54%/54% |
| Real Property | 9 | 2.30 | 56% |
| Trusts | 7 | 1.95 | 44% |
| Wills | 7 | 2.21 | 46% |

[11] Frequency is defined as the number of times a subject area was represented as a main or crossover topic on the California Bar Examination from 2008-2017.

[12] Ratings are based on the average Knowledge Domain ratings for the 2012 NCBE Job Analysis study on a scale of 1 to 4 with values closer to 4 representing more significant content.

[13] Ratings are based on the percentage of respondents indicating that they perform the knowledge for the 2012 NCBE Job Analysis study. Values range from 0% to 100% with higher percentages indicating that more practitioners perform the knowledge.

[14] Remedies does not align with a single Knowledge Domain because it crosses over multiple, substantive areas of practice in law.

[15] Community Property was interpreted to be part of Family Law.

[16] Criminal Law and Procedures were asked as separate Knowledge Domain statements. Each significance rating is included.

For the essay questions in this study, panelists judged each one as measuring approximately 50% of the

subject area knowledge (e.g., real property, contracts) and 50% of lawyer skills (e.g., application of law to

facts, analysis, reasoning). This means that for a given essay question, the measurement of the subject area

knowledge represents approximately 3.6% of the total examination (i.e., each essay question contributes

approximately 7% to the total score (7.14% to be more specific), so if 50% of this is based on the subject area,

7% multiplied by 50% results in approximately 3.6% of the measurement being attributable to the subject

area).

Knowing that the current sampling plan includes Professional Responsibility effectively yearly along with the

performance task, this means that subject area sampling only applies to the four essay questions that may

represent a different subject area year-to-year. In aggregate, this means that the potential variability in the

measurement of the examination across years is approximately 14%-15% (i.e., 3.6% multiplied by the 4 essay

questions). Another way to communicate these results is to say that 85%-86% of the measurement of the

examination remains constant across years. This suggests that what is being measured on the examination

remains stable.
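The year-to-year variability estimate can be reproduced with a few lines of arithmetic. The sketch below uses only the figures stated in the text (each essay is one fourteenth of the total score, half of each essay is judged to measure subject area knowledge, and four essays rotate subject areas). The variable names are illustrative.

```python
# Share of the total score carried by one essay question (1/7 of the written half).
essay_weight = 1 / 14          # about 7.14%

# Panel judgment: half of each essay measures subject area knowledge.
knowledge_share = 0.50

# Essays whose subject area may differ from one administration to the next.
rotating_essays = 4

per_essay_knowledge = essay_weight * knowledge_share       # about 3.6%
variable_portion = per_essay_knowledge * rotating_essays   # about 14.3%
stable_portion = 1 - variable_portion                      # about 85.7%

print(f"Subject knowledge per essay: {per_essay_knowledge:.1%}")
print(f"Portion that can vary across years: {variable_portion:.1%}")
print(f"Portion that remains constant: {stable_portion:.1%}")
```

Using the rounded 3.6% figure instead of the unrounded value gives 14.4%, which is why the text reports the range 14%-15%.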

In addition, the relationship between the emphasis of the subject areas in Table 3 as represented by the

frequency of occurrence, the average significance rating, and the percent performing provides some

information that will inform future examination redevelopment. Specifically, the correlation between the

frequency of subject areas being represented on the examination and the average significance rating was 0.48

while the correlation between the frequency of subject area representation and percent performing was 0.70.

The correlation between the significance of the topic and the percent performing was 0.83. However, these

results should not be over-interpreted based on the limited number of observations (n=12). These results

suggest that there is a moderate relationship between the content sampling and evidence of importance of

subject areas to entry-level practice. However, there are likely opportunities to further align the content

sampling with subject areas that were rated as more or less significant for entry-level practice.
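For readers who wish to check these correlations, the sketch below computes Pearson coefficients from the Table 3 values. Two points are assumptions rather than details confirmed by the report: Remedies is dropped because it has no ratings (leaving n=12), and the two Criminal Law and Procedures significance ratings (2.50 and 2.47) are averaged into one value. Under these assumptions the coefficients should come out close to the reported 0.48, 0.70, and 0.83.

```python
from math import sqrt

# (subject area, frequency, significance rating, percent performing) from Table 3.
# Remedies is omitted because its ratings are N/A.
# ASSUMPTION: the two Criminal Law and Procedures ratings are averaged.
TABLE_3 = [
    ("Professional Responsibility", 19, 2.83, 93),
    ("Business Associations", 11, 2.33, 67),
    ("Civil Procedure", 10, 3.08, 86),
    ("Community Property", 10, 2.23, 53),
    ("Constitutional Law", 10, 2.29, 76),
    ("Contracts", 10, 2.67, 84),
    ("Evidence", 10, 3.01, 81),
    ("Torts", 10, 2.50, 61),
    ("Criminal Law and Procedures", 9, (2.50 + 2.47) / 2, 54),
    ("Real Property", 9, 2.30, 56),
    ("Trusts", 7, 1.95, 44),
    ("Wills", 7, 2.21, 46),
]


def pearson(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


frequency = [row[1] for row in TABLE_3]
significance = [row[2] for row in TABLE_3]
performing = [row[3] for row in TABLE_3]

r_freq_sig = pearson(frequency, significance)
r_freq_perf = pearson(frequency, performing)
r_sig_perf = pearson(significance, performing)

print(f"frequency vs. significance:  {r_freq_sig:.2f}")
print(f"frequency vs. performing:    {r_freq_perf:.2f}")
print(f"significance vs. performing: {r_sig_perf:.2f}")
```

With only 12 observations, a single subject area can move these coefficients noticeably. Professional Responsibility, with its frequency of 19, is the most influential point.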

Evaluating the Content Validation Study

To evaluate the content validation study, we applied Davis-Becker and Buckendahl’s (2013) framework for

alignment studies. Within this framework, the authors suggested four sources of evidence that should be

considered in the validation process: procedural, internal, external, and utility. If threats to validity are

observed in these areas, it will inform policymakers’ judgments regarding the usefulness of the results and the

validity of the interpretation. Evidence within each area that was observed in this study is discussed below.

One important limitation of the study that could pose a threat to the validity of the results is the lack of direct

evidence from the MBE. Content validation studies generally involve direct judgments about the

characteristics of the examination content. Because examination items (i.e., questions) from the MBE were

not available for the study, panelists were asked to make judgments about the content evidence from publicly

available subject matter outlines provided by the NCBE. There is then an assumption that items coded to these

sections of the outline align as intended. However, this assumption should be directly reviewed. Because

California is using scores from the MBE as an increasingly important component of its decision-making

process, it is reasonable to expect that NCBE make forms of the test available for validation studies.[17]

Procedural

Procedural evidence was available when considering panelist selection and qualifications, choice of

methodology, application of the methodology, and panelists’ perspectives about the implementation of the

methodology. For this study, the panel that was recruited represented a range of stakeholders: both newer

and more experienced attorneys as well as representatives from higher education. Because content validation

judgments are more objective in nature (i.e., what does this question measure) as opposed to making

standard setting judgments (e.g., how would a minimally competent candidate perform), there are fewer

criteria needed with respect to panelist selection other than that they were knowledgeable about the content

and familiar with the population of examinees. Again, this was not an activity to determine what should be on

the examination, but rather, what is currently being measured by the examination.

In selecting the methodology for the study, alternative designs were considered. One design could have had

panelists making judgments about whether the content and cognitive complexity of the components of the

examination were appropriate for entry-level practice. The risk in this approach is the diverse opinions

represented by stakeholder groups without a common reference point or link to evidence of what occurs in

practice. This type of evidence is typically available following a practice analysis and is then used to build a

blueprint from which examination forms are constructed. At that point, such a design could have been

implemented because the common reference point would have been the blueprint that was developed with a

clear link to practice. However, this information was not available; therefore, this design would have been

inappropriate and would have only highlighted individual panelists’ opinions or biases (e.g., practitioners’

preference for content that aligns with their respective area(s) of practice, higher education representatives’

preference for content that aligns with their curriculum).

To have a common reference point for panelists to evaluate the alignment of content, we selected the

summary results from the 2012 NCBE job analysis study. These results were derived from a national survey

that collected information about the knowledge, skills, abilities, and tasks of lawyers. Although the results

were not specific to California, it is reasonable to expect that these results would generalize to expectations

for attorneys in California. So, the design that included this information along with the evidence from the U.S.

Department of Labor’s O*NET provided concurrent evidence of the characteristics of attorneys in practice.

For the rating activities, essay questions and the performance task are based on scoring considerations that

include multiple traits. Therefore, panelists were asked to break down the scoring to proportionally align the

parts of these questions that matched with different knowledge, skills, or abilities. To have only evaluated the

questions holistically would not have revealed the differential content representation. Given the constructed

response aspects of the essay questions and performance task, the methodology and rating tasks were

consistent with the types of questions and judgments that could be provided.

[17] For security reasons and to protect the integrity of the empirical characteristics of operational questions, NCBE only makes available practice questions or “retired” questions, but not the entire exam from a specific administration.

With respect to the process evaluation, panelists’ perspectives on the process were collected and the

evaluation responses were consistently positive suggesting that they understood the process and were

confident in their judgments about the content validity. In addition, panelists provided comments about

aspects of the process that could be improved. This feedback did not threaten the validity of the results, but

does inform some of the suggested next steps for the program.

Internal

The internal evidence for content validation studies can be evaluated by examining the consistency of

panelists’ ratings and the convergence of the recommendations. One approach to content validity studies is to

use one or more rating scales where panelists rate individual questions or score points on different criteria

(Davis-Becker & Buckendahl, 2013). Decision rules can then be applied to analyze and evaluate the results

along with calculating levels of agreement among the panelists. However, this methodology is often more

appropriate with more discrete items.

For this study, the rating tasks and decision rules were based on consensus judgments that occurred based on

discussions among panelists following individual ratings. This approach is more qualitative in nature and was

selected based on the types of assessment items and corresponding scoring criteria/rubrics that were

evaluated (i.e., constructed response) along with the lack of an opportunity for direct judgments on items on

the MBE. Although the results should not be interpreted as unanimous support by the panelists, consensus

was achieved for the content and cognitive complexity rating tasks.

External

The primary source of external evidence for the study was based on the results of the 2012 NCBE job analysis as

an indicator of suggested content for entry-level practice based on a nationally representative sample of

practitioners. In addition, links to the U.S. Department of Labor’s O*NET that was updated for lawyers in 2017

were also included to provide another source. The summary results of the NCBE job analysis study included

ratings of knowledge, skills, abilities, and tasks.

There is an important caveat to note about NCBE’s study. Specifically, because the study was designed and

implemented as a task inventory (i.e., a list of knowledge, skills, abilities, and tasks) rather than competency

statements, there were many statements that were redundant, overlapping, or that could be consolidated or

subsumed within other statements. This means that the component parts of an activity such as preparing a memo

for a client (e.g., critical reading and comprehension, identifying the primary question, distinguishing

relevant from irrelevant facts, preparing a written response) were listed as separate statements when these

are part of the same integrated, job-related task. More important, the scoring criteria or

rubric would not distinguish these elements and would instead allocate points for skills such as identifying and

applying the appropriate legal principles to a given fact pattern or scenario; or drawing conclusions that are

supported with reasoning and evidence.

However, the value of the job analysis study is that it served as a common, external source against which to

evaluate the content and cognitive complexity of the California Bar Examination. A lack of overlap in some

areas should not be interpreted as a fatal flaw due to the design of the job analysis. The results can be used to

inform next steps in evaluating validity evidence for the program.

Utility

Evidence of utility is based largely on the extent to which the summative and formative feedback can be used

to inform policy and operational decisions related to examination development and validation. The summative

information from the study suggests that the content and cognitive complexity as represented by content of

the examination are consistent with expectations for entry level attorneys when compared with the highly

rated knowledge, skills, and abilities of the 2012 NCBE job analysis.

However, whether the proportional contribution of this content (i.e., the percentage of representation of the

range of knowledge, skills, abilities) is being implemented as intended is a question that would need to be

evaluated as part of the next steps for the program. The intended representation of content for a

credentialing examination is generally informed by a job analysis (also sometimes called a practice analysis or

occupational analysis, see Clauser and Raymond (2017) for additional information).

These studies often begin with a focus group or task force that defines the knowledge, skills, and abilities for

the target candidate (e.g., minimally competent candidate, minimally qualified candidate) to create task or

competency statements. These statements are then typically compiled into a questionnaire that is

administered as a survey of practitioners to evaluate the relative emphasis of each statement for entry level

practice. The results from the survey can then be brought back to the focus group or task force to discuss and

make recommendations to the appropriate policy body about the recommended weighting of content on the

examination. This weighting is communicated through an examination blueprint that serves as the guide for

developing examinations for the program.

The formative information from the panelists’ ratings for the individual essay questions and performance task

can be evaluated internally to determine whether this is consistent with expectations. For example, if the

panelists judged a question to require a candidate to demonstrate knowledge of a subject area as

representing 50% of the measurement of the question with the other 50% representing skills, the internal

evaluation would ask the question of whether this was intended. This intent is evaluated through the design of

the question, the stimulus material contained in it, the specific call of the question for the candidate, and the

scoring criteria or rubric associated with the question. The information from this study provided evidence to

the program of what is currently being measured by the California Bar Examination, but does not conclude

whether this is the information that should be measured on the examination. That type of determination

would be a combination of information from a job analysis in concert with discussions about the design.

In addition, the panelists’ qualitative discussions about potential structural changes to the examination or

whether some content is more appropriate as part of continuing education will be useful for policymaker

deliberations and examination development purposes. The summary of this discussion is included as part of

comments in Appendix C. However, because this was not a primary goal of the study, this information should

be interpreted as a starting point for further study and evaluation, not for decision-making at this point. A

program design activity that involves a look at the examination and the related components would be valuable

to inform decision-making. For example, a potential design for the California Bar Examination might include

the MBE as a measure of federal or cross-jurisdictional competencies, the essay questions may be useful for

measuring subject areas of law that are important and unique to California, and the performance task serving

as a content-neutral measure of the important skills that lawyers need in practice. However, this is a

facilitated activity that is more appropriate for policymakers and practitioners to engage in as a precursor to

the job analysis.

Process Evaluation Results

Panelists completed a series of evaluations during the study that included both Likert scale (i.e., attitude rating

scale) and open-ended questions. The responses to the Likert scale questions are included in Table 4 and the

comments provided are included in Appendix C. With respect to training and preparation, the panelists felt

the training session provided them with an understanding of the process and their task. Following the training,

the panelists indicated they had sufficient time to complete the rating process and felt confident in the results.

The rating scales for questions can be interpreted as lower values being associated with less satisfaction or

confidence and higher values being associated with greater satisfaction or confidence with the respective

statement. Note that for question 2, panelists were only asked to indicate whether the time allocated for

training was too little (1), about right (2), or too much (3).

Table 4. Summary of Process Evaluation Results

| Evaluation item | Median | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1. Success of Training | | | | | |
| Orientation to the workshop | 4 | 0 | 1 | 3 | 6 |
| Overview of alignment | 4 | 0 | 1 | 3 | 6 |
| Discussion of DOK levels | 3.5 | 0 | 1 | 4 | 5 |
| Rating process | 3.5 | 0 | 1 | 4 | 5 |
| 2. Time allocation to Training | 2 | 0 | 9 | 1 | |
| 3. Confidence in Cognitive Complexity Ratings | 3 | 0 | 1 | 7 | 2 |
| 4. Time allocated to Cognitive Complexity Ratings | 3 | 0 | 1 | 5 | 4 |
| 6. Confidence in Day 1 ratings | 4 | 1 | 0 | 2 | 6 |
| 7. Time allocated to Day 1 ratings | 3 | 0 | 0 | 5 | 4 |
| 9. Confidence in Day 2 ratings | 3 | 0 | 0 | 5 | 3 |
| 10. Time allocated to Day 2 ratings | 3.5 | 0 | 0 | 4 | 4 |
| 12. Confidence in Day 3 ratings | 3.5 | 0 | 0 | 4 | 4 |
| 13. Time allocated to Day 3 ratings | 3.5 | 0 | 0 | 4 | 4 |
| 14. Overall success of the workshop | 3.5 | 0 | 0 | 4 | 4 |
| 15. Overall organization of the workshop | 4 | 0 | 0 | 3 | 5 |
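The medians in Table 4 can be recovered from the response counts. The sketch below is an illustration, not the procedure used in the study: it expands each row of counts into individual ratings and takes the median with Python's standard library. The helper name `median_from_counts` is hypothetical.

```python
from statistics import median


def median_from_counts(counts):
    """Median rating, where counts[i] is the number of panelists choosing rating i + 1."""
    ratings = [
        rating
        for rating, n in enumerate(counts, start=1)
        for _ in range(n)
    ]
    return median(ratings)


# Counts taken from Table 4 for ratings 1 through 4 (1 through 3 for question 2).
orientation = median_from_counts([0, 1, 3, 6])
dok_discussion = median_from_counts([0, 1, 4, 5])
training_time = median_from_counts([0, 9, 1])

print(orientation, dok_discussion, training_time)
```

When the number of responses is even, the median is the average of the two middle ratings, which is how half-point values such as 3.5 arise on a whole-number scale.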

Gap Analysis

The content validation study was designed to evaluate the extent to which content on the California Bar

Examination aligned with expectations for entry level practice for lawyers. In addition, a gap analysis was

conducted to also respond to the question about what content may be important for entry level practice, but

is not currently measured on the examination. For this analysis, two criteria were evaluated.

Specifically, the ratings of significance and percent performing from the NCBE job analysis survey were

analyzed. For the purposes of this analysis, if a knowledge, skill, ability, or task (KSAT) statement received a

significance rating of 2.5 or higher on a 1-4 scale, it was included as a potential gap. Note that some KSAT

statements were not included, because they were ambiguous or not appropriate for the purposes of licensure

(e.g., Professionalism, Listening Skills, Diligence). Further, statements that were judged to be subsumed within

other statements (e.g., Organizational Skills as an element of Written Communication) are not included to

avoid redundancy. The results of this analysis are shown in Table 5.
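The inclusion rule described above can be expressed as a simple filter. The following is a sketch under stated assumptions, not the analysis code used for the study. The `Statement` class, its flags, and the sample flag values are hypothetical. The significance values are taken from Tables 3 and 5 where available, and the rating for the excluded statement is a placeholder. Percent performing is reported alongside the results, but the text gives no cutoff for it, so the sketch filters on significance only.

```python
from dataclasses import dataclass

SIGNIFICANCE_CUTOFF = 2.5  # threshold stated in the report, on the 1-4 scale


@dataclass(frozen=True)
class Statement:
    text: str
    significance: float      # mean significance rating
    measured_on_exam: bool   # illustrative flag: linked to exam content by the panel
    excluded: bool = False   # illustrative flag: ambiguous, unsuited to licensure, or subsumed


def potential_gaps(statements):
    """Keep statements at or above the cutoff that are neither measured nor excluded."""
    return [
        s for s in statements
        if s.significance >= SIGNIFICANCE_CUTOFF
        and not s.measured_on_exam
        and not s.excluded
    ]


sample = [
    Statement("Research Methodology", 2.91, measured_on_exam=False),
    Statement("Analyze law", 3.46, measured_on_exam=False),
    # Rating listed for Civil Procedure in Table 3.
    Statement("Rules of Civil Procedure", 3.08, measured_on_exam=True),
    # Below the cutoff; the measured flag here is illustrative only.
    Statement("Trusts", 1.95, measured_on_exam=False),
    # Placeholder rating: the statement is excluded, but its value is not given here.
    Statement("Professionalism", 3.0, measured_on_exam=False, excluded=True),
]

gaps = potential_gaps(sample)
for s in gaps:
    print(f"{s.text}: {s.significance:.2f}")
```

Keeping the exclusion decision as an explicit flag, separate from the numeric threshold, mirrors the two-step judgment in the text: a statement can clear the significance cutoff and still be set aside as ambiguous or subsumed.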

Table 5. Summary of gap analysis of content not primarily measured on the California Bar Examination.

| # | Knowledge, Skills, Abilities and Tasks from the NCBE Job Analysis Survey | Link to O*NET | Statement DOK | Significance (Mean) | % Performing |
|---|---|---|---|---|---|
| | Section I. Knowledge Domains | | | | |
| 5 | Research Methodology | K-1 | 2 | 2.91 | 89% |
| 8 | Statutory Interpretation | K-1 | 1 | 2.83 | 86% |
| 9 | Document Review/Documentary Privileges | K-1 | 2 | 2.73 | 81% |
| | Section II. Skills and Abilities | | | | |
| 92 | Using office technologies (e.g., word processing and email) | K-6 | 1 | 3.56 | 99% |
| 102 | Answering questions succinctly | N/A | 1 | 3.30 | 99% |
| 104 | Computer skills | K-6 | 1 | 3.28 | 99% |
| 105 | Electronic researching | T-8 | 2 | 3.26 | 98% |
| 113 | Negotiation | S-7 | 1 | 2.97 | 87% |
| 114 | Resource management | K-4, T-11 | 1 | 2.93 | 96% |
| 115 | Interviewing | T-14 | 1 | 2.92 | 91% |
| 118 | Attorney client privilege - document reviewing | T-9 | 3 | 2.84 | 86% |
| 119 | Trial skills | T-7 | 1 | 2.71 | 68% |
| 120 | Legal citation | T-9, T-15 | 2 | 2.67 | 95% |
| | Section III. General Tasks | | | | |
| | Management of attorney-client relationship and caseload | | | | |
| 124 | Establish attorney-client relationship | T-18 | 2 | 2.86 | 76% |
| 125 | Establish and maintain calendaring system | T-18 | 1 | 2.86 | 78% |
| 127 | Establish and maintain client trust account | T-21 | 1 | 2.52 | 36% |
| 128 | Evaluate potential client engagement | T-12 | 1 | 2.51 | 67% |
| | Research and Investigation | | | | |
| 142 | Conduct electronic legal research | T-8 | 2 | 3.42 | 96% |
| 143 | Research statutory authority | T-8 | 2 | 3.38 | 95% |
| 144 | Research regulations and rules | T-8 | 2 | 3.31 | 96% |
| 145 | Research judicial authority | T-8 | 2 | 3.19 | 89% |
| 146 | Conduct document review | T-8 | 2 | 3.10 | 86% |
| 147 | Interview client and client representatives | T-14 | 2 | 3.04 | 77% |
| 148 | Conduct fact investigation | T-14 | 2 | 2.91 | 83% |
| 149 | Interview witness | T-14 | 1 | 2.75 | 69% |
| 150 | Research secondary authorities | T-8 | 2 | 2.70 | 92% |
| 151 | Obtain medical records | T-14 | 1 | 2.58 | 61% |
| 152 | Conduct transaction due diligence activities | T-2 | 1 | 2.54 | 58% |
| 153 | Request public records | T-16 | 1 | 2.53 | 81% |
| | Analysis and resolution of client matters | | | | |
| 157 | Analyze law | T-1 | 3 | 3.46 | 97% |
| 158 | Advise client | T-2 | 2 | 3.20 | 87% |
| 159 | Develop strategy for client matter | T-13 | 1 | 3.13 | 87% |
| 160 | Negotiate agreement | T-9, T-10 | 1 | 2.93 | 77% |
| 161 | Draft memo summarizing case law, statutes, and regulations, including legislative history | T-15 | 3 | 2.81 | 86% |
| 163 | Draft demand letter | T-9 | 1 | 2.60 | 65% |
| 164 | Draft legal opinion letter | T-15 | 2 | 2.54 | 76% |
| 165 | Draft case summary | T-15 | 2 | 2.53 | 80% |

The information from the gap analysis can be used to evaluate the current content representation of the

examination to determine whether a) existing elements of measurement should be retained, b) new elements

of measurement should be added, and c) the extent to which the current design of the examination supports

measurement of the important aspects of the domain. A caution in interpreting these results is that some of

the knowledge, skills, abilities, and tasks are not easily measurable in a written examination and may require

different types of measurement strategies, some of these being potentially technology enhanced. An

additional caution is that the statements from the 2012 NCBE job analysis overlapped with each other and

were not mutually exclusive with respect to the tasks that lawyers might perform. For future studies, we would

suggest a competency or integrated task statement based approach that is more consistent with the tasks,

responsibilities, and activities that lawyers engage with as opposed to discrete aspects of practice.

Conclusions and Next Steps

At a summative level, the results of the content validation study suggest that the current version of the

California Bar Examination is measuring important knowledge, skills, and abilities consistent with expectations

of entry level attorneys as suggested by results from the 2012 NCBE job analysis. Whether the observed

representation and proportional weighting are in alignment with the expectations for California cannot be

determined without further evaluation. However, it is important to note that all content on the current

examination was judged to align with elements of the NCBE job analysis that were rated as reasonably

significant and/or performed frequently in practice. This also included the subject areas that are sampled

across years, but were not included in this study.

As recommended next steps for the California Bar Examination in its evaluation of its design and content, the

results of the gap analysis and feedback from panelists provide a useful starting point for further discussion.

Specifically, from the results of the national survey, skills and tasks were generally interpreted as more

generalizable than many of the knowledge domains. Given the diversity of subject areas in the law, this is not

surprising. At the same time, it may also suggest that a greater emphasis on skills could be supported in the

future. To answer this question, further study is warranted. This additional study would begin with a program

design that leads to a job analysis for the practice of law in California. As an examination intended to inform a

licensure decision, the focus of the measurement of the examination needs to be on practice and not on the

education or training programs. Through this combination of program design and job analysis, results would

inform and provide evidence for decisions about the breadth and depth of measurement on the examination

along with the relative emphasis (e.g., weighting) of different components.

While the results of this study provided evidence to support the current iteration of the examination, there

are also formative opportunities for the program to consider in a program redesign. Specifically, the current

design and format for the California Bar Examination has been in place for many years. Feedback from the

content validation panelists suggested that there are likely subject areas that could be eliminated or

consolidated to better represent important areas needed by all entry-level practitioners.

To briefly reiterate an example described above, from a design perspective, it may be desired to define the

components of the examination as a combination of a candidate’s competency in federal law, California-

specific law, and job-related lawyer skills. Further, if the MBE continues to be included as part of the

California Bar Examination, it would be important to be able to review the items on a recently operational

form (or forms) of the test to independently evaluate the content and cognitive complexity of the items. If

California is unable to critically review this component of its program, it should prompt questions about

whether it is appropriate to continue to include it as part of its examination.

Similarly, such a redesign activity would offer the program an opportunity to evaluate the assessment item

types of the examination (e.g., multiple choice, short answer, extended response), scoring policies and

practices for human scored elements (e.g., rubric development, calibration, evaluation of graders), alternative

administration methods for components (e.g., linear on the fly, staged adaptive, item level adaptive), and

alternative scoring methods for constructed response (e.g., automated essay scoring). Advances in testing

practices and technologies as well as the evolution of the practice of law since the last program design activity

suggest that this interim study may prompt additional research questions. As an additional resource about

the current practices within credentialing programs, interested readers are encouraged to consult Davis-

Becker and Buckendahl (2017) or Impara (1995).

For licensure examination programs, the primary evidence used to define content specifications and to support

content validity comes from the results of a job analysis that provides information about the

knowledge, skills, and abilities for entry-level practitioners. Although the results of the 2012 NCBE job analysis

were used for this study, it would be appropriate for the program to conduct a state-specific study as is done

for other occupations in California to then be used to develop and support a blueprint for the examination.

The specifications contained in the blueprint are intended to ensure consistent representation of content and

cognitive complexity across forms of the examination. This would strengthen the content evidence for the

program and provide an opportunity for demonstrating a direct link between the examination and what

occurs in practice. These two activities – program design and job analysis – should be considered as priorities

with additional redevelopment and validation activities (e.g., content development, content review, pilot

testing, psychometric analysis, equating) occurring as subsequent activities.

Recognizing the interrelated aspects of validation evidence for testing programs, it is valuable to interpret the

results of this study and its potential impact on the recently conducted standard setting study for the

California Bar Examination. Specifically, the results of the content validation study suggested that most of the

content on the examination was important for entry-level practice without substantive gaps in what is

currently measured on the examination compared with what is expected for practice. However, if the

examination is revised in the future, it would likely require revisiting the standard setting study.

References

Bloom, B., Engelhart, M., Furst, E., Hill, W., & Krathwohl, D. (1956). Taxonomy of educational objectives: The

classification of educational goals. Handbook I: Cognitive domain. New York, Toronto: Longmans,

Green.

Clauser, A. L. & Raymond, M. (2017). Specifying the content of credentialing examinations. In S. Davis-Becker

and C. Buckendahl (Eds.), Testing in the professions: Credentialing policies and practice (pp. 64-84).

New York, NY: Routledge.

Davis-Becker, S. & Buckendahl, C. W. (Eds.). (2017). Testing in the professions: Credentialing policies and

practice. New York, NY: Routledge.

Davis-Becker, S. & Buckendahl, C. W. (2013). A proposed framework for evaluating alignment studies.

Educational Measurement: Issues and Practice, 32(1), 23-33.

Impara, J. C. (Ed.). (1995). Licensure testing: Purposes, procedures, and practices. Lincoln, NE: Buros Institute of

Mental Measurements.

Kane, M. T. (2006). Validation. In R. L. Brennan (Ed.), Educational measurement (4th ed., pp. 17-64). Westport,

CT: American Council on Education and Praeger.

Webb, N. L. (1997). Criteria for alignment of expectations and assessments in mathematics and science

education (Council of Chief State School Officers and National Institute for Science Education Research

Monograph No. 6). Madison, WI: University of Wisconsin, Wisconsin Center for Education Research.

Appendix A – Panelist Information

Content Validity Panelists.xlsx

Last Name First Name City Role Years in Practice

Baldwin-Kennedy Ronda

Barbieri Dean

Cramer Mark

Dharap Shounak

Gramme Bridget

Jackson Yolanda

Layon Richard

Lozano Catalina

Maio Dennis

Shultz Marjorie

Appendix B – Content Validation Materials and Data

The documentation used in the standard setting is included below.

Overview of Content Validation 1

Cal Bar Content Validation Worksho

Cal Bar Content Validation Worksho

Cal Bar Content Validation Worksho

NCBE Job Analysis Summary 2013

O*NET Summary for Lawyers

California Bar Exam

Content Validation Workshop

Agenda

Tuesday, June 6

7:30 – 8:00 Breakfast

8:00 – 8:30 Introductions and Purpose of the Study

8:30 – 10:00 Initial training

Purpose and design of the California Bar Exam

Content validation judgments (Job Analysis/O*NET)

10:00 – 10:15 Break

10:15 – 11:45 DOK Ratings for knowledge, skills, and abilities (independent)

11:45 – 12:45 Lunch

12:45 – 2:15 DOK Ratings for knowledge, skills, and abilities (group consensus)

2:15 – 2:30 Complete first evaluation form

2:30 – 2:45 Break

2:45 – 3:45 Begin content validation judgments for first essay question (facilitated)

Review scoring rubric/criteria for the question

Evaluate content and cognitive complexity match

3:45 – 4:00 Break

4:00 – 4:45 Continue content validation judgments for first essay question (facilitated)

4:45 – 5:00 Complete second evaluation form

Wednesday, June 7

8:00 – 8:30 Breakfast

8:30 – 9:30 Begin content validation judgments for second/fourth essay question

(independent within subgroup)

Review scoring rubric/criteria for the question

Evaluate content and cognitive complexity match

9:30 – 10:15 Discuss initial content validation judgments for second/fourth essay question

(subgroup)

10:15 – 10:30 Break

10:30 – 11:30 Continue content validation judgments for third/fifth essay question

(independent)

11:30 – 12:15 Discuss initial content validation judgments for third/fifth essay question

(subgroup)

12:15 – 1:00 Lunch

1:00 – 2:15 Begin content validation judgments for performance task (independent)

Review scoring rubric/criteria for the question

Evaluate content and cognitive complexity match

2:15 – 2:30 Break

2:30 – 3:30 Discuss initial validation judgments for performance task (group)

3:30 – 3:45 Break

3:45 – 4:45 Begin judgments for MBE Subject Matter Outline – content focus (independent)

4:45 – 5:00 Complete third evaluation form

Thursday, June 8

8:00 – 8:30 Breakfast

8:30 – 9:30 Continue judgments for MBE Subject Matter Outline – content focus

(independent)

9:30 – 9:45 Break

9:45 – 10:45 Discuss judgments for MBE Subject Matter Outline (group)

10:45 – 11:00 Break

11:00 – 11:45 Continue discussing judgments for MBE Subject Matter Outline

11:45 – 12:00 Complete fourth evaluation form

Evaluation – 1 Content Validation Workshop

The purpose of this evaluation is to get your feedback about the various components of the content

validation workshop. Please do not put your name on this evaluation form. The information from this

evaluation will be used to improve future projects. Thank you!

Training

The training consisted of several components: orientation to the workshop, overview of alignment,

discussion of cognitive complexity levels, and training on the rating process.

1. Using the following scale, please rate the success of each training component:

Rating of Training Success

Training Components               Very Unsuccessful             Very Successful
a. Orientation to the workshop            1           2           3           4
b. Overview of alignment                  1           2           3           4
c. Discussion of DOK levels               1           2           3           4
d. Rating process                         1           2           3           4

2. How would you rate the amount of time allocated to training?

a. Too little time was allocated to training.

b. The right amount of time was allocated to training.

c. Too much time was allocated to training.

Cognitive Complexity Ratings of Job Analysis/O*NET KSAs

3. How confident were you about the cognitive complexity ratings you made?

a. Very Confident

b. Somewhat Confident

c. Not Very Confident

d. Not at all Confident

4. How did you feel about the time available to make your cognitive complexity ratings?

a. More than enough time was available

b. Sufficient time was available

c. Barely enough time was available

d. There was not enough time available

5. Please provide any comments about the training or cognitive complexity ratings that would help in

planning future workshops.

California Bar Exam Content Validation June 6-8, 2017

Evaluation – 2 Content Validation Workshop

Day 1 Content Validity Judgments

6. How confident were you about your Day 1 judgments of content validity for the California Bar

Exam?

a. Very Confident

b. Somewhat Confident

c. Not Very Confident

d. Not at all Confident

7. How did you feel about the time allocated for making these judgments?

a. More than enough time was available

b. Sufficient time was available

c. Barely enough time was available

d. There was not enough time available

8. Please provide any comments about the Day 1 content validity activities that would be helpful in

planning future workshops.

Evaluation – 3 Content Validation Workshop

Day 2 Evaluation of Essay Questions and Performance Task

9. How confident were you about your Day 2 judgments of content validity for the California Bar

Exam?

a. Very Confident

b. Somewhat Confident

c. Not Very Confident

d. Not at all Confident

10. How did you feel about the time allocated for making these judgments?

a. More than enough time was available

b. Sufficient time was available

c. Barely enough time was available

d. There was not enough time available

11. Please provide any comments about the Day 2 rating activities that would be helpful in planning future

workshops.

Evaluation – 4 Content Validation Workshop

Day 3 Evaluation of Content Outline for the MBE