HAL Id: tel-03164744

https://theses.hal.science/tel-03164744

Submitted on 10 Mar 2021

HAL is a multi-disciplinary open access

archive for the deposit and dissemination of sci-

entic research documents, whether they are pub-

lished or not. The documents may come from

teaching and research institutions in France or

abroad, or from public or private research centers.

L’archive ouverte pluridisciplinaire HAL, est

destinée au dépôt et à la diusion de documents

scientiques de niveau recherche, publiés ou non,

émanant des établissements d’enseignement et de

recherche français ou étrangers, des laboratoires

publics ou privés.

Challenges of native android applications : obfuscation

and vulnerabilities

Pierre Graux

To cite this version:

Pierre Graux. Challenges of native android applications : obfuscation and vulnerabilities. Cryptogra-

phy and Security [cs.CR]. Université Rennes 1, 2020. English. �NNT : 2020REN1S047�. �tel-03164744�

THÈSE DE DOCTORAT DE

L’UNIVERSITE DE RENNES 1

COMUE UNIVERSITE BRETAGNE LOIRE

ECOLE DOCTORALE N° 601

Mathématiques et Sciences et Technologies

de l’Information et de la Communication

Spécialité : Informatique

Par

Pierre Graux

Challenges of Native Android Applications:

Obfuscation and Vulnerabilities

Thèse présentée et soutenue à Lieu, le 10 décembre 2020

Unité de recherche : IRISA

Thèse N° :

Rapporteurs avant soutenance :

Guillaume Bonfante Maître de Conférences HDR, Université de Lorraine

Christian Rossow Professor, Saarland University

Composition du Jury :

Examinateurs : Marie-Laure Potet Professeur des Universités, Grenoble INP

Erven Rohou Directeur de Recherche, Inria

Sarah Zennou Research engineer, Airbus

Dir. de thèse : Valérie Viet Triem Tong Professeur, CentraleSupelec

Co-dir. de thèse : Jean-François Lalande Professeur des Universités, CentraleSupélec

Encadrant : Pierre Wilke Enseignant Chercheur, CentraleSupélec

Abstract

Android is the most used operating system and thus, ensuring security for its applications is an essential task.

Securing an application consists in preventing potential attackers to divert the normal behavior of the targeted

application. In particular, the attacker may take advantage of vulnerabilities left by the developer in the

code and also tries to steal intellectual property of existing applications. To slow down the work of attackers

who try to reverse the logic of a released application, developers are incited to track potential vulnerabilities

and to introduce countermeasures in the code. Among the possible countermeasures, the obfuscation of

the code is a technique that hides the real intent of the developer by making the code unavailable to an

adversary using a reverse engineering tool. Mobile applications are complex entities that can be made of

both bytecode and assembly code. This creates new opportunities to enhance obfuscation techniques, and

also makes deobfuscation a more difficult challenge.

Obfuscating and deobfuscating programs have already been widely studied by the research community,

especially for desktop architecture. For mobile devices, ten years after the first release of Android, researchers

have mainly worked on the deobfuscation of the intermediate language, named Dalvik bytecode, executed by

the embedded virtual machine. Nevertheless, with the growing amount of malware and applications carrying

sensitive information, attackers want to hide their intents and developers want to protect their intellectual

property and the integrity of their application. Thus, a new generation of obfuscation methods based on

native code has appeared. Studying the consequences of mobile native code has not – so far – received the

same amount of attention as desktop programs even though more than one third of the available applications

embed assembly code.

This thesis presents the impact of native code on both reverse-engineering and vulnerability finding applied

to Android applications. First, by listing the possible interferences between assembly and bytecode, we

highlight new obfuscation techniques and software vulnerabilities. Then, we propose new analysis techniques

combining static and dynamic analysis blocks, such as taint tracking or system monitoring, to observe the

code behaviors that have been obfuscated or to reveal new vulnerabilities. These two objectives have led us to

develop two new tools. The first one spots a specific vulnerability that comes from inconsistently mixing

native and Java data. The second one extracts the object level behavior of an application, regardless of whether

this application contains native code, embedded for obfuscation purposes. Finally, we implemented these

new methods and conducted experimental evaluations. In particular, we automatically found a vulnerability

in the Android SSL library and we analyzed several Android firmware to detect usage of a specific class of

obfuscation.

Publications

[1] Pierre Graux

, Jean-François Lalande, and Valérie Viet Triem Tong. ‘Etat de l’Art des Techniques

d’Unpacking pour les Applications Android’. In: Rendez-Vous de la Recherche et de l’Enseignement de la

Sécurité des Systèmes d’Information. La Bresse, France, May 2018.

[2]

Jean-François Lalande, Valérie Viet Triem Tong, Mourad Leslous, and

Pierre Graux

. ‘Challenges for

reliable and large scale evaluation of android malware analysis’. In: 2018 International Conference on

High Performance Computing & Simulation. Orléans, France: IEEE, July 2018, pp. 1068–1070.

[3] Pierre Graux

, Jean-François Lalande, and Valérie Viet Triem Tong. ‘Obfuscated Android Application

Development’. In: 3rd Central European Cybersecurity Conference. Munich, Germany: ACM, Nov. 2019,

pp. 1–6.

[4]

Valérie Viet Triem Tong, Cédric Herzog, Tomàs Concepción Miranda,

Pierre Graux

, Jean-François

Lalande, and Pierre Wilke. ‘Isolating Malicious Code in Android Malware in the Wild’. In: 14th

International Conference on Malicious and Unwanted Software. Nantucket, MA, USA: IEEE, Oct. 2019.

[5]

Jean-François Lalande, Valérie Viet Triem Tong,

Pierre Graux

, Guillaume Hiet, Wojciech Mazurczyk,

Habiba Chaoui, and Pascal Berthomé. ‘Teaching android mobile security’. In: 50th ACM Technical

Symposium on Computer Science Education. Minneapolis MN, USA: ACM, Feb. 2019, pp. 232–238.

[6]

Jean-François Lalande,

Pierre Graux

, and Tomás Concepción Miranda. ‘Orchestrating Android

Malware Experiments’. In: 27th IEEE International Symposium on the Modeling, Analysis, and Simulation

of Computer and Telecommunication Systems. Rennes, France: IEEE, Oct. 2019, pp. 1–2.

[7] Pierre Graux

, Jean-François Lalande, Pierre Wilke, and Valérie Viet Triem Tong. ‘Abusing Android

Runtime for Application Obfuscation’. In: Workshop on Software Attacks and Defenses. Genova, Italy:

IEEE, Sept. 2020, pp. 616–624.

[8] Pierre Graux

, Jean-François Lalande, Valérie Viet Triem Tong, and Pierre Wilke. ‘Preventing Serializa-

tion Vulnerabilities through Transient Field Detection’. In: 36th ACM/SIGAPP Symposium On Applied

Computing. Gwangju, Korea: ACM, Mar. 2021, pp. 1598–1606.

Acknowledgments - Remerciements

First and foremost, I would like to thank a lot Valérie Viet Triem Tong, Jean-François Lalande and Pierre

Wilke, who have advised this thesis. They were always available to give great advises. I can measure the luck

to have them as advisors. They taught me to look at problems as a researcher and no longer as an engineer.

I would like to give a special thank to Marie-Laure Potet, who helped me when looking for a thesis and drove

me to find the CIDRE team. I made my first step in the research world with her, I wouldn’t have carried this

thesis without her help. I am glad she accepted to examine it and I hope it will fulfill her expectations.

I would like also to thank Christian Rossow and Guillaume Bonfante, who have accepted to review my thesis

and helped me to improve it, and Erven Rohou and Sarah Zennou, who have followed my work annually

during each Comité de Suivi Individuel (CSI) where they gave me useful and relevant advises. By giving me

part of their time they have allowed me to expose my ideas to benevolent external point of views.

Last but not least, I would like to thank all my relatives, my friends and my colleagues. Despite giving

technical advises, they gave me moral support, necessary to conduct research work which can, sometimes, be

frustrating.

Contents

Abstract iii

Publications v

Acknowledgments - Remerciements vii

Contents ix

Glossary xvii

Acronyms xviii

Prologue 1

1 Introduction 3

1.1 Problem statement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.1 Android core security features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.2 Challenges in analyzing native applications . . . . . . . . . . . . . . . . . . . . . . . . 5

1.2 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3 Outline . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

2 Analyzing native Android applications: state of the art 9

2.1 Research goals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.2 Android application datasets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.3 Analysis techniques . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.3.1 System side-effects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.3.2 Application metadata . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.3.3 Bytecode level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.3.4 Framework, runtime and system level . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.4 Challenges implied by native applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.5 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Security issues introduced by native interferences in Java Android applications 21

3 Security issues introduced by interferences in Java code 23

3.1 Modification of the Java bytecode by the native code . . . . . . . . . . . . . . . . . . . . . . . 24

3.1.1 Full unpacking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.1.2 Unpacked bytecode hiding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.1.3 Partial unpacking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

3.1.4 Android framework bypassing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.2 Replacement of the Java bytecode by native code . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.2.1 Bytecode compiler used . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.2.2 Types of bytecode modifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.2.2.1 Removing the bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2.2.2 Replacing the bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2.2.3 Modifying the bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2.2.4 Comparison of the three bytecode modification sub-techniques . . . . . . . . 29

3.3 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4 Security issues introduced by interferences in Java data 31

4.1 Injection of native data in Java data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

4.1.1

CVE-2015-3837: Example of a vulnerable transient field in an open source cryptography

library . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.1.2 Formal definition of problematic transient fields . . . . . . . . . . . . . . . . . . . . . . 35

4.2 Modification of Java data by native code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

4.2.1 Legitimate implementation: DirectByteBuffer . . . . . . . . . . . . . . . . . . . . . . . 36

4.2.2 Naive implementation: memory lookup . . . . . . . . . . . . . . . . . . . . . . . . . . 37

4.2.3 Advanced implementation: reflection . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

4.3 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Detection of native interferences in Java Android applications 41

5 Static detection of native interferences in Java code 45

5.1 Detecting native interferences in DEX files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

5.2 Detecting native interferences in OAT files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

5.2.1 Detecting removed bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

5.2.2 Detecting nopped bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

5.2.3 Detecting replaced bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

5.2.3.1 Future work on detecting BFO usage . . . . . . . . . . . . . . . . . . . . . . . 50

5.3 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

6 Detection of native interferences in Java data 53

6.1 Taint-analysis across native and Java interface . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

6.2 Analyzing dataflow between native and Java at the source code level . . . . . . . . . . . . . . 54

6.2.1 Statically detecting reference fields in source code . . . . . . . . . . . . . . . . . . . . . 55

6.2.1.1 Reference field patterns in source code . . . . . . . . . . . . . . . . . . . . . . 55

6.2.1.2 Static patterns detection using taint analysis . . . . . . . . . . . . . . . . . . . 57

6.2.1.3 Static analysis limitations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

6.2.2 Analysis architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

6.2.3 Validation of the detection method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

6.2.3.1 Constructive validation of the patterns . . . . . . . . . . . . . . . . . . . . . . 61

6.2.3.2 Catching known errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

6.2.3.3 Checking a large open-source application . . . . . . . . . . . . . . . . . . . . 62

6.2.3.4 Analysis time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

6.3 Monitoring the interface between Java and native code at the execution time . . . . . . . . . . 63

6.4 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Reverse-engineering of multi-language Android applications 67

7 OATs’inside: Retrieving behavior of multi-language applications 71

7.1 Adapting instrumentation system to multi-language applications . . . . . . . . . . . . . . . . 71

7.2 OATs’inside architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

7.2.1 Runner module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

7.2.2 CFG creator module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

7.2.3 Memory Dumper module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

7.2.4 Concolic analyzer module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

7.2.5 Unit test results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

7.3 OATs’inside output on obfuscated application . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

7.3.1 Obfuscated application presentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

7.3.2 OATs’inside output on the application . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

7.3.3 Final words on OATs’inside output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

7.4 Performance overhead . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

7.5 OATs’inside stealthiness . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

7.6 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

8 OATs’inside: implementation challenges and solutions 93

8.1 Runner and Memory Dumper modules: libart modifications . . . . . . . . . . . . . . . . . . 93

8.1.1 Analysis initialization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

8.1.2 Communication channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

8.1.3 Methods white-listing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

8.1.4 Garbage Collector internal structures browsing and resolution caching . . . . . . . . . 95

8.1.5 Signal handlers management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

8.1.6 Single-stepping and atomic instructions management . . . . . . . . . . . . . . . . . . . 96

8.1.7 Multi-thread management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

8.2 CFG Creator module: NetworkX implementation . . . . . . . . . . . . . . . . . . . . . . . . . 98

8.3 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Epilogue 103

9 Conclusion 105

9.1 Thesis contribution summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

9.2 Perspectives for future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

Appendices 109

A List of tested firmwares 111

B Java behavior unit tests 113

B.1 unobfuscated unit tests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

B.2 Obfuscated unit tests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

C Concolic analysis functioning proof 123

Résumé substantiel en français 129

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

1.1 Sécurité du système Android . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

1.2 Applications natives et sécurité . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

1.3 Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

2 Problèmes de sécurité induits par les interférences natives dans les applications Android Java 131

2.1 Problèmes portant sur le bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

2.2 Problèmes portant sur les données du bytecode . . . . . . . . . . . . . . . . . . . . . . 132

3 Détection des interférences natives dans les applications Android Java . . . . . . . . . . . . . 132

3.1 Détection des interférences sur bytecode . . . . . . . . . . . . . . . . . . . . . . . . . . 132

3.2 Détection des interférences sur les données du bytecode . . . . . . . . . . . . . . . . . 133

4 OATs’inside: rétro-ingénierie des applications Android natives . . . . . . . . . . . . . . . . . . 134

5 Travaux futurs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Bibliography 137

List of Figures

1.1 Exploitable window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

2.1 Ratio of recognized malware by at least x antivirus . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.2 Classical representation of Android system architecture . . . . . . . . . . . . . . . . . . . . . . . . 16

3.1 Packing technique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.2 Bytecode Free OAT technique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.3 Bytecode modification example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

3.4 Classification of Bytecode Free OAT (BFO) sub-techniques . . . . . . . . . . . . . . . . . . . . . . 29

4.1 Address space of processes serializing X509 Certificate . . . . . . . . . . . . . . . . . . . . . . . . 33

4.2 Set view of targeted problem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

4.3 Direct Heap Access (DHA) technique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

4.4 Memory layout of Java objects in Android . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

5.1 Bytecode entropy for methods of AOSP Android 7.0 APKs . . . . . . . . . . . . . . . . . . . . . . 48

6.1 Set view of targeted problem, Reminder of Figure 4.2 Section 4.1 . . . . . . . . . . . . . . . . . . . 55

6.2 Architecture overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

6.3 Reference fields in Android application source code . . . . . . . . . . . . . . . . . . . . . . . . . . 56

6.4 Representation of reference flow tracking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

6.5 OpenSSLX509Certificate Java analysis output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

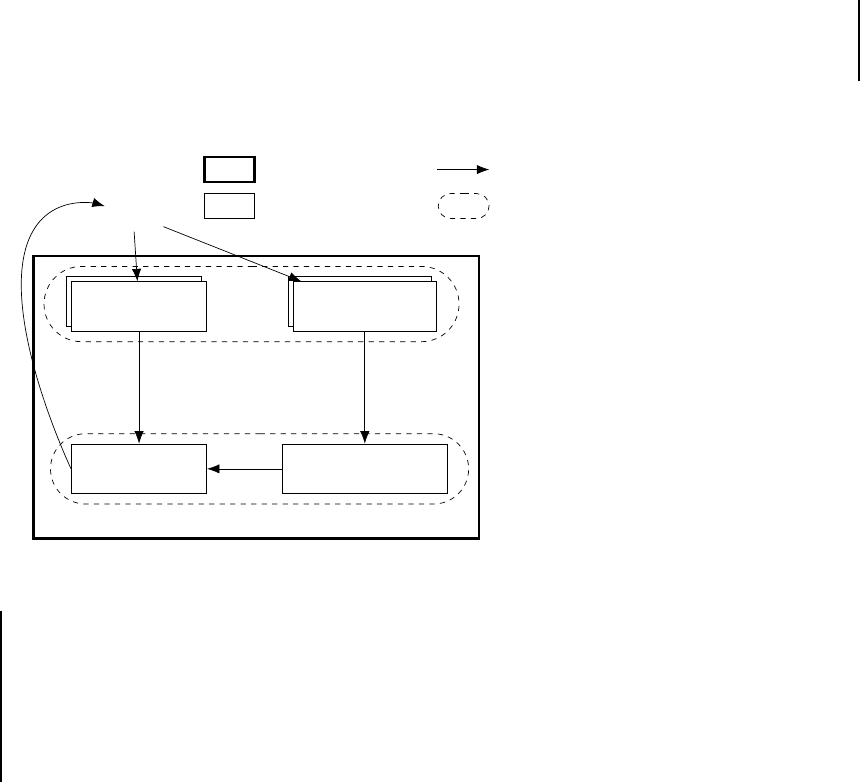

7.1 OATs’inside architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

7.2 Expected output for SimpleTestPIN.test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

7.3 Runtime patch architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

7.4 Method invocation hooking process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

7.5 Object-level control flow graph of SimpleTestPIN.test . . . . . . . . . . . . . . . . . . . . . . . . 80

7.6 Object-level control flow graph of SimpleTestPIN.test . . . . . . . . . . . . . . . . . . . . . . . . 83

7.7 Extract of MainActivity$1.onClick olCFG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

7.8 SimpleTestPIN.set

_

pin olCFG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

7.9 SimpleTestPIN.test olCFG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

7.10 SimpleTestPIN.set

_

pin olCFG after symbolic analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

7.11 SimpleTestPIN.test olCFG after symbolic analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

7.12 Runner module overhead with No. of actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

B.1 Non obfuscated testConditionObjectEq method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

B.2 Obfuscated testConditionObjectEq method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

1 Bytecode Free OAT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

2 Direct Heap Access (DHA) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

3 Représentation du suivi des flux de références mémoires . . . . . . . . . . . . . . . . . . . . . . . 134

4 Architecture de OATs’inside . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

List of Tables

1.1 Number of CVE containing the keyword Android . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 Extract of security additions in Android system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

4.1 Interferences between native code and Java code, in an Android application . . . . . . . . . . . . 43

5.1 Packer detection for various datasets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

5.2 Packer detection evolution inside AndroZoo . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

5.3 Nopped methods in firmware dataset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

5.4 Difference percentage for one firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

6.1 Sources and sinks for detecting reference field patterns . . . . . . . . . . . . . . . . . . . . . . . . 56

6.2 Flows reported for Telegram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

6.3 Analysis time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

6.4 Number of Direct Heap Accesses (DHAs) detected . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

6.5 Classes and libraries detected to be using DHA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

7.1 State-of-the-art solutions against native obfuscations . . . . . . . . . . . . . . . . . . . . . . . . . . 72

7.2 Monitoring of object actions for different types of executed code . . . . . . . . . . . . . . . . . . . 76

7.3 OATs’inside against native obfuscations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

7.4 Time overhead and number of actions/events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

7.5 Dump size depending on the APK size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

A.1 List of tested firmwares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

1 Nombre de CVE contenant le mot-clef “Android” . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

2 Détection de packing dans plusieurs datasets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

3 Détection de packing selon les années . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

4 Nombre de DHAs détectés . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

List of Listings

4.1 conscrypt/src/main/java/org/conscrypt/OpenSSLX509Certificate.java . . . . . . . . . . . . 34

4.2 conscrypt/src/main/native/org_conscrypt_NativeCrypto.cpp . . . . . . . . . . . . . . . . . 34

4.3 boringssl/src/crypto/asn1/tasn_fre.c . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.4 boringssl/src/include/openssl/x509.h . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

4.5 DHA using DirectByteBuffer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

4.6 Retrieving DirectByteBuffer address without JNI . . . . . . . . . . . . . . . . . . . . . . . . 37

4.7 DHA using memory lookup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

4.8 Retrieving field offset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

4.9 DHA using reflection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

5.1 Example of false positive for nopped bytecode search . . . . . . . . . . . . . . . . . . . . . . . 48

7.1 Simplified PIN test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

7.2 Runner module output on SimpleTestPIN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

7.3 Concolic Analyzer module output on SimpleTestPIN.test . . . . . . . . . . . . . . . . . . . 84

7.4 Unobfuscated activity code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

7.5 SimpleTestPIN unobfuscated Java code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

7.6 SimpleTestPIN unobfuscated C++ code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

7.7 List of executed method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

B.1 JNI test cases helpers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

B.2 Java method test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

B.3 JNI method test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

B.4 Java allocation test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

B.5 JNI allocation test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

B.6 Java access test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

B.7 JNI access test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

B.8 Java operations test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

B.9 JNI operations test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

B.10 Java condition test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

B.11 JNI condition test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

B.12 Java typing test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

B.13 JNI typing test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

B.14 Java exception test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

B.15 JNI exception test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

B.20 Application compilation command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

B.21 Obfuscator-LLVM command line . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

B.16 monitor test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

B.17 JNI exception test cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

B.18 DEX file location . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

B.19 Packer decode method . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

B.22 Example of DHA unit test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

List of Algorithms

6.1 C/C++ analyzer taint management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

8.1 Create an iCFG and separate events between methods. . . . . . . . . . . . . . . . . . . . . . . . 98

8.2 Create olCFG for a given method. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

8.3 Remove useless blank nodes from an olCFG. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

C.1 Get taken conditions algorithm. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

Glossary

Allocator

Mechanism in charge of reserving and freeing memory areas. 4.0.0, 7.2.1

AOSP

Stands for Android Open Source Project, open source code of Android. 2.3.4, 3.1.0, 4.2.3, 5.2.0, 5.2.2,

7.4.0, 7.6.0, 8.1.0, 8.3.0, 9.2.0, 0.0

Bytecode Free OAT

Android obfuscation technique which consists in deleting bytecode from OAT files. 3.2.1, 3.2.2, 3.3.0,

4.2.0, 5.0.0, 5.2.0, 5.2.1, 5.2.3, 5.3.0, 6.4.0, 7.1.0, 7.2.0, 7.2.5, 7.3.0, 7.3.3, 9.1.0, B.0.0, B.2.0, 0.0

Common Vulnerabilities and Exposures

System that list publicly known compter security vulnerabilties and exposures. By extension, a publicly

known compter security vulnerabiltie or exposure. 1.1.1, 4.1.1, 6.2.0, 0.0

Concolic Analysis

Symbolic analysis that relies on values obtained during a classical execution called concrete execution.

7.2.0, C.0.0

Concrete (value/execution)

Execution, or values obtained during this execution, that is used during a concolic analysis. 2.3.3, 7.2.4,

C.0.0

Control Flow Graph

Graph that represents how the different instructions of code can be chained. Nodes are instructions and

two nodes are connected by a directed edge if the destination node can be executed after the source

node. 1.3.0, 2.1.0, 7.2.0, 7.2.2, 7.2.4, 7.2.5, 8.2.0, 9.1.0, B.2.0

Dataflow

Dependency between the variables of a code. 2.3.3, 6.0.0, 6.2.0

DEX

Stands for Dalvik EXecutable, file format used to store Dalvik bytecode. 2.3.3, 3.1.0–3.1.4, 3.2.1, 3.2.2,

4.3.0, 5.0.0, 5.1.0, 5.2.0, 5.2.3, 5.3.0, 7.1.0, 7.2.1, 7.2.5, 7.4.0, B.0.0, B.1.0, B.2.0, 2.1, 0.0

Direct Heap Access

Android obfuscation technique which consists in modifying Java values using native code without the

help of Java Native Interface (JNI). 4.2.0–4.2.3, 4.3.0, 6.0.0, 6.3.0, 6.4.0, 7.1.0, 7.2.1, 7.2.5, 7.3.0, 7.3.1, 7.3.3,

9.1.0, B.0.0, B.2.0, 0.0

Dynamic Analysis

Analysis that do run the analysed code. 3.2.0, 6.0.0, 7.2.0, 7.2.4

Firmware

Set of files installed on a smartphone by the smartphone constructor. It includes, among other, the

Android system and the defaults application. 2.2.0, 3.2.1, 5.2.0–5.2.3, 5.3.0, 9.1.0, A.0.0, 0.0

Heap

Memory area where allocated entities are stored including objects. 4.1.1, 4.2.0–4.2.3, 6.1.0, 6.3.0, 6.4.0,

7.1.0, 7.2.1, 7.3.2, 7.4.0, 8.1.3, 8.1.4, 8.1.6, 8.1.7, B.1.0, B.2.0

Manifest

File that describes an application. It contains, among other, requiered permissions or activities and

services classes name. 2.1.0, 2.3.2, 5.1.0

Nopping

Replacing instrucions by No-OPerations, that is by instructions that have no special effects. 3.2.2, 5.2.2,

5.3.0, 9.1.0

OAT

Unknown acronym, file format used to store compiled Dalvik bytecode. 2.4.0, 3.2.1, 3.2.2, 4.3.0, 5.0.0,

5.2.0, 5.2.3, 5.3.0, 7.2.1, 7.2.5, 7.3.1, 7.4.0, B.2.0, 2.1, 0.0

Packer

Tool that ciphers all or part of a program code without modifying its overall behavior. 3.1.0–3.1.2, 3.1.4,

5.0.0, 5.1.0, 6.4.0, 0.0

Runtime

Android library in charge of runtime the applications. 1.1.1, 2.1.0, 2.3.3, 2.3.4, 2.4.0, 3.2.0, 4.0.0, 4.2.0,

4.2.3, 5.0.0, 6.0.0, 6.3.0, 7.0.0, 7.1.0, 7.2.0, 7.2.1, 7.2.5, 7.4.0, 8.0.0, 8.1.0–8.1.2, 8.1.4, 8.3.0, 0.0

Serialization

Process of converting an object into a stream of bytes. 4.1.0, 4.1.2, 6.2.3, 0.0

Stack

Memory area where variables and parameters may be stored. 7.1.0, 7.2.2, 7.2.5, 7.3.3, 8.1.3, B.1.0

Static Analysis

Analysis that does not run the analysed code. 2.1.0, 2.3.0, 2.3.3, 2.4.0, 6.2.1, 7.0.0, 7.1.0, 7.2.5, 9.1.0

Symbolic Analysis

Analysis that consists in observing the execution of a code by replacing value by abstract values called

symbols and evaluating the code instructions in the abstract value space. 1.2.0, 7.0.0, 7.1.0, 7.2.4, 7.3.2,

9.1.0, C.0.0, 0.0

Taint Analysis

Analysis that consists in checking if some values, generated by expressions called sources, can reach

expressions called sinks. 2.3.3, 2.3.4, 2.4.0, 4.1.2, 6.1.0, 6.2.1, 6.2.2

Transient

Java field keyword that indicates that the qualified field is not pat of the serialization process. 4.1.0–4.1.2,

6.0.0, 6.2.0, 6.2.1, 6.2.3

Unpacker

Tool that reverses the obfuscation made by a packer. 3.1.0–3.1.4

Acronyms

ABI Application Binary Interface.

AIDL Android Interface Definition Language.

AOSP Android Open Source Project.

AOTC Ahead Of Time Compilation.

ASLR Address Space Layout Randomization.

AST Abstract Syntax Tree.

BFO Bytecode Free OAT.

CFG Control Flow Graph.

CVE Common Vulnerabilities and Exposures.

DEX Dalvik EXecutable.

DHA Direct Heap Access.

GC Garbage Collector.

IPC Inter-Process Communication.

JGRE Java Global Reference Exhaustion.

JIT Just In Time.

JNI Java Native Interface.

MMU Memory Management Unit.

NDK Native Development Kit.

PIE Position Independent Executable.

SLOC Single Line Of Code.

VM Virtual Machine.

Prologue

1: Common Vulnerabilities and Expo-

sures, publicly known vulnerabilities

2:

https://zerodium.com/program.

html

3: Address Space Layout Randomization

4: Position-Independent Executable

Introduction 1

1.1 Problem statement

1.1.1 Android core security features

Android is the prevalent operating system for modern smartphones.

Due to the tremendous number of users, Android has attracted lots of

malicious activities [9]

[9]: Mohamed and Patel (2015), ‘Android

vs iOS security: A comparative study’

. As shown in Table 1.1, since the release of the

first version of Android, vulnerabilities are searched and found in this

system. More than six thousand CVEs

1

contain the keyword “Android”.

Very critical vulnerabilities, such as Full Chain with Persistence (FCP)

zero click, can be sold for more than $2,500,000

2

.

< 2010 2010 2011 2012 2013 2014 2015 2016 2017 2018 2019 2020

18 23 89 169 123 1686 422 872 1191 457 771 528

Source: https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=Android

Table 1.1:

Number of CVE containing the

keyword Android

Because of the increasing number of Android users, and the increas-

ing number of applications handling sensitive information, Google has

brought a lot of attention to securing the Android platform. Indeed, they

have adopted a security-oriented architecture for running Android appli-

cations that mainly relies on two core features:

application sandboxing

and

permission management

. Each application is run by a dedicated

Unix user, allowing application isolation using tried-and-tested kernel

mechanisms. Additionally, applications cannot access device features

without owning specific capabilities called permissions. These permis-

sions are reviewed and granted by the user himself. For example, an

application will be able to send SMSs only if it has been granted the

SEND

_

SMS

permission. Furthermore, each new version of Android comes

with specific security features. Table 1.2 shows an excerpt of security

features added in each new Android version. In this table, we can see that

features target all aspects of security. For example, hardening techniques

such as ASLR

3

or PIE

4

have been added to the system. The operating sys-

tem constrains the accesses to resources using SELinux mandatory access

control. Network communications such as DNS queries are ciphered.

However, securing the whole Android system is not enough. Indeed,

applications installed by the user are potentially malicious or vulnerable.

An application is considered vulnerable if it can be diverted into per-

forming malicious operations. Since Android is a system built for mobile

platforms, these operations can differ from desktop ones [10, 11]

[10]: Faruki, Bharmal, Laxmi, Ganmoor,

Gaur, Conti, and Rajarajan (2014), ‘An-

droid security: a survey of issues, malware

penetration, and defenses’

[11]: Sadeghi, Bagheri, Garcia, and Malek

(2016), ‘A taxonomy and qualitative com-

parison of program analysis techniques for

security assessment of android software’

. We can

cite as notable malicious operations:

I

Premium SMS services: an application can send SMS to premium

services, i.e. services that include fees.

I

Privilege escalation: an application can exploit system vulnerabili-

ties to perform actions while not being granted the corresponding

permission.

4

1 Introduction

Table 1.2:

Extract of security additions in

Android system

Android

version

Release

date

Added feature

4.0 Oct. 2011

Address Space Layout Randomization

(ASLR) support

4.1 Jul. 2012

Position Independent Executable (PIE)

support

4.1 Jul 2012

Read only relocation (RelRO) binaries

4.2 Nov. 2012

SELinux support

4.3 Jul. 2013

SELinux enabled by default

4.4 Oct. 2013

SELinux set in enforcing mode

5.0 Nov. 2014

Support of non-PIE executable dropped

6.0 Oct. 2015

App permissions granted at runtime

9 Aug. 2018

DNS over TLS

Source: https://en.wikipedia.org/wiki/Android

_

version

_

history

I

Permission leakage, colluding applications: an application can

perform privileged operations when requested by other applica-

tions and omit to check requester permissions. If the omission is

intentional, the application is colluding.

I

Privacy leakage: an application can steal users’ private data such

as SMS contents or contact list, or spy on the user by, for example,

recording the microphone.

I

Ransomware: an application can make smartphone data, such as

pictures or contacts, unavailable by ciphering them and ask money

from user in exchange for the stolen data.

I

Application cloning: an application can copy the code of another

and replace the Google Ads ID of the real owner of the application

by its own in order to steal its wages.

I

Aggressive advertisement: an application can display numerous

advertisements by, for example, modifying the smartphone back-

ground or spawning pop-up windows.

I Botnet: an application can participate to massive network attacks.

I

Denial of Service: an application can stress resources such as the

CPU or the battery of the smartphone to make it unusable.

Unfortunately, relying on application isolation and permission restriction

to keep the user safe is not enough. Indeed, the permission system is

misunderstood and harmful permissions may be granted to malicious

applications [12, 13]

[12]: Felt, Ha, Egelman, Haney, Chin, and

Wagner (2012), ‘Android permissions: User

attention, comprehension, and behavior’

[13]: Benton,Camp, and Garg(2013),‘Study-

ing the effectiveness of android applica-

tion permissions requests’

. For example, in 2014, a fake copy of the eagerly

awaited video game Pokémon Go has been created and distributed to

countries where the official game was not released yet. The fake version,

which contained a malware called Droidjack, was installed by users

impatient to play the game and willing to grant any permission asked by

the application.

The security mechanisms provided by Android cannot prevent this type

of attack. Malicious or vulnerable application will eventually be installed

on some users’ smartphone. To minimize the impact of this phenomenon,

this thesis tackles the two following problems:

I

Detecting malicious or vulnerable applications in order to remove

them from the Google Play store.

1.1 Problem statement

5

5:

http://googlemobile.blogspot.

com/2012/02/android-and-security.

html

6: Formerly Android market

7:

https://www.blog.

google/products/android/

google-play-protect/

8: https://research.checkpoint.com/2020/google-

play-store-played-again-tekya-clicker-

hides-in-24-childrens-games-and-32-

utility-apps/

Time

New obfuscation

technique

New detection

technique

t

1

t

2

Safe Exploitable Safe

Figure 1.1: Exploitable window

I

Understanding the behavior of such applications in order to evalu-

ate the damage after a compromision.

Of course, Google already started, since 2012, to set up an automatic

service, called bouncer,

5

to address the malicious application detection

problem. Since the

bouncer

architecture is not public, we have no clue

on how and if the vulnerability detection problem is addressed.

This service scans applications available on the Google Play store

6

, the

Android application official repository, in order to find malicious and

unsafe applications. When detected, applications are removed from the

store, therefore preventing users from installing them. Improving on the

bouncer service, Google released Google Play Protect

7

in 2017. In addition

to the features provided by bouncer, this new service offers the possibility

to scan applications offline, i.e. observe the behavior of applications while

they are running directly on the users’ smartphone.

Despite all these efforts, some malicious applications still find their way

to the Google Play store,

8

and vulnerabilities are still found in Android

applications, as shown in Table 1.1.

We believe that one of the reasons that malicious and vulnerable

applications can still bypass analysis systems is the usage of native

code inside applications. This thesis focuses on this specific problem.

The following section explains why the presence of native code makes

code analysis more difficult for malicious or vulnerable application

detection.

1.1.2 Challenges in analyzing native applications

Problems involved in malware and vulnerability detection, such as

determining if a given program is equivalent to an other, are undecidable

in the general case [14]

[14]: Selçuk, Orhan, and Batur (2017), ‘Un-

decidable problems in malware analysis’

. Thus, countermeasures such as the bouncer or

Google Play Protect can only partially solve these problems. This keeps

the door open for malicious applications to hide from program analysis.

Similarly, perfect obfuscation techniques do not exist [15]

[15]: Beaucamps and Filiol (2007), ‘On the

possibility of practically obfuscating pro-

grams towards a unified perspective of

code protection’

, i.e. obfuscators

always leave information about the behavior of the original program.

Consequently, the race between malicious applications and analysis

services takes the form of a cat and mouse game: malicious applications

hide their intent using new techniques, analysis services adapt their

detection, and so on. Unfortunately, as shown in Figure 1.1, this race is in

favor of malicious applications since malware can take advantage of the

time that separate the usage of a new technique and its detection (

t

2

− t

1

).

Thus, computer security researchers should focus on reducing this time

by:

I

developing more general analysis (increasing

t

1

): this makes the

creation of new obfuscation techniques harder.

I

predicting future obfuscation techniques (decreasing

t

2

): thisallows

to faster adapt detection technique.

It is worth noting that this assessment also applies to new vulnerabilities

exploitation and detection.

6

1 Introduction

9:

https://developer.android.com/

training/articles/perf-jni

In this context, Android security researchers have shown that native

applications are more and more present in Google Play store and that

state-of-the-art tools should improve their analysis on this kind of appli-

cations [16–18]

[16]: Afonso, Geus, Bianchi, Fratantonio,

Kruegel, Vigna, Doupé, and Polino (2016),

‘Going Native: Using a Large-Scale Analy-

sis of Android Apps to Create a Practical

Native-Code Sandboxing Policy’

[17]: Tam, Feizollah, Anuar, Salleh, and

Cavallaro(2017), ‘The evolution of android

malware and android analysis techniques’

[18]: Sadeghi, Bagheri, Garcia, and Malek

(2017), ‘A taxonomy and qualitative com-

parison of program analysis techniques for

security assessment of android software’

.

Applications are traditionally written in Java or Kotlin, compiled into

bytecode and run by a Virtual Machine. This machine enforces the

correct execution of this bytecode as expected by the developer and is the

privileged interface for observing an execution. A native application is an

application that contains both Dalvik bytecode and assembly code. Due

to optimization purposes, Android supports applications that embed

assembly code obtained from, for example, C or C++ source code.

The usage of native code opens two new challenges:

I

Native code usage allows to highly obfuscate applications. Indeed,

the cat and mouse game for obfuscating and desobfuscating as-

sembly code is a well studied area since the seventies, that is way

older than Android. Thus, the attacker can easily adapt advanced

assembly obfuscation techniques and bypass analysis tools.

I

Native code usage may introduce vulnerabilities in applications.

The languages in which native code is typically written (C or C++)

are known to be error-prone. That is to say, it is easy for developers

using these languages to leave security vulnerabilities in their

programs. Indeed, contrary to Java/Kotlin, these languages do not

implement security mechanisms such as strong type verification

or security context execution. Then, allowing native code inside

Android applications drastically increases the attack surface for

malicious intents. Additionally, tips and best practices given by

Google for native Android application development

9

, are not

enforced when the applications are running. Native code and

bytecode run in the same context and the same address space[19,

20]

[19]: Sun and Tan (2014), ‘Nativeguard:Pro-

tecting android applications from third-

party native libraries’

[20]: Athanasopoulos, Kemerlis, Portoka-

lidis, and Keromytis (2016), ‘NaClDroid:

Native Code Isolation for Android Appli-

cations’

, which allows native code to interfere with bytecode.

In this thesis, we mimic the cat and mouse game by building obfuscation

techniques and exploiting vulnerable applications and in a second time,

proposing associated detection techniques and analysis tools. We limit

our study to the challenges linked to the usage of native code inside

Android applications.

1.2 Contributions

[1]:

Graux

, Lalande, and Viet Triem Tong

(2018), ‘Etat de l’Art des Techniques d’Unpacking

pour les Applications Android’

[2]: Lalande, Viet Triem Tong, Leslous, and

Graux

(2018), ‘Challenges for reliable and

large scale evaluation of android malware

analysis’

[3]:

Graux

, Lalande, and Viet Triem Tong

(2019), ‘Obfuscated Android Application

Development’

[7]:

Graux

, Lalande, Wilke, and Viet Triem

Tong (2020), ‘Abusing Android Runtime

for Application Obfuscation’

[8]:

Graux

, Lalande, Tong, and Wilke(2021),

‘Preventing Serialization Vulnerabilities

through Transient Field Detection’

The contributions of this thesis are the following:

1.

We propose two new obfuscation methods of the java bytecode,

one targeting the code and the other targeting the data [1, 3, 7].

2.

We conducted two experimental studies of the usage of these

obfuscation methods in the wild [2, 7].

3.

We developed an analysis framework, named OATs’inside, which

combines dynamic and symbolic analysis to retrieve the behavior

of obfuscated Android applications.

4.

We designed and implemented a new detection method of applica-

tion vulnerabilities due to forgotten transient keyword [8].

1.3 Outline

7

1.3 Outline

This dissertation is divided in five parts. The first part contains this

introduction and Chapter 2, that gives the necessary background about

Android native and non-native application analysis techniques.

In order to describe the contributions of this thesis we reflect the cat and

mouse game by dividing the manuscript in two supplementary parts.

Chapters 3 and 4 explore attackers’ possibilities. They describe how

native code can lead to security issues, i.e. code obfuscation or vulnerable

code. These security issues are split on whether they impact Java code

(Chapter 3), or the Java data (Chapter 4). In addition to already known

issues, we introduce new obfuscation techniques.

The next two chapters, Chapters 5 and 6, tackle these security issues by

proposing detection methods and measuring their presence in the wild.

These two chapters are also divided into code and data issues.

The last two chapters before concluding, Chapters 7 and 8, present

OATs’inside, a new Android analysis tool and the technical challenges

involved in its implementation. OATs’inside is a stealth analysis framework

that recovers object-level CFGs of Android applications despite all known

obfuscation techniques.

Finally, Chapter 9 summarizes the contributions of this thesis and gives

perspectives for future work.

Analyzing native Android

applications: state of the art 2

This chapter reviews the contributions related to the security analy-

sis of Android applications. We will focus on approaches that output

qualitative and detailed information about the analyzed application.

During this review, we will recall the technical notions about the Android

architecture.

At the end of the chapter, we focus on the impact of native code on the

challenges introduced in Chapter 2.1, i.e. obfuscation of applications and

vulnerabilities in applications.

We will make a review of the articles of the state-of-the-art that tries

to solve the aforementioned challenges. More thorough comparisons

with our work will be given later in the appropriate chapters of this

manuscript.

Section 2.1 presents goals that researchers follow when analyzing

Android applications. Then, Section 2.2 details the datasets available

for evaluating analysis methods. Section 2.3 reviews the techniques

that are used to achieve the previously described goals. Finally,

Section 2.4 highlights the challenges that native code raises for using

these techniques.

2.1 Research goals

Android applications analyses take an APK file as input. An APK file is

an archive that contains three types of files:

I

metadata: a

Manifest

file that declares the permissions, the services

and the activities of the application.

I

code: files that contain Dalvik bytecode, usually obtained from the

compilation of Java or Kotlin source code.

I resources: additional files such as pictures, fonts, or sounds.

APK files can be processed in various ways: static approaches that only

look at the file itself, or dynamic ones that observe its execution.

Independently of the method used, security researchers have different

common goals in mind:

I

Detecting malicious applications: decide whether a given applica-

tion is malicious or benign.

I

Studying code protection: find new obfuscation techniques and

associated countermeasures.

I

Exposing vulnerable applications: spot security vulnerabilities

inside applications.

10

2 Analyzing native Android applications: state of the art

1: This runtime is described in Section 2.4

Detecting malicious applications

Malicious application detection can

be declined in different annex problems. While some researchers focus

on deciding the maliciousness of an application [21–26], others try to

classify malicious applications into families [27–33]. The definition of

what a family is depends on the context. For example, family can desig-

nate the malicious operation performed such as ransomware, Remote

Access Tool (RAT), adware. It can also designate different versions of

the same malware. Some also try to identify clones and repackaged

applications. Here the goal is to detect when an attacker has introduced

his code inside an other application. These goals are often treated using

artificial intelligence and machine learning algorithms that uses APK

characteristics and artifacts obtained at execution time.

The problem of detecting malicious applications is outside of the scope

of this thesis: as stated in Chapter , we focus on studying code protection

and exposing vulnerable applications. Thus, we will not describe the

entirety of works related to this problem. Nevertheless, we highlight

DroidClone [30] which focuses on a problem close to this thesis: de-

tection of native Android malware specifically. DroidClone provides a

mechanism to build malware signatures. It operates on assembly code

but handles both native code and bytecode by compiling the bytecode

using the compiler provided by the ART runtime

1

. This idea is an elegant

way to handle bytecode and assembly code simultaneously. We used a

similar approach to propose a new obfuscation method in Chapter 3.

Studying code protection

Studying code protection consists in two

opposite goals that both need to be explored. One may want to make the

analysis of an application more difficult. This process is called obfuscation.

At first sight, it could be surprising that some security researchers

try to invent new obfuscation techniques or improve existing ones

since they are used by malicious applications to circumvent analysis

tools. However, benign applications can legitimately use obfuscation,

for example, to protect their intellectual property or to avoid being

repackaged. Additionally, as mentioned in Chapter 2.1, determining

what kinds of obfuscations malware will potentially use in the future

allows to develop countermeasures and tackle malicious application

faster.

On the contrary, some researchers try to break obfuscation. Breaking

an obfuscation technique can itself be divided in different goal varia-

tions discussed hereinafter. It can consist in detecting the usage of the

obfuscation, retrieving the original code, or getting information about

the real application behavior while being agnostic about the targeted

obfuscation.

Detection techniques are useful to determine if a specific obfuscation

technique is used by applications in the wild. It can be used as a first

step, to determine if a deobfuscation technique, potentially resource-

consuming, should be launched. Most of the time detection techniques

try to spot artifacts that reveal traces of the usage of a known obfuscation

technique. Consequently, unknown obfuscation methods are not detected

since their artifacts are also unknown.

When a tool tries to analyze an obfuscated application, it may fail, for

example if an obfuscation technique ciphers the code, therefore making

2.1 Research goals

11

it unavailable for static analysis. Facing this problem, the first solution

is to retrieve the original code of the application in order to work as

if the application had never been obfuscated. This process is called

deobfuscation.

Since deobfuscation is the principal threat of obfuscation, sophisticated

techniques aim at preventingit. In some cases, such as the usage of packers

or reflection methods, the obfuscation cannot be fully reverted. Then,

analysis tools need, in order to work properly, to determine information

that is relevant for their goal but invariant with the obfuscation. For

example, malicious application detection can be conducted over network

communications [34] or system calls patterns [35].

Discussions about code obfuscation in the specific context of native

applications are developed in Sections 3.1 and 5.1.

Exposing vulnerable applications

Exposing vulnerable applications

consists in determining if a given application is vulnerable to security

attacks. This goal seems to be inherently malicious. But, this is also

legitimately used by developers or companies that want to check, before

using it, that an external library or an application is safe. It is also used

by developers to check their own application and, if a vulnerability is

found, patch their application.

First, security researchers can manually look for vulnerabilities and

highlight new problematic issues. For example, Peles and Hay [36]

showed that a missing

transient

keyword in a Java field can lead to

severe exploits if such a field contains a native address. This approach is

precisely described in Section 4.1 because a solution of this problem is

one contribution of this thesis.

Then, for particularly widespread vulnerabilities, researchers design

methods targeting them. This can be done by statically analyzing the

bytecode. Lu et al. [37] and Zhang and Yin [38] looked for component

hijacking vulnerabilities and Sounthiraraj et al. [39] for SSL man-in-

the-middle vulnerabilities. Gu et al. [40] found JNI Global References

exhaustion (JGRE) by statically analyzing native code and bytecode. These

solutions have a high accuracy for detecting the considered vulnerability

but keep bounded to this specific vulnerability.

Some researchers adopt a more generic approach. They do not search

for a specific vulnerability but have designed methods that can work for

different ones. For example, Qian et al. [41] transform the bytecode of

an application into an annotated CFG and translate vulnerabilities into

graph-traversal properties. Dhaya and Poongodi [42] built a machine

learning system that translates application code into N-grams and au-

tomatically learns to recognize vulnerable applications. However, these

approaches [41, 42] do not evaluate their detection ratio but only report

vulnerabilities found in application datasets. While these approaches are

useful in the wild, they cannot prove that a given application is safe.

Some researchers focus on generic dynamic approaches. Sounthiraraj

et al. [39] run the application and redirect external SSL connections to

a crafted server that attempts to perform man-in-the-middle attacks.

Yang et al. [43] and Sasnauskas and Regehr [44] developed an Intent

fuzzer. A fuzzer is a tool that consists in generating invalid and faulty

12

2 Analyzing native Android applications: state of the art

2: Android Interface Definition Language

3: Inter-Process Communication

inputs and pass them to a system under attack. This approach is well

suited for Android. Indeed, in Android, applications communicate with

each other using Intents. Intents are Java objects that are sent trough

the binder, the Android IPC mechanism. In order to work properly, the

binder requires applications to declare, in an AIDL

2

file, the types of

Intents they are willing to receive. This file is stored in the APK archive

and fuzzers exploit this AIDL file to generate inputs that are not rejected

by the application, revealing vulnerabilities. Similarly to the preceding

generic approach, the accuracy detection is difficult to evaluate.

Finally, researchers develop solutions to improve and facilitate the cor-

rection of vulnerabilities. For example, Zhang and Yin [38] automatically

propose a patch for vulnerable bytecode and [45] developed a system to

patch the Android system when manufacturers do not update properly

the system.

In this thesis, we have studied a specific vulnerability involving native

code introduced by Peles and Hay [36] for which we have built a dedicated

method.

The implementation of some of the solutions presented above introduces

additional challenges. First, dynamic systems that emulate Android are

not transparent. This means that malicious applications can detect that

they are being analyzed, and then choose to behave differently. This is

called emulation system evasion [46, 47]. Another challenge, extensively

studied in Android, is the problem of code coverage [48–52]. The Android

application architecture is very modular and event-driven. Applications

are composed of multiple activities and services, that can be triggered

using various ways such as user interaction and IPCs

3

. These services and

activities constitute multiple entry-points of the Android application, in

contrast with classic desktop executables that only have one single entry-

point. This is problematic when a dynamic system wants to stimulate

the execution to cover as much code as possible. For example, Abraham

et al. [49] propose to explore exhaustively the graphical interface of

applications under analysis.

These challenges have not been faced during this thesis and thus, these

solutions are not further described.

2.2 Android application datasets

As in any research field, the Android security community needs datasets

to evaluate their methods and produce easily reproducible experiments.

Thus, various datasets exist [53]:

I

Google Play store: official Android application repository. It con-

tains more than two million applications. However, this dataset

is not suitable for scientific experiments since it highly dynamic:

applications are added, removed or updated frequently. It not

easily retrievable: Google does not provide an API to download

applications. Finally, applications are not labeled as goodware or

malware since malware are removed from the store.

2.2 Android application datasets

13

4: https://www.virustotal.com

5:

https://contagiominidump.

blogspot.com/

6: https://koodous.com/

7: Arbitrarily set to 27 in the graph.

8: See Chapter 3.2.2.1

I

Genome [54]: ground-truth dataset of more than 1,200 malware

samples. This dataset is qualified as ground-truth, meaning that

every application has been manually verified to be malicious. Unfor-

tunately, the service is no longer maintained by the authors. Copies

of this dataset are still available but it is no longer representative of

malware in the wild [55].

I

Drebin [56]: a dataset of 123,453 applications and 5,560 malware.

Malware have been detected using VirusTotal

4

, an online malware

detection service. This dataset is largely used as a detection bench-

mark in the research community, such that at the time of writing, it

has been cited more than two thousands times. However, similarly

to the Genome dataset, it is getting old and not representative. For

example, it contains only very few native applications.

I

AndroZoo [57]: a dataset of more than 13 million applications.

The authors are continuously downloading new samples from

various stores, including the Google Play store. Samples come with

metadata such as size, checksum and retrieve date. Additionally,

they also tag if the application is malicious using VirusTotal.

I

AMD [58]: a ground-truth dataset of 24,553 malware classified

among different families.

I

GM19 [4]: contains two balanced sets of 5,000 goodware (GOOD)

and 5,000 malware (MAL) with an homogeneous distribution of

dates (2015-2018) and APK size to avoid statistical biases.

I

Contagio mobile

5

: web repository containing 252 malicious appli-

cations.

I

Koodous

6

: web repository containing 19 million malware out of 66

million applications.

It is worth noting that Drebin, AndroZoo and GM19 datasets use Virus-

Total, an online detection service which aggregates the results of around

50 antivirus, to classify applications between goodware and malware.

However, we believe that this approach is not reliable. In [2], we collected

2,000 malware samples by downloading each day 20 recent samples from

the Koodous repository and 30 random samples from the AndroZoo

repository. As shown in Figure 2.1, 48% of samples are not recognized by

any antivirus used by VirusTotal. Figure 2.1 shows that there is no obvi-

ous threshold to decide that a sample has been recognized by enough

antiviruses to classify it as a malware. We were expecting a drop of

detection for a certain number of antiviruses

7

, as represented by the

light blue curve. Additionally, these results may change with time, as the

pool of antiviruses used by VirusTotal frequently updates their signature

database. From this experiment, we conclude that using VirusTotal as an

oracle for confirming that a sample is malicious is not reliable, especially

for recent samples.

All the aforementioned datasets are used to test malicious detection

techniques. For obfuscation studies of this thesis, as we do not attempt to

detect malicious applications, we only use datasets to detect the presence

of obfuscation techniques in the wild and thus, focus on recent datasets:

AndroZoo, AMD and GM19.

Also, we have not found any dataset of firmware applications, that

is applications pre-compiled and installed on the smartphone by the

manufacturer or the firmware vendor. Since we have developed an

obfuscation technique specifically for this kind of applications

8

, we have

14

2 Analyzing native Android applications: state of the art

Figure 2.1:

Ratio of recognized malware

by at least x antivirus

9: Alcatel, Archos, Huawei, Samsung,

Sony, Wiko

constructed one for our experiments. We have downloaded 17 firmwares

from six different brands

9

. All the firmwares run Android 7.0 or 7.1.

For each firmware, all compiled applications have been extracted. The

complete list of firmware is available in Appendix A.

For the detection of vulnerable applications, we found only one dataset

named Ghera [59]. It is an open source repository of vulnerable and

safe applications. For each vulnerable application, details about the

vulnerabilities present in the application are provided. Unfortunately,

this dataset does not contain samples for the vulnerability we have

studied in this thesis: missing transient keyword.

2.3 Analysis techniques

To achieve their goals (detecting malicious applications, studying code

protection and exposing vulnerable applications), researchers rely on

several techniques, used as building blocks that can be tuned and com-

bined together to tackle specific problems. This section reviews these

different techniques. Classically, techniques are separated between static,

that study the data and the code of the applications, and dynamic ones,

that observe executions of the applications.

However, these two sets of techniques are not disjoint. For example,

symbolic execution is a static technique since it does not execute the

application. Nevertheless it attempts to mimic a possible set of executions.

On the other side, some dynamic techniques, such as fuzzing, rely on

a preliminary static analysis phase used to configure the subsequent

dynamic phase.

In this section, we have chosen to present techniques from high-level to

low-level. Indeed, this thesis deals with applications composed of Java

and assembly code, that are languages of completely different levels.

Such a classification is relevant in this context.

2.3 Analysis techniques

15

10: Description shown in the Google Play

store and written by the application devel-

oper.

11: Dalvik EXecutables

12: Just-In-Time

2.3.1 System side-effects

We consider as high level techniques, the ones that work with artifacts left

by the execution of the analyzed application rather that the application

itself. For example, Shao et al. [60] observe the network communications

to detect unsafe applications that accept remote commands without

any preliminary authentication phase. Bhatia et al. [61] analyze memory

snapshots and reconstruct a timeline composed of, for example, activities

and services that have been launched. These approaches are too high-level

for handling specifically native code.

2.3.2 Application metadata

Looking at techniques getting closer to the application and the system,

researchers can work on the application metadata. Metadata about

Android applications is stored in the APK archive inside a file called

Manifest. This file contains:

I The list of permissions required by the application.

I The list of activities: classes that represent an interface window.

I The list of services: classes that are launched in the background.

I

The list of receivers: classes that are able to receive messages sent

by other application or by the system.

Metadata-based techniques can work on permissions and API calls [62] or

permissions and application description

10

[63]. Actually, all the informa-

tion stored in the

Manifest

can be used to achieve malicious detection [64].

Again, such approaches cannot be of interest for native code.

2.3.3 Bytecode level

Techniques can look at the application bytecode. This bytecode is stored

inside the APK archive as DEX

11

files. New DEX files can be loaded

during the execution by the bytecode itself. That means static analysis

cannot, in the general case, cover all the code. This bytecode is named

Dalvik bytecode, after the name of the virtual machine that interprets

or JIT-compiles

12

it: the Dalvik VM. This bytecode is a register-based

version of the Java bytecode. Bytecode level analysis techniques are

widely used by researchers because the bytecode is clearly the place

where the behavior of the application is described.

In order to analyze application bytecode, solutions can use bytecode

simplification techniques before conducting their analysis. This allows to

reduce the amount of resources needed to process an application. For

example, SAAF [65] proposes to compute slices of the bytecode according

the dataflow of this instruction. An instruction is part of a slice if, given

a value (variable, object field, ...), this instruction participates to the

computation of this value. Similarly, Harvester [66] slices the program

according to the dataflow of a given value. Then, it executes the obtained

slice and logs, at runtime, the value.

To simplify their analysis, tools can also use Intermediate Representation

(IR) such as Jimple [67]. An Intermediate Representation (IR) is a language

that abstractlower-level languages. It is used to make writing optimization

16

2 Analyzing native Android applications: state of the art

Figure 2.2:

Classical representation of An-

droid system architecture

Applications:

∗Pre-ins

talled apps, user-installed apps

F

ramework:

Activity Manager, Package Manager, Content Providers, ...

Librar

ies:

libc, SSL, OpenGL, ∗Vendors libs, ...

R

untime:

Core libs, Dalvik VM

Linux

Kernel:

Memory manager, Proccess scheduler, ∗Drivers, ...

∗: modified by vendors

A

OSP

13: International Mobile Equipment Iden-

tity, number that identify uniquely a mo-

bile device

14: Android Open Source Project:

https:

//source.android.com/

rules easier. For example, AppSealer [38] uses program slicing according

to dataflow performed over Jimple, rather than on the bytecode, to search

for component hijacking vulnerabilities.

Taint analysis is a common analysis conducted on application bytecode.

In particular, we have used the taint analyzer provided by FlowDroid [68]

to perform the analysis conducted in Section 6.2. A taint analysis consists

in identifying, for a given list of sources, all the sinks that can receive